Please note this is a comparison between Version 1 by Jing Xu and Version 2 by Sirius Huang.

Industrial cameras face challenges such as the randomness of sensor components, scattering and polarization caused by optical defects, environmental factors, and other variables. The resulting noise hinders image recognition and leads to errors in subsequent image processing. An increasing number of papers have proposed methods for denoising RAW images.

- image denoising

- RAW noise

- convolutional neural network

1. Introduction

The field of industrial cameras, as a significant application area of image processing technology, has gained widespread attention and application. Through the acquisition and processing of image data, industrial cameras are extensively utilized in automation inspection, quality control, logistics, and other domains. However, the images captured by industrial cameras often contain noise due to various factors, such as the influence of sensors and internal components of the imaging system [1]. The distribution and magnitude of this noise are non-uniform, severely impacting the retrieval of image information. The removal of image noise has become an indispensable step in image processing. Furthermore, while removing image noise, it is essential to ensure the preservation of complete image information.

In recent years, image denoising has become a prominent research area in computer vision and image processing. Research methods can be broadly categorized into two groups: traditional denoising methods and deep learning-based methods. Among the traditional methods, a representative example is non-local means (NLM), proposed by Buades [2], which removes noise by exploiting the similarity between pixels in an image. Subsequently, Dabov, Foi [3], and others introduced the block matching and 3D filtering (BM3D) technique, which identifies blocks similar to the current block through block matching and applies 3D filtering to these blocks, resulting in a denoised image. Mairal, Bach, et al. [4] proposed dictionary learning-based sparse representation and self-similarity non-local methods, both of which exploit the properties of the image to eliminate noise [5]. Other popular image denoising methods include, but are not limited to, denoising using Markov random fields [6,7,8], gradient-based denoising [9,10], and total variation denoising [11,12,13].
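To make the idea behind non-local means concrete, the following is a minimal sketch, not the implementation from [2]. It assumes a 2-D grayscale image with values in roughly [0, 1]; the function name `nlm_denoise` and the parameters `patch_radius`, `search_radius`, and `h` are illustrative choices. Each pixel is replaced by a weighted average of the pixels in a search window, with weights that decay as the surrounding patches become less similar.

```python
import numpy as np


def nlm_denoise(img, patch_radius=1, search_radius=3, h=0.1):
    """Naive non-local means for a 2-D float image (slow; for illustration only).

    h is a hypothetical filtering-strength parameter: larger values give
    dissimilar patches more weight and therefore smooth more strongly.
    """
    img = np.asarray(img, dtype=np.float64)
    pr, sr = patch_radius, search_radius
    pad = pr + sr
    # Reflect-pad so every patch in every search window is fully defined.
    padded = np.pad(img, pad, mode="reflect")
    height, width = img.shape
    out = np.zeros_like(img)

    for y in range(height):
        for x in range(width):
            cy, cx = y + pad, x + pad
            ref = padded[cy - pr:cy + pr + 1, cx - pr:cx + pr + 1]
            weight_sum = 0.0
            value_sum = 0.0
            for dy in range(-sr, sr + 1):
                for dx in range(-sr, sr + 1):
                    ny, nx = cy + dy, cx + dx
                    cand = padded[ny - pr:ny + pr + 1, nx - pr:nx + pr + 1]
                    # Patch similarity: mean squared difference of the two patches.
                    dist2 = np.mean((ref - cand) ** 2)
                    w = np.exp(-dist2 / (h * h))
                    weight_sum += w
                    value_sum += w * padded[ny, nx]
            # The centre patch always contributes weight 1, so weight_sum > 0.
            out[y, x] = value_sum / weight_sum
    return out
```

The quadruple loop is written for readability. Practical implementations vectorize the patch comparison or use integral images, and BM3D goes further by filtering the stack of matched blocks jointly.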

The aforementioned methods are conventional denoising approaches that achieved decent results in image denoising at the time. However, they suffer from several issues: (1) reliance on manual design and prior knowledge, (2) the need for extensive parameter tuning, and (3) difficulty in handling complex noise. In contrast, deep learning-based image denoising methods exhibit strong learning capabilities, which enable them to fit complex noise distributions, and they run efficiently through parallel computation on GPUs. Initially, multilayer perceptrons (MLPs) [14,15] and autoencoders [16,17,18] were employed for denoising tasks, but due to their limited network capacity and inability to effectively capture noise characteristics, they fell short of the performance achieved by traditional methods. The adoption of ResNet-style residual learning by Zhang [19] addressed these issues, enabling continuous improvement in the performance of deep learning-based denoising and gradually establishing its dominance in the field. In recent years, the emergence of ViT [20,21,22] brought Transformers to the field of computer vision, with remarkable results. Today, Transformers [23,24,25,26] and CNNs [27,28,29] are the mainstream methods in image denoising.

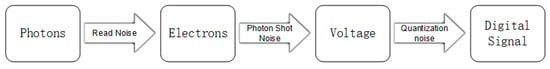

Deep learning-based denoising methods can model noise effectively. However, current mainstream deep learning approaches focus primarily on denoising RGB images. As shown in Figure 1, several types of noise are introduced during the camera imaging process [30]. Hence, denoising methods designed for RGB images perform unsatisfactorily when applied to RAW images. In recent years, an increasing number of papers have proposed methods for denoising RAW images. Zhang [1] modeled the noise in RAW images and made assumptions about the different types of noise they contain, enabling the network to learn the noise distribution more effectively. Subsequently, Wei [31] presented a model for synthesizing realistic noisy images, forming a noise formation model for RAW images under extreme low light, and proposed a noise parameter correction scheme. Meanwhile, in the field of RGB denoising, Brooks [32] introduced an approach that converts RGB images to RAW format for denoising and then converts them back to RGB format, yielding significant improvements.

Figure 1. Imaging noise generating processes.

2. RAW Image

For denoising, working directly on RAW images is preferable to working on sRGB images that have passed through the camera's Image Signal Processing (ISP) unit. ISP processing includes steps such as white balance, denoising, gamma correction, color channel compression, and demosaicking, which cause a loss of information in high spatial frequencies and in dynamic range. Moreover, the nature of the image noise becomes more complex and harder to handle. Noise is introduced throughout the camera imaging process, as photons are converted to electrons, then to a voltage, and finally to a digital signal. These noise sources primarily include thermal noise, photon shot noise, readout noise, and quantization error. In the ISP modules, noise continues to be generated and amplified, and its statistical characteristics are altered, which increases its impact on image quality and makes its characteristics increasingly difficult to control. Therefore, it is more feasible and effective to perform denoising on RAW images before ISP processing. In their experiments, Brooks [32] inverted RGB images to RAW images and fed the estimated noise into the network for denoising. Their approach achieved promising results in the field of RGB image denoising.
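The noise sources listed above can be illustrated with a simple physics-inspired simulation. The sketch below is not the noise model of any cited paper. It assumes a Poisson model for photon shot noise, a zero-mean Gaussian term for readout noise (with thermal noise folded into the same term as a simplification), and rounding to integer digital numbers (DN) for quantization. The function name `synthesize_raw_noise` and the parameters `gain`, `read_std`, and `bit_depth` are hypothetical.

```python
import numpy as np


def synthesize_raw_noise(clean, gain=0.5, read_std=2.0, bit_depth=12, rng=None):
    """Add simulated sensor noise to a clean RAW signal.

    clean    : expected signal in DN, black level already subtracted (assumption)
    gain     : DN per photo-electron (assumed constant over the sensor)
    read_std : standard deviation of the Gaussian read noise, in DN
    """
    rng = np.random.default_rng() if rng is None else rng
    clean = np.asarray(clean, dtype=np.float64)
    white_level = 2 ** bit_depth - 1

    # Photon shot noise: photo-electron counts follow a Poisson distribution,
    # so the variance of this term grows with the signal (signal-dependent).
    electrons = rng.poisson(np.clip(clean, 0.0, None) / gain)
    signal = electrons * gain

    # Readout noise (thermal noise folded in): signal-independent Gaussian.
    signal = signal + rng.normal(0.0, read_std, size=clean.shape)

    # Quantization error: the ADC rounds to integers and clips to its range.
    return np.clip(np.round(signal), 0, white_level)
```

Under these assumptions, and away from clipping, the noise variance is approximately `gain * clean + read_std**2 + 1/12`, where the last term is the variance of the rounding error. The first term is the signal-dependent part and the remaining terms are signal-independent.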

3. RAW Image Denoising

Image denoising has always been an essential part of computer vision. With the advent of deep learning, denoising with deep neural networks has become the mainstream approach. Early deep learning denoising methods primarily focused on removing additive white Gaussian noise from RGB images. However, on the RAW image denoising benchmark established in 2017 [33][34], these methods outperformed some traditional approaches but still performed poorly on the original image data. In RAW images, neighboring pixels belong to different color channels and are only weakly correlated, so the traditional assumption of pixel smoothness does not hold. Furthermore, since each pixel in RAW image data contains information for only one color channel, denoising algorithms designed for color images are not applicable. In recent years, noise modeling methods, such as those proposed by Wang [34][36] and Wei [31], have simulated the noise distribution generated in the image signal processing (ISP) pipeline and achieved promising denoising results through network-based learning. Feng [35][37] introduced a method that decomposes real noise into shot noise and read noise, improving the accuracy of data mapping. Zhang [36][38] extended this by proposing two components of noise synthesis, signal-independent and signal-dependent, which were implemented using different methods. Noise modeling provides a good understanding of the statistical characteristics and distribution patterns of noise, which helps in removing it. In practical applications, however, noise is diverse and is influenced by factors such as sensor temperature and environmental lighting. A single noise model cannot fully describe all noise situations, resulting in unsatisfactory denoising performance in specific scenarios.
Therefore, the researchers employ a real noise dataset to fit image noise, aiming to achieve denoising effects that accurately simulate and reproduce noise conditions in the real world. This approach enables the algorithm to learn more types and features of noise, enhancing its generalization capability and adaptability. Consequently, the algorithm becomes more versatile, applicable to a wide range of scenarios, and more closely aligned with real-world applications.
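The observation that neighboring RAW pixels belong to different color channels is often handled by rearranging the mosaic before it is passed to a network. The following is a minimal sketch of that preprocessing step, assuming an RGGB Bayer layout and even image dimensions; the function names `pack_bayer_rggb` and `unpack_bayer_rggb` are illustrative and are not taken from the cited works. Other sensors use other layouts, in which case the plane order changes.

```python
import numpy as np


def pack_bayer_rggb(raw):
    """Split an (H, W) RGGB mosaic into a (4, H/2, W/2) array of color planes.

    Plane order is R, G1, G2, B, so each plane holds samples of one channel only.
    """
    raw = np.asarray(raw)
    if raw.ndim != 2 or raw.shape[0] % 2 or raw.shape[1] % 2:
        raise ValueError("expected a 2-D mosaic with even height and width")
    return np.stack(
        [
            raw[0::2, 0::2],  # R  (even rows, even columns)
            raw[0::2, 1::2],  # G1 (even rows, odd columns)
            raw[1::2, 0::2],  # G2 (odd rows, even columns)
            raw[1::2, 1::2],  # B  (odd rows, odd columns)
        ],
        axis=0,
    )


def unpack_bayer_rggb(packed):
    """Inverse of pack_bayer_rggb: rebuild the (H, W) mosaic from four planes."""
    packed = np.asarray(packed)
    _, half_h, half_w = packed.shape
    raw = np.empty((2 * half_h, 2 * half_w), dtype=packed.dtype)
    raw[0::2, 0::2] = packed[0]
    raw[0::2, 1::2] = packed[1]
    raw[1::2, 0::2] = packed[2]
    raw[1::2, 1::2] = packed[3]
    return raw
```

Within each packed plane, adjacent samples come from the same color channel, so the smoothness assumptions that convolutional denoisers rely on become more reasonable, at the cost of halving the spatial resolution of each plane.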