
Please note this is a comparison between Version 2 by Sirius Huang and Version 1 by Tiexin Wang.

Microsurgical techniques have been widely used in various surgical specialties, such as ophthalmology, neurosurgery, and otolaryngology, which require intricate and precise manipulation of surgical tools on a small scale. Operating on delicate vessels or tissues demands a high level of surgical skill. This exceptionally high skill requirement leads to a steep learning curve and lengthy training before surgeons can perform microsurgical procedures with quality outcomes. To address these challenges, a growing number of researchers have begun to explore the use of robotic technologies in microsurgery and have developed various microsurgery robotic (MSR) systems.

- microsurgery robot (MSR)

- mechanism design

- imaging and sensing

- control and automation

- human–machine interaction (HMI)

1. Introduction

Microsurgery is a surgical procedure that involves operating on small structures with the aid of a surgical microscope or other magnifying instrument. The visual magnification allows surgeons to operate on delicate structures with greater precision and accuracy, resulting in better treatment outcomes. With its unique advantages, microsurgery has been widely adopted in various surgical specialties, including ophthalmology, otolaryngology, neurosurgery, reconstructive surgery, and urology, where intricate and precise surgical tool manipulation on a small scale is required [1].

Despite its benefits, microsurgery also presents significant challenges. Firstly, the small and delicate targets in microsurgery require a high level of precision, where even the slightest tremor may cause unnecessary injury [2]. Microsurgery requires complex operations under limited sensory conditions, such as limited microscope field of view and low tool–tissue interaction force [3]. This is demanding on the surgeon’s surgical skills and requires extensive training before the surgeon can perform such surgical procedures clinically. In addition, microsurgery often requires the surgeon to perform prolonged tasks in uncomfortable positions, which can lead to fatigue and increase the risk of inadvertent error [4].

To address these challenges, a growing number of researchers have begun to explore the use of robotic technologies in microsurgery and have developed various microsurgery robotic (MSR) systems. The MSR system has the potential to make a significant impact in the field of microsurgery. It can provide surgeons with increased precision and stability by functions such as tremor filtering and motion scaling [5]. By integrating various perception and feedback technologies, it can provide richer information about the surgical environment and offer intuitive intraoperative guidance [4,6][4][6]. It can also enhance surgeons’ comfort during surgical operations through ergonomic design. With the above features, surgeons can improve their surgical performance with the help of MSR and even accomplish previously impossible surgical tasks [7].

2. Key Technologies of the MSR Systems

2.1. Concept of Robotics in Microsurgery

Robotics has broad applications and great potential in microsurgery. This section introduces the key technologies of MSR systems in detail, with the aim of giving readers a more comprehensive understanding of how MSR systems work and providing valuable references for future research.

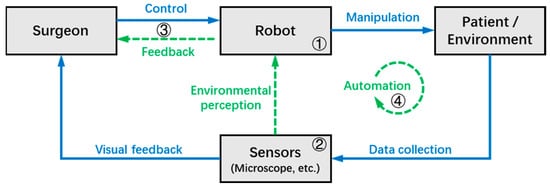

The typical workflow of an MSR system is summarized in Figure 1, where the gray boxes and blue arrows indicate, respectively, the elements and processes involved in most MSR systems, while the green arrows indicate additional functions available in some systems. During robotic surgery, the surgeon transmits control commands to the MSR directly or remotely, and the MSR in turn operates the surgical instruments to interact with the target tissues with improved precision and stability. At the same time, surgical information is collected by the microscope and other sensors in real time and transmitted to the surgeon as visual feedback.

Figure 1.

The typical workflow of the MSR system.

In some MSR systems, the robot can perceive its environment with the help of various sensors, and information that can help improve surgical outcomes (e.g., tool–tissue interaction force, tool tip depth, and danger zones) is fed back to the surgeon through the human–machine interface in different forms. Furthermore, some MSR systems have achieved a certain level of autonomy based on adequate environmental perception, allowing the robot to perform certain tasks autonomously under the surgeon's supervision.

Based on the workflow of the MSR system illustrated in Figure 1, this chapter divides the key technologies of MSR systems into four parts, which are introduced in the following sections:

- ➀ Operation modes and mechanism designs: As the foundation of the robot system, this section discusses the structural design and control types of the MSR;

- ➁ Sensing and perception: This is the medium through which surgeons and robots perceive the surgical environment; this section discusses the techniques that MSR systems use to collect environmental data;

- ➂ Human–machine interaction (HMI): This section focuses on the interaction and collaboration between the surgeon and the MSR, discussing techniques that can improve the surgeon's precision and comfort and provide more intuitive feedback on surgical information;

- ➃ Automation: This section discusses technologies that allow the robot to perform surgical tasks automatically or semi-automatically, which can improve surgical efficiency and reduce the surgeon's workload.

2.2. MSR Operation Modes and Mechanism Designs

Based on the control method, MSRs can be broadly classified into handheld, teleoperated, co-manipulated, and partially automated robots; Figure 2 orders these categories from low to high degree of robotic control. All of them use a microscope and/or OCT for visual feedback. In traditional surgery, the surgeon controls the surgical tools by hand, using the microscope for visual feedback. MSRs bring great convenience to surgery because the surgeon no longer needs to perform the operation completely manually.

Figure 2.

Types of surgical robots. (a) Handheld robot, (b) Teleoperated robot, (c) Co-manipulated robot, (d) Partially automated robot.

(a) Handheld robot. In the handheld robotic system, the surgical tool itself is retrofitted into a miniature robotic system called a robotic tool. The surgeon manipulates it to perform the surgical procedure. The robotic tool provides tremor elimination, depth locking, and other functions. “Micron” is a typical example [52][8].

(b) Teleoperated robot. In the teleoperated robotic system, the surgeon manipulates the master module to control the slave module, which replaces the surgeon's hand in manipulating the surgical tool. The system integrates motion scaling and tremor filtering through servo algorithms. In addition, it achieves three-dimensional perception by integrating haptic feedback or depth perception algorithms at the end of the surgical tool. A typical example is the “Preceyes Surgical System” [53,54][9][10].

(c) Co-manipulated robot. In the co-manipulated robotic system, the surgeon and the robot manipulate the surgical tool simultaneously. The surgeon directly controls the motion of the tool by hand, while the robot also holds the tool, compensating for hand tremor and allowing the tool to be held still for prolonged periods. “Co-manipulator” is a typical example [22][11].

(d) Partially automated robot. In the partially automated robotic system, specific procedures or steps of procedures are performed automatically by the robot. The robot directly manipulates and controls the motion of the surgical tool, using processed image information as feedback and guidance. At the same time, visual information is transmitted to the surgeon, who can issue override commands at any time to supervise the partially automated procedure. “IRISS” is a typical example [55][12].
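The motion scaling and tremor filtering described above for teleoperated robots can be illustrated with a minimal sketch: master-handle positions are passed through a first-order low-pass filter (to attenuate tremor, which is commonly described as lying around 8–12 Hz) and then scaled down before being sent to the slave tool. The scale factor, cutoff frequency, and sampling rate below are illustrative assumptions, not parameters of the Preceyes system or any other cited robot.

```python
import math


def make_teleop_filter(scale=0.1, cutoff_hz=2.0, sample_hz=1000.0):
    """Return a function mapping master positions (mm) to slave commands (mm).

    scale:     motion-scaling factor (0.1 means 10 mm at the master -> 1 mm at the tool)
    cutoff_hz: cutoff of a first-order low-pass filter used to attenuate tremor
    All default values are illustrative assumptions.
    """
    dt = 1.0 / sample_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor of the discrete first-order filter
    state = {"filtered": None}

    def step(master_pos):
        x = tuple(master_pos)
        if state["filtered"] is None:
            state["filtered"] = x  # initialise the filter on the first sample
        else:
            state["filtered"] = tuple(
                f + alpha * (xi - f) for f, xi in zip(state["filtered"], x)
            )
        # scale the smoothed master motion down to the tool workspace
        return tuple(scale * f for f in state["filtered"])

    return step
```

Calling the returned function once per control cycle with the master position yields the scaled, smoothed tool command; a slow, deliberate 10 mm hand movement becomes a 1 mm tool movement, while a 10 Hz oscillation is attenuated to roughly a fifth of its amplitude before scaling. A clinical controller would likely use a more carefully designed (possibly adaptive) filter and handle clutching and workspace limits.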

Based on their structure, MSRs are broadly classified into RCM and non-RCM mechanisms. Taylor et al. first introduced the concept of the remote center of motion (RCM) for motion control in 1995; it constrains the surgical tool to pivot about a fixed point at the surgical incision, so the tool's movement is restricted at the incision [56][13]. Therefore, depending on whether the surgical environment is closed or open, an MSR adopts an RCM or a non-RCM structure, respectively.
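To make the RCM constraint concrete: if the tool must pivot about a fixed point P at the incision, then any desired tip position T fully determines the tool's orientation (the unit vector from P to T) and its insertion depth (the distance from P to T). The sketch below computes these quantities; the frame convention (z pointing up, reference insertion straight down along −z) and the yaw/tilt parameterisation are assumptions chosen for illustration.

```python
import math


def rcm_tool_pose(pivot, tip):
    """Tool orientation and insertion depth that place the tip at `tip` while the
    shaft passes through the fixed pivot (incision) point.

    Returns (axis, depth, yaw, tilt):
      axis  - unit vector from the pivot towards the tip
      depth - insertion length beyond the pivot (same units as the inputs)
      yaw   - rotation of the axis about the vertical z axis (rad)
      tilt  - angle between the axis and straight-down insertion along -z (rad)
    The frame convention is an assumption for this sketch.
    """
    d = [t - p for t, p in zip(tip, pivot)]
    depth = math.sqrt(sum(c * c for c in d))
    if depth == 0.0:
        raise ValueError("tip coincides with the pivot; orientation is undefined")
    axis = [c / depth for c in d]
    yaw = math.atan2(axis[1], axis[0])
    tilt = math.acos(max(-1.0, min(1.0, -axis[2])))  # clamp against rounding errors
    return axis, depth, yaw, tilt


# Incision at the origin, target 1 mm to the side and 5 mm below it (hypothetical).
axis, depth, yaw, tilt = rcm_tool_pose((0.0, 0.0, 0.0), (1.0, 0.0, -5.0))
print(round(depth, 2), round(math.degrees(tilt), 1))  # 5.1 11.3
```

Only two rotations and one insertion are needed to reach the target, which is why RCM designs can use few actuated degrees of freedom while never moving the shaft laterally at the incision.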

Open surgical environments, such as in neurosurgery, mostly use non-RCM mechanism robots. This type of structure is flexible and is not limited by the shape of the mechanism, and a serial robotic arm can be used directly as the MSR's manipulator.

Enclosed surgical environments, such as in ophthalmic surgery, mostly use RCM mechanism robots. According to the implementation method, RCM mechanisms are divided into passive-joint, active-control, and mechanical-constraint types.

- Passive-joint RCM mechanism.

This type generally consists of two passive rotary joints (2 DOF) whose axes intersect perpendicularly, and the RCM is achieved through the motion of the robotic arm's active joints under the constraint of the incision on the patient's body surface. This design guarantees safety while reducing the number of joints and the size of the mechanism. However, it is easily affected by the compliance of the tissue at the incision, and it is difficult to determine the exact position of instrument insertion, which degrades manipulation precision. “MicroHand S” is a typical example [63][14].

- Active-control RCM mechanism.

In this type of RCM mechanism, the RCM of the surgical tools around the incision is generally achieved through programmable control, which is usually called a virtual RCM. This design is simple in structure and flexible in form. However, the precision of tool movement depends on the precision and stability of the control system, and a general concern is that system safety relies entirely on the control algorithm. “RAMS” is a typical example [68][15].

- Mechanical-constraint RCM mechanism.

This type generally uses a specific mechanical mechanism to achieve the RCM of surgical tools and is characterized by high safety under mechanical constraint. According to their mechanical structure, commonly used mechanical-constraint RCM mechanisms are divided into four categories: triangular, arc, spherical, and parallelogram structures. Triangular RCM mechanisms offer high structural stiffness but suffer from significant joint coupling [69][16]. Recent research has proposed a novel dual delta structure (DDS) that can switch between wide-range and precise motion by adjusting its workspace and precision, meeting the requirements of both intraocular and reconstructive surgery; however, the disadvantages of the triangular mechanism remain evident [61][17]. The arc RCM mechanism has a simple structure but is bulky and difficult to drive [60][18]. The spherical RCM mechanism is very compact but has poor stability and is prone to motion interference [59][19]. Among these, the parallelogram RCM mechanism is the most widely used because of its clear advantages, and researchers have explored various parallelogram-based designs to improve it further. Integrating a synchronous belt into the parallelogram mechanism can simplify the linkage and make the structure more compact [58][20]. Some researchers have used a six-bar structure to further improve structural stability and increase the diversity of drive options [70][21].
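Because an active-control (virtual) RCM is enforced only in software, the controller needs some way to monitor how far the tool shaft drifts from the incision point. One simple measure, sketched below as an assumption rather than the method used in RAMS or any other cited system, is the perpendicular distance from the incision point to the line through two tracked points on the shaft, compared against a tolerance. The tolerance value is hypothetical.

```python
import math


def rcm_deviation(incision, shaft_a, shaft_b):
    """Perpendicular distance from the incision point to the infinite line through
    two distinct points on the tool shaft (same units as the inputs)."""
    ab = [b - a for a, b in zip(shaft_a, shaft_b)]
    ap = [p - a for a, p in zip(shaft_a, incision)]
    # |AB x AP| / |AB| is the distance from P to the line AB
    cx = ab[1] * ap[2] - ab[2] * ap[1]
    cy = ab[2] * ap[0] - ab[0] * ap[2]
    cz = ab[0] * ap[1] - ab[1] * ap[0]
    norm_ab = math.sqrt(sum(c * c for c in ab))
    if norm_ab == 0.0:
        raise ValueError("shaft points must be distinct")
    return math.sqrt(cx * cx + cy * cy + cz * cz) / norm_ab


def rcm_ok(incision, shaft_a, shaft_b, tol_mm=0.1):
    """True if the shaft stays within tol_mm of the incision (hypothetical tolerance)."""
    return rcm_deviation(incision, shaft_a, shaft_b) <= tol_mm
```

In a virtual-RCM controller, a check like this could run every cycle and trigger a stop or correction when the tolerance is exceeded; mechanical-constraint designs make such a check unnecessary because the geometry enforces the pivot.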

2.3. Sensing and Perception in MSR

2.3.1. Imaging Modalities

Imaging technologies play a crucial role in MSR systems. In traditional microsurgery, surgeons rely primarily on the operating microscope to observe the surgical environment, which helps surgeons clearly observe the tissue and perform precise surgery by magnifying the surgical site. As technology continues to evolve, an increasing number of imaging techniques are being used in MSR systems to provide additional information about the surgical environment. These imaging techniques can help surgeons better identify the target tissue or guide the intraoperative movements of the robot, so as to achieve better surgical outcomes. This section will introduce imaging techniques with current or potential applications in MSR.

- Magnetic resonance imaging and computed tomography

Magnetic resonance imaging (MRI) and computed tomography (CT) are medical imaging technologies that can generate high-resolution 3D images of organs. MRI uses magnetic fields and radio waves to produce images with a spatial resolution of about 1 mm [71][22]. CT, on the other hand, uses X-rays and computer processing to create images with higher, sub-millimeter spatial resolution [72][23]. Both have been widely used in preoperative and postoperative diagnosis and surgical planning.

Some researchers have attempted to apply MRI to robot systems to achieve intraoperative image guidance. A number of MRI-guided robot systems have been developed for stereotactic [73,74][24][25] or percutaneous interventions [75,76][26][27]. In the microsurgery field, Sutherland et al. designed an MRI-compatible MSR system for neurosurgery [77][28]; the robotic arm is made of non-magnetic materials, such as titanium and polyetheretherketone, to prevent magnetic fields or gradients from affecting its performance and to ensure that the robot does not significantly degrade image quality. The system aligns the robot arm position with intraoperative MRI scans, allowing stereotaxy to be performed within the imaging environment (magnet bore) and microsurgery outside the magnet [37,65][29][30]. Fang et al. [78][31] proposed a small (Ø12 × 100 mm) 5-DOF soft robot system for MRI-guided transoral laser microsurgery, in which intraoperative thermal diffusion and tissue ablation margins are monitored by magnetic resonance thermometry.

- Optical coherence tomography

Optical coherence tomography (OCT) is a non-invasive imaging technique that uses low-coherence light to produce 2D and 3D images within light-scattering media. It can capture high-resolution (5–20 μm) images in real time [79][32] and visualize both surgical instruments and subsurface anatomy, and it has been widely applied in ophthalmology [80,81][33][34]. Many researchers have integrated OCT with MSR systems to provide intraoperative guidance in different dimensions (A-Scan for 1D depth information, B-Scan for 2D cross-sectional information, and C-Scan for 3D information) for better surgical outcomes.
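The 5–20 μm resolution range is set mainly by the light source's spectral bandwidth. For a source with a Gaussian spectrum, the axial (depth) resolution in air is commonly given by Δz = (2 ln 2/π)·λ0²/Δλ, where λ0 is the centre wavelength and Δλ the full-width-at-half-maximum bandwidth; inside tissue it is further divided by the group refractive index. The sketch evaluates this formula. The 1060 nm centre wavelength and 100 nm bandwidth are assumed example values, not the specifications of any device cited here.

```python
import math


def oct_axial_resolution_um(center_nm, bandwidth_nm, n_group=1.0):
    """Axial resolution (micrometres) for a Gaussian-spectrum OCT source:
    dz = (2 ln2 / pi) * center^2 / bandwidth, divided by the medium's group index.

    center_nm:    centre wavelength (nm)
    bandwidth_nm: FWHM spectral bandwidth (nm)
    n_group:      group refractive index of the medium (1.0 for air)
    """
    if bandwidth_nm <= 0:
        raise ValueError("bandwidth must be positive")
    dz_nm = (2.0 * math.log(2.0) / math.pi) * center_nm ** 2 / bandwidth_nm
    return dz_nm / n_group / 1000.0


# Assumed example source: 1060 nm centre wavelength, 100 nm bandwidth, in air.
print(round(oct_axial_resolution_um(1060.0, 100.0), 2))  # 4.96
```

Halving the bandwidth doubles Δz, which is why broadband sources are needed to reach the fine end of the quoted range.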

Cheon et al. integrated an OCT fiber into a handheld MSR driven by a piezo motor and used the feedback from OCT A-Scans to achieve active depth locking, which effectively improved the stability of the surgeon's grasping actions [82,83][35][36]. Yu et al. designed OCT-forceps, surgical forceps with OCT B-Scan functionality, which were installed on a teleoperated MSR to enable OCT-guided epiretinal membrane peeling [84][37]. Gerber et al. combined an MSR with a commercially available OCT scanning device (Telesto II-1060LR, Thorlabs, NJ, USA), enabling intraoperative ocular tissue identification and tool tip visual servoing, and completed several OCT-guided robotic surgical tasks, including semi-automated cataract removal [55][12], automated posterior capsule polishing [85][38], and automated retinal vein cannulation [22][11].
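A-Scan-based depth locking of the kind described for Cheon et al.'s handheld MSR can be pictured as a simple feedback loop: locate the tissue surface in each A-Scan, convert its position to a tool-to-tissue distance, and command the actuator to hold that distance. The sketch below is a hypothetical proportional controller; the threshold-based surface detector, the assumption that sample 0 lies at the tool tip, the pixel spacing, the gain, and the step limit are all illustrative assumptions rather than the published implementation.

```python
from typing import Optional, Sequence


def surface_index(a_scan: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first A-Scan sample whose intensity exceeds `threshold`
    (a crude tissue-surface detector), or None if no sample does."""
    for i, value in enumerate(a_scan):
        if value > threshold:
            return i
    return None


def depth_lock_step(a_scan, target_mm, px_mm=0.005, threshold=0.5,
                    gain=0.5, max_step_mm=0.05):
    """One control update. Returns an actuator displacement in mm
    (positive = advance toward the tissue). Assumes sample 0 is at the tool tip."""
    idx = surface_index(a_scan, threshold)
    if idx is None:
        return 0.0  # surface not detected: hold position
    distance_mm = idx * px_mm
    error = distance_mm - target_mm  # > 0: tool is too far away, so advance
    step = gain * error
    return max(-max_step_mm, min(max_step_mm, step))  # limit each correction
```

Clamping each correction keeps a single noisy A-Scan from producing a large jump; a real system would also filter the surface estimate and account for actuator dynamics.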

- Near-infrared fluorescence

Near-infrared fluorescence (NIRF) imaging is a non-invasive imaging technique that uses near-infrared (NIR) light and NIR fluorophores to visualize biological structures and processes in vivo [86][39], and it can provide images with up to sub-millimeter resolution [87][40]. NIRF imaging with the indocyanine green (ICG) fluorophore has been widely used in surgery to identify and localize important tissues such as blood vessels, lymphatic vessels, and ureters [86,88][39][41]. Compared with white-light imaging, NIRF imaging with ICG offers better optical contrast, a higher signal-to-background ratio, and deeper photon penetration (up to 10 mm, depending on the tissue properties) [89,90,91][42][43][44].
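The signal-to-background ratio mentioned above is usually quantified from the image itself, for example as the mean intensity in a region of interest over the target divided by the mean intensity in a nearby background region. The sketch below applies this definition to an image stored as a list of rows; the region coordinates and the synthetic frame are hypothetical, and other definitions (for example, ones using the background standard deviation, which are closer to a contrast-to-noise ratio) are also in use.

```python
from statistics import mean


def roi_mean(image, roi):
    """Mean intensity of a rectangular ROI given as (row0, row1, col0, col1), end-exclusive."""
    r0, r1, c0, c1 = roi
    return mean(v for row in image[r0:r1] for v in row[c0:c1])


def signal_to_background_ratio(image, signal_roi, background_roi):
    """SBR = mean(signal ROI) / mean(background ROI)."""
    background = roi_mean(image, background_roi)
    if background <= 0:
        raise ValueError("background mean must be positive")
    return roi_mean(image, signal_roi) / background


# Synthetic 64 x 64 frame (hypothetical values): background of 10 counts with a
# bright 8-pixel-wide "vessel" band of 60 counts.
frame = [[60.0 if 28 <= c < 36 else 10.0 for c in range(64)] for _ in range(64)]
print(signal_to_background_ratio(frame, (0, 64, 28, 36), (0, 64, 0, 16)))  # 6.0
```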

The da Vinci surgical robot system (Intuitive Surgical, Sunnyvale, CA, USA) is equipped with a fluorescence imaging system called Firefly (Novadaq Technologies, Toronto, Canada), which allows surgeons to use NIRF imaging during robotic surgery to better visualize tissues such as blood vessels and extrahepatic bile ducts [92][45]. Gioux et al. used a microscope with an integrated NIRF system for lympho-venous anastomosis (LVA) and found that NIRF guidance during microsurgery accelerated the surgeon's identification and dissection of lymphatic vessels [93][46]. Using NIRF-based preoperative diagnosis and target marking, Mulken et al. successfully performed robotic microsurgical LVA with the MUSA microsurgery robot [66][47]. At present, no MSR system uses intraoperative NIRF imaging to guide microsurgery.

- Other imaging technologies

Other imaging techniques also have the potential to be integrated with MSR systems to provide intraoperative guidance. One such technique is probe-based confocal laser endomicroscopy (pCLE), which uses a fiber-optic probe to capture cellular-level images (up to 1 μm resolution [94][48]) of tissues in vivo. It enables real-time, on-the-spot diagnosis and can generate larger maps of tissue morphology through mosaicking [95][49]. Latt et al. designed a handheld force-controlled robot to help acquire consistent pCLE images during transanal endoscopic microsurgery [96][50]. Li et al. used pCLE as the end-effector of a collaborative robot and proposed a hybrid control framework to improve the image quality of robot-assisted, intraocular, non-contact pCLE scanning of the retina [97][51].
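Mosaicking generally starts by estimating how far the probe moved between consecutive frames and then placing each frame on a common canvas at the accumulated offset. A common way to estimate a pure translation is phase correlation, sketched below with NumPy. This is a generic illustration that assumes rigid, integer-pixel, wrap-around shifts; it is not the specific algorithm used in the cited pCLE work, and practical pipelines typically add windowing, sub-pixel refinement, and handling of partial overlap.

```python
import numpy as np


def phase_correlation_shift(ref, moved):
    """Integer (dy, dx) such that `moved` is approximately `ref` shifted by (dy, dx)."""
    cross = np.fft.fft2(moved) * np.conj(np.fft.fft2(ref))
    cross /= np.abs(cross) + 1e-12  # keep only the phase difference
    corr = np.fft.ifft2(cross).real  # ideally a single sharp peak at the shift
    dy, dx = np.unravel_index(int(np.argmax(corr)), corr.shape)
    rows, cols = corr.shape
    if dy > rows // 2:  # indices past the midpoint are negative shifts
        dy -= rows
    if dx > cols // 2:
        dx -= cols
    return int(dy), int(dx)


def frame_offsets(frames):
    """Cumulative offset of every frame relative to the first, for placing it in a mosaic."""
    offsets = [(0, 0)]
    for prev, cur in zip(frames, frames[1:]):
        dy, dx = phase_correlation_shift(prev, cur)
        offsets.append((offsets[-1][0] + dy, offsets[-1][1] + dx))
    return offsets
```

For example, if a frame is the previous one shifted by 5 rows and −7 columns, `phase_correlation_shift` returns `(5, -7)`, and `frame_offsets` accumulates such pairwise shifts along the scan.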

Another imaging technique that has gained popularity in recent years is the exoscope, which is essentially a high-definition video camera mounted on a long arm. It observes and illuminates the operative field from a position remote from the patient's body and projects magnified, high-resolution images onto a monitor to assist the surgeon [98][52]. In addition, the exoscope can be integrated with NIRF imaging for improved surgical visualization [99][53]. Compared with the operating microscope, the exoscope has a longer working distance and provides better visual quality and greater comfort for the surgeon [100][54]. Its effectiveness has been validated in neurosurgery and spinal surgery [98,101,102][52][55][56].

Ultrasound biomicroscopy (UBM) is another imaging technology that may be useful for MSRs. It uses high-frequency sound waves (35–100 MHz, higher than conventional ultrasound) to visualize internal tissue structures at high resolution (20 μm axial and 50 μm lateral for a 50 MHz transducer), with a tissue penetration of approximately 4–5 mm [103,104][57][58]. It is mainly used for imaging the anterior segment of the eye [105][59]. Compared with OCT, which is also widely used in ophthalmology, UBM provides better penetration through opaque or cloudy media, but it has lower spatial resolution, requires contact with the eye, and is highly operator-dependent [106][60]. Integrating UBM technology into MSR systems could help surgeons identify tissue features and provide intraoperative image guidance. Table 1 summarizes the key parameters and corresponding references of the imaging techniques mentioned above. Overall, applying these imaging techniques to MSRs has the potential to increase the precision and accuracy of surgical procedures and improve surgical outcomes. Continued research and development in this area is likely to lead to more advanced and effective MSR systems in the future.

Table 1.

MSR-related imaging technologies.

| Modality | Source | Resolution | Depth of Penetration | Main Clinical Application | References |
|---|---|---|---|---|---|
| Magnetic resonance imaging (MRI) | Magnetic fields | ~1 mm | Unlimited | Neurosurgery, plastic surgery, etc. | [71,77][22][28] |
| Computed tomography (CT) | X-rays | 0.5–1 mm | Unlimited | Orthopedics, neurosurgery, etc. | [72,107][23][61] |
| Optical coherence tomography (OCT) | Low-coherence light | 5–20 μm | <3 mm | Ophthalmology | [79,107][32][61] |
| Near-infrared fluorescence (NIRF) | Near-infrared light | Up to sub-millimeter | <10 mm | Plastic surgery | [87,89,90,91][40][42][43][44] |
| Exoscope | Light | Determined by camera parameters | Determined by the imaging modality | Neurosurgery, spinal surgery | [98,99,100,101,102][52][53][54][55][56] |
| Ultrasound biomicroscopy (UBM) | Sound waves | 20–50 μm (for 50 MHz transducer) | <5 mm | Ophthalmology | [103,104][57][58] |

2.3.2. 3D Localization

Traditional microsurgery makes precise navigation and manipulation of surgical tools challenging because of the limited workspace and the top-down microscope view. A crucial obstacle is the 3D localization of surgical instruments and delicate tissues such as the retina, cochlea, nerve fibers, and vascular networks deep inside the skull or spinal cord. In ophthalmic microsurgery, for example, the lack of intraocular depth perception can significantly increase the risk of penetration damage. Addressing this challenge requires high-precision target detection and advanced depth perception techniques [5,10,108][5][62][63].

The 3D localization task in microsurgery can be divided into two aspects: target detection and depth perception. The target detection requires finding the target, such as the tool tip or blood vessels, from different types of images, while the depth perception involves analyzing the 3D position information of the target from the image. This section will provide a detailed introduction to both of these aspects.

- Target detection

Numerous techniques focus on target detection to accurately estimate an instrument's position, thereby enhancing the clinician's perceptual abilities. Early attempts at automatic target detection relied on identifying instrument geometry despite complex changes in instrument appearance [109,110][64][65]. More recently, some methods have leveraged machine learning for rapid and robust target detection and pose estimation [111,112,113,114][66][67][68][69]. Autonomous image segmentation, combined with machine learning algorithms for ophthalmic OCT interpretation, also opens the possibility of fully automated eye disease screening [115,116,117][70][71][72]. Deep learning-based methods have demonstrated strong performance and robustness in 2D instrument pose estimation [118,119][73][74]. Park et al. [120][75] proposed a deep learning algorithm for real-time OCT image segmentation and correction in vision-based robotic needle insertion systems, achieving a segmentation error of 3.6 μm. The algorithm has potential applications in retinal injection and corneal suturing.

An image registration-based pipeline using the symmetric normalization registration method has been proposed to enhance existing image guidance technologies; it rapidly segments relevant temporal bone anatomy from cone-beam CT images without requiring large volumes of training data [121][76]. In addition, several other image segmentation methods have been applied to retinal surgery. For instance, Gaussian mixture models (GMM) [122][77] have been used for tool tip detection, while k-NN classifiers and Hessian filters [123,124,125,126][78][79][80][81], as well as the image projection network (IPN) [127][82], have been used for retinal vessel segmentation.
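Hessian-based vessel filters exploit the fact that a vessel looks like a valley (or ridge) in the image: across the vessel the intensity curvature is large, while along it the curvature is close to zero. The sketch below computes the two Hessian eigenvalues per pixel with NumPy finite differences and a simplified Frangi-style score for dark vessels on a brighter background, as in many fundus images. It is a single-scale illustration with assumed parameters (`beta`, `c`) and no Gaussian pre-smoothing, not the filter used in the cited works.

```python
import numpy as np


def hessian_eigenvalues(img):
    """Per-pixel eigenvalues (l1, l2) of the 2x2 Hessian, ordered so |l1| <= |l2|."""
    gy, gx = np.gradient(img.astype(float))
    gyy, gyx = np.gradient(gy)
    gxy, gxx = np.gradient(gx)
    hxy = 0.5 * (gxy + gyx)  # symmetrise the mixed derivative
    # closed-form eigenvalues of [[gxx, hxy], [hxy, gyy]]
    half_trace = 0.5 * (gxx + gyy)
    root = np.sqrt((0.5 * (gxx - gyy)) ** 2 + hxy ** 2)
    a, b = half_trace - root, half_trace + root
    swap = np.abs(a) > np.abs(b)
    return np.where(swap, b, a), np.where(swap, a, b)


def vesselness_dark(img, beta=0.5, c=None):
    """Simplified single-scale Frangi-style vesselness for dark, line-like structures."""
    l1, l2 = hessian_eigenvalues(img)
    s2 = l1 ** 2 + l2 ** 2  # squared Hessian norm ("structureness")
    if c is None:
        c = 0.5 * float(np.sqrt(s2.max()))
        if c == 0.0:
            c = 1.0  # flat image: the response is zero anyway
    # blob-versus-line ratio (l1/l2)^2, defined as 0 where l2 == 0
    rb2 = np.divide(l1 ** 2, l2 ** 2, out=np.zeros_like(l1), where=l2 != 0)
    v = np.exp(-rb2 / (2.0 * beta ** 2)) * (1.0 - np.exp(-s2 / (2.0 * c ** 2)))
    # a dark vessel has strong positive curvature across it
    return np.where(l2 > 0, v, 0.0)
```

On a synthetic image with a thin dark horizontal band, the response peaks along the band's centre line and is zero on the uniform background; multi-scale versions repeat the computation on Gaussian-smoothed images of several widths.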

- Depth perception based on microscope

Advanced target detection techniques in 3D space have substantially enhanced depth perception and the procedural experience of the operating clinician [128,129][83][84]. For instance, Kim et al. combined deep learning and least squares to predict 3D distances and optimal motion trajectories from a manually assigned 2D target position on the retina, demonstrating the effectiveness of deep learning in sensorimotor problems [130,131][85][86].

Some groups have used the relationship between the needle tip and its shadow to estimate depth information [122,132][77][87]. Koyama et al. [133][88] implemented autonomous coordinated control of the light guide using dynamic regional virtual fixtures generated by vector field inequalities, so that the instrument shadows always remained within the microscope view; the needle tip was then automatically localized on the retina by detecting the instrument and its shadow at a predefined pixel distance. However, the positioning accuracy depends on image quality, which affects how precisely the instrument and its shadow can be segmented. Similarly, Richa et al. used the stereoscopic parallax between the surgical tool and the retinal surface for proximity detection to prevent retinal damage [134][89]. However, this method is of limited use in fine ophthalmic surgery because of the coarse correlation of 5.3 pixels/mm between parallax and depth. Yang et al. used a customized optical tracking system (ASAP) to provide the tool tip position and combined it with a structured-light estimation method to reconstruct the retinal surface and calculate the distance from the tip to the surface, thereby achieving monocular hybrid visual servoing [135,136,137][90][91][92]. Bergeles et al. accounted for the unique optical properties of the eye and introduced a Raxel-based projection model to localize the micro-robot in real time [138,139][93][94]. However, the servo error is still several hundred microns, which is too large for microsurgical tasks.
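The 5.3 pixels/mm figure reported for the parallax approach can be read as a depth sensitivity: a one-pixel change in disparity corresponds to roughly 1/5.3 ≈ 0.19 mm of depth, which illustrates why the method is too coarse for fine intraocular work. The sketch below converts a tool-versus-retina disparity difference into an approximate distance and raises a proximity flag. The linear disparity–depth model, the sign convention (objects closer to the cameras have larger disparity), and the 0.5 mm margin are illustrative assumptions.

```python
PIXELS_PER_MM = 5.3  # parallax-to-depth correlation quoted in the text


def tool_to_retina_distance_mm(tool_disparity_px, retina_disparity_px,
                               pixels_per_mm=PIXELS_PER_MM):
    """Approximate tool-to-surface distance from a stereo disparity difference,
    assuming a linear disparity/depth relation near the working distance and
    that the tool (closer to the cameras) has the larger disparity."""
    return (tool_disparity_px - retina_disparity_px) / pixels_per_mm


def proximity_warning(tool_disparity_px, retina_disparity_px, margin_mm=0.5):
    """True if the estimated distance is below a hypothetical safety margin."""
    return tool_to_retina_distance_mm(tool_disparity_px, retina_disparity_px) < margin_mm
```

Because disparities are measured in whole or fractional pixels, any error of a single pixel already shifts the estimate by about 0.19 mm, an order of magnitude more than the tens of microns relevant in retinal surgery.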

- Depth perception based on other imaging methods

Some researchers are exploring ways to improve the positioning accuracy of the tool tip by using various imaging techniques. Table 2 summarizes the main depth perception methods and their corresponding references. Bruns et al. [140,141][95][96] proposed an image guidance system that integrates an optical tracking system with intraoperative CT scanning, enabling real-time, accurate positioning of a cochlear implant insertion tool with a mean tool alignment accuracy of 0.31 mm. Sutherland et al. [4,37][4][29] proposed the NeuroArm system, which performs stereotactic orientation and imaging within the magnet bore and microsurgery outside the magnet. By integrating preoperative imaging data with intraoperatively acquired MRI scans, the robotic system achieves precise co-localization within the imaging space. After the images are updated at the workstation according to spatial orientation, with the tool overlaid, the surgical impact on both the lesion and the brain can be visualized. Clarke et al. proposed a 4-mm ultrasound transducer microarray for imaging and robotic guidance in retinal microsurgery [142][97], capable of resolving retinal structures as small as 2 μm from a distance of 100 μm. Compared with other imaging methods, using high-resolution OCT to localize the tool tip in 3D is potentially even more advantageous. Some groups analyze instrument–tissue interaction directly in OCT image space, eliminating the complex multimodal calibration required by traditional tracking methods [143,144][98][99].

Table 2.

Different depth information perception methods.

| Procedure | Method | Precision | Note | References |
|---|---|---|---|---|
| Subretinal surgery | Monocular microscope, structured light, and customized ASAP | 69 ± 36 μm | Hybrid vision and position control | [135,136,137][90][91][92] |
| | Stereomicroscope and 3D reconstruction | 150 μm (translational errors of the tool) | Hand-eye calibration with markers and 3D reconstruction of the retina | [129,148][84][104] |
| | Stereomicroscopy and parallax-based depth estimation | 5.3 pixels/mm | Inability to perform fine intraocular manipulation | [134][89] |
| | Depth perception based on deep learning | 137 μm (accuracy of physical experiment) | Predicts waypoint to goal in 3D given 2D starting point | [130,131][85][86] |
| | Automatic localization based on tool shadows | 0.34 ± 0.06 mm (average height for autonomous positioning) | Dependent on image quality | [122,132,133][77][87][88] |
| | Raxel-based projection model | 314 ± 194 μm | Positioning dependent on unique optical properties | [138,139][93][94] |
| | Combining geometric information of the needle tip and OCT | 4.7 μm (average distance error) | The needle has little deformation | [29][100] |
| | OCT, image segmentation, and reconstruction | 99.2% (confidence of localizing the needle) | The needle has large deformation | [145][101] |
| Epiretinal surgery | Estimation of calibration parameters for OCT cameras | 9.2 μm (mean calibration error) | Marker-less hand-eye calibration and needle body segmentation | [146][102] |
| | Spotlight-based 3D instrument guidance | 0.013 mm (average tracking error) | For positioning the needle tip when it is beyond the OCT scanning area | [147][103] |
| Cochlear implant | Optical tracker and stereo cameras | 0.31 mm (mean tool alignment accuracy) | Image guidance paired with an optical tracking system | [140,141,149][95][96][105] |
| Glioma resection | Pre- and intraoperative image alignment | / | Stereotactic orientation and imaging within the magnet bore and microsurgery outside the magnet | [4,37][4][29] |

In epiretinal surgery, Zhou et al. used microscope-integrated OCT to segment the needle body and build its geometric model, enabling marker-less online hand-eye calibration of the needle with a mean calibration error of 9.2 μm [146][102]. To handle cases where the needle tip extends beyond the OCT scanning area, the same group proposed a spotlight projection model to localize the needle, enabling 3D instrument guidance for autonomous tasks in robot-assisted retinal surgery [147][103]. For subretinal surgery, the team used the reconstructed needle model above the retina to predict the subretinal position of the needle when needle deformation was minimal [29][100]. When the deformation could not be ignored, they proposed a deep learning-based method to detect and localize the subretinal position of the needle tip and ultimately reconstruct the deformed needle tip model for subretinal injection [145][101].
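Hand-eye calibration between a robot and an OCT volume ultimately reduces to estimating a rigid transform between two coordinate frames. When corresponding 3D points are available in both frames (for example, needle positions known from robot kinematics and the same points segmented in OCT), a standard least-squares solution is the SVD-based Kabsch method, sketched below with NumPy. How correspondences are obtained from the segmented needle body is not described here, so the point pairs in this sketch are assumed to be given; this is a generic registration step, not Zhou et al.'s published pipeline.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~= src @ R.T + t (Kabsch).

    src, dst: (N, 3) arrays of corresponding points, N >= 3, not all collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    h = (src - mu_s).T @ (dst - mu_d)  # 3x3 cross-covariance of centred points
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # guard against a reflection solution
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = mu_d - r @ mu_s
    return r, t


def mean_registration_error(src, dst, r, t):
    """Mean Euclidean distance between transformed src points and dst points."""
    residual = np.asarray(src, dtype=float) @ r.T + t - np.asarray(dst, dtype=float)
    return float(np.mean(np.linalg.norm(residual, axis=1)))
```

With noise-free correspondences the transform is recovered exactly; with real segmentation noise, `mean_registration_error` gives a figure comparable in spirit to a mean calibration error.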

2.3.3. Force Sensing

Touch is one of the most important human senses. The temporal resolution of human touch is about 5 ms, and the spatial resolution at the fingertips is as fine as 0.5 mm [150][106]; thus, humans can acquire a wealth of information through touch. However, haptic sensing is lacking in most commercially available surgical robot systems (e.g., the da Vinci Surgical System). Force sensing technology can provide effective assistance in precise and flexible microsurgical operations, including determining suture tension [151][107], assessing tissue consistency in tumor resection [152][108], and peeling membranes with appropriate force in vitreoretinal surgery [153][109]. In current research, MSR systems mainly employ two types of force sensing technology: sensors based on electrical strain gauges and sensors based on optical fibers.

-

Electrical strain gauge-based force sensors

Electrical strain gauge sensors measure force by detecting small changes in electrical resistance caused by the deformation of a material under stress, offering advantages such as a wide measurement range and good stability. Some MSR systems attach commercial strain gauge-based sensors to their end-effectors to enable force sensing during surgical procedures [53,152,154][9][108][110].
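As a rough illustration of the principle (not the signal chain of any particular commercial sensor), the relative resistance change of a metal-foil gauge is approximately proportional to strain, ΔR/R ≈ GF·ε, and in a quarter-bridge Wheatstone circuit the output voltage for small strains is approximately V_out ≈ V_ex·GF·ε/4. The sketch below assumes a gauge factor of 2.0 and a linear strain-to-force calibration constant fitted from known loads; the calibration data are hypothetical.

```python
def strain_from_quarter_bridge(v_out, v_excitation, gauge_factor=2.0):
    """Small-strain approximation for a quarter-bridge: V_out / V_ex ~= GF * strain / 4."""
    return 4.0 * v_out / (v_excitation * gauge_factor)


def fit_calibration(strains, known_forces):
    """Least-squares slope through the origin, assuming force ~= k * strain."""
    num = sum(s * f for s, f in zip(strains, known_forces))
    den = sum(s * s for s in strains)
    return num / den


def force_from_strain(strain, k):
    return k * strain


# Hypothetical calibration run: three known loads (N) and the strains they produced.
k = fit_calibration([1e-4, 2e-4, 3e-4], [0.5, 1.0, 1.5])
strain = strain_from_quarter_bridge(v_out=0.0005, v_excitation=5.0)
print(force_from_strain(strain, k))  # approximately 1.0 N for this made-up data
```

Real multi-axis sensors such as the one described next combine several gauges through a calibration matrix rather than a single scalar constant.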

In the NeuroArm robot system, for example, Sutherland et al. equipped each manipulator with two titanium 6-DOF Nano17 force/torque sensors (ATI Industrial Automation Inc., Apex, NC, USA) to measure tool–tissue interaction forces during neurosurgical procedures [152][108]. These sensors were attached to the robotic tool holders; each had a resolution of 0.149 g-force, a maximum threshold of 8 N, and a torque overload of ±1.0 Nm. Because of their size, such sensors are difficult to install near the tip of the surgical tool, which makes the measured data susceptible to interference from external forces. In retinal surgery, for example, friction between the trocar and the tool makes it difficult for an externally mounted sensor to reflect the true force between the instrument tip and the target tissue [5].

-

Optical fiber-based force sensors

Optical fiber-based force sensors, on the other hand, measure force by detecting changes in the properties of light (such as wavelength and intensity) caused by external strain. Most fiber optic force sensors are very slim, highly accurate, biocompatible, and sterilizable, and can be mounted distally on surgical tools to provide more accurate force information [155][111]. According to their sensing principle, optical fiber sensors fall into three categories: fiber Bragg grating (FBG) sensors, interferometer-based optical fiber sensors, and intensity-modulated optical fiber sensors [156][112]. Compared with the other types, FBG sensors offer higher precision, faster response, and convenient multiplexing [156,157][112][113], which has led to their widespread adoption in MSR systems, especially in vitreoretinal surgery robots.
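The FBG principle can be summarized by the Bragg condition λ_B = 2·n_eff·Λ, where n_eff is the effective refractive index of the fiber core and Λ the grating period. Axial strain ε and temperature change ΔT shift the reflected wavelength approximately as Δλ_B/λ_B ≈ (1 − p_e)·ε + (α + ξ)·ΔT, with p_e ≈ 0.22 the effective photo-elastic coefficient of silica fiber. The sketch below converts a wavelength shift into strain and, as one commonly used (here assumed) approach, removes the thermal term by subtracting the shift of a strain-free reference grating at the same temperature; all numbers are illustrative.

```python
def bragg_wavelength(n_eff, grating_period_nm):
    """Bragg condition: lambda_B = 2 * n_eff * Lambda."""
    return 2.0 * n_eff * grating_period_nm


def fbg_strain(shift_nm, lambda_b_nm, ref_shift_nm=0.0, p_e=0.22):
    """Axial strain from a Bragg wavelength shift.

    ref_shift_nm is the shift of a strain-free reference FBG; subtracting it
    cancels the temperature term, assuming both gratings share the same
    thermal sensitivity.
    """
    mechanical_shift = shift_nm - ref_shift_nm
    return mechanical_shift / (lambda_b_nm * (1.0 - p_e))


lam = bragg_wavelength(1.447, 535.6)  # roughly 1550 nm
eps = fbg_strain(shift_nm=1.259, lambda_b_nm=1550.0, ref_shift_nm=0.05)
print(f"{eps * 1e6:.0f} microstrain")
```

At 1550 nm this corresponds to a sensitivity of about 1.2 pm per microstrain, which is why FBG interrogators need picometer-level wavelength resolution to resolve the small forces involved in retinal surgery.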

2.4. Human–Machine Interaction (HMI)

2.4.1. Force Feedback

The integration of advanced force sensing and feedback techniques into robotic surgery can help surgeons perceive the surgical environment and improve their motion accuracy, thereby improving the outcome of the procedure [155][111]. Force sensing techniques in MSR systems have been previously discussed, and this section will focus on the force feedback techniques that transmit the force information to the surgeon.

Compared with the widely used visual display technology, the application of force feedback in surgical robots is relatively immature [151][107]. Enayati et al. point out that the bidirectional nature of haptic perception is a major obstacle to its widespread application [167][114]. Bidirectionality means that mechanical energy is exchanged between the environment and the sensory organ; thus, inappropriate force feedback may interfere with the surgeon’s intended movement. However, this property also opens new possibilities for HMI. For example, combining force feedback with different perception techniques (e.g., force or visual perception) enables the virtual fixture function, which can reduce unnecessary contact forces or guide the surgeon’s movements [4,162][4][115]; it is described further in a subsequent section.

Force feedback in MSR systems can generally be divided into two categories: direct force feedback and sensory substitution-based feedback.

-

Direct force feedback

For the direct force feedback method, the interaction forces between the tool and the tissue are proportionally fed back to the surgeon through haptic devices, so as to recreate the tactile sensations of the surgical procedure.

There are many MSR systems that use commercially available haptic devices to achieve direct force feedback. The force feedback in the second generation of the NeuroArm neurosurgical system is enabled by the commercially available Omega 7 haptic device (Force Dimension, Switzerland), which uses a parallel kinematic design and can provide a workspace of ∅ 160 × 110 mm and translational/grip force feedback of up to 12 N/8 N [168][116]. With the haptic device, the NeuroArm system can achieve force scaling, virtual fixture, and haptic warning functions [4,39][4][117]. The force scaling function can help the surgeon to clearly perceive small forces and recognize the consistency of the tissue during the operation; the virtual fixture function can guide the surgical tool or prevent it from entering the dangerous zone that is defined by the MRI information; and the haptic warning function will alert the surgeon by vibrating when force exceeds the threshold to avoid tissue damage.
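A minimal sketch of how force scaling and a threshold-based haptic warning could be combined in a direct force feedback loop is shown below. The scale factor and warning threshold are hypothetical; only the 12 N saturation value is taken from the Omega 7 translational force limit quoted above, and the code is not the NeuroArm implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class FeedbackConfig:
    force_scale: float = 10.0       # hypothetical amplification of tool-tissue force
    device_max_force: float = 12.0  # N, translational limit of the haptic device
    warning_threshold: float = 0.5  # N at the tool tip (hypothetical)


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def haptic_feedback(tissue_force, cfg):
    """Return (force to render on the master device, vibration-warning flag)."""
    scaled = [cfg.force_scale * f for f in tissue_force]
    magnitude = norm(scaled)
    if magnitude > cfg.device_max_force:
        # Saturate while preserving direction so the device is never over-driven.
        scaled = [f * cfg.device_max_force / magnitude for f in scaled]
    warn = norm(tissue_force) > cfg.warning_threshold
    return tuple(scaled), warn


print(haptic_feedback((0.1, 0.0, 0.0), FeedbackConfig()))  # ((1.0, 0.0, 0.0), False)
print(haptic_feedback((2.0, 0.0, 0.0), FeedbackConfig()))  # ((12.0, 0.0, 0.0), True)
```

Scaling lets the surgeon feel forces that would otherwise be below the threshold of human perception, while the saturation step keeps the rendered force within what the device can safely produce.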

Commonly used haptic devices also include Sigma-7 (Force Dimension, Nyon, Switzerland), HD2 (Quanser, Markham, ON, Canada), and PHANToM Premium 3.0 (Geomagic, Research Triangle Park, NC, USA). Zareinia et al. conducted a comparative analysis of these systems and found that PHANToM Premium 3.0, which has a kinematic structure similar to the human arm, exhibited the best overall performance [169][118].

Meanwhile, some MSR systems employ custom-designed haptic devices to achieve the direct force feedback. Hoshyarmanesh et al. designed a microsurgery-specific haptic device [170][119], which features a 3-DOF active human arm-like articulated structure, a 4-DOF passive microsurgery-specific end-effector (3-DOF gimbal mechanism, 1-DOF exchangeable surgical toolset), and 3 supplementary DOF. The haptic device provides 0.92–1.46 mm positioning accuracy, 0.2 N force feedback resolution, and up to 15 N allowable force.

Gijbels et al. [3] developed a teleoperated robot for retinal surgery with a novel 4-DOF RCM structure slave arm and a 4-DOF spherical master arm. All DOFs of the master arm are active, so that the functions of active constraints and scaled force feedback can be implemented in the system to assist surgeons during retinal surgery.

Based on the cooperative MSR “Steady-hand Eye Robot (SHER)”, Balicki et al. [171][120] implemented force scaling and velocity limiting functions. With velocity limiting, the maximum allowed velocity decreases as the tool–tissue interaction force increases, minimizing the risk of retinal damage during membrane peeling. Uneri et al. [6] developed a new generation of SHER with a mechanical RCM structure and a larger workspace; this system used real-time force information to gently guide the surgeon’s tool movements toward the direction of lower applied force on the tissue. Building on this work, He et al. [162][115] further optimized the system and implemented an adaptive RCM constraint, reducing the force exerted by the tool on the sclera during surgery.
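The force-dependent velocity limit can be sketched as follows. The linear law, the force range, and the speed values are assumptions chosen for illustration; Balicki et al. [171][120] do not necessarily use this exact function.

```python
import math


def velocity_limit(force_n, v_free=1.0, v_min=0.05, f_max=0.1):
    """Hypothetical linear law: the speed limit (mm/s) shrinks from v_free at
    zero force to v_min once the tool-tissue force reaches f_max (N)."""
    ratio = min(abs(force_n) / f_max, 1.0)
    return v_free - (v_free - v_min) * ratio


def limit_velocity(v_cmd, force_n):
    """Scale the commanded velocity vector down if it exceeds the current limit."""
    limit = velocity_limit(force_n)
    speed = math.sqrt(sum(v * v for v in v_cmd))
    if speed <= limit:
        return tuple(v_cmd)
    return tuple(v * limit / speed for v in v_cmd)


print(limit_velocity((2.0, 0.0, 0.0), force_n=0.05))  # capped at 0.525 mm/s
```

Keeping a small nonzero floor (v_min) means the surgeon can still retract the tool when the force is high, instead of the robot freezing in place.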

-

Sensory substitution-based force feedback

For sensory substitution-based force feedback, the force information is conveyed indirectly through other types of feedback, such as vibration, sound, or overlaid visual cues. Although this approach is less intuitive than direct force feedback, it is stable and does not interfere with the surgeon’s movements [172][121].

Many researchers have applied force-to-auditory sensory substitution to robotic systems, in which the system emits a sound when the interaction force exceeds a threshold [6,70,173][6][21][122] or changes the sound according to the magnitude of the interaction force [163,171,174][120][123][124]. Gonenc et al. found that incorporating force-to-auditory feedback can effectively reduce the maximum force applied during membrane peeling [174][124].
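Both variants described above, a threshold alarm and a tone that changes with force magnitude, can be captured by a simple mapping from force to pitch. The thresholds and pitch range below are hypothetical, and returning None stands for silence; a real system would pass the result to an audio synthesizer.

```python
def force_to_pitch(force_n, f_on=0.01, f_full=0.05, pitch_low=200.0, pitch_high=2000.0):
    """Map tool-tissue force (N) to a tone frequency (Hz).

    Below f_on the channel is silent (None); between f_on and f_full the pitch
    rises linearly; above f_full it stays at pitch_high, acting as an alarm.
    """
    if force_n < f_on:
        return None
    ratio = min((force_n - f_on) / (f_full - f_on), 1.0)
    return pitch_low + ratio * (pitch_high - pitch_low)


for f in (0.0, 0.01, 0.03, 0.2):
    print(f, force_to_pitch(f))
```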

2.4.2. Improved Control Performance

In microsurgery, the precision and stability of the surgeon’s control of the surgical instruments are critical to the outcome of the procedure. Riviere and Jensen observed that the RMS amplitude of tremor during manual instrument manipulation by surgeons is approximately 182 μm [23][125]. This level of tremor presents a significant challenge for the precise execution of microsurgery, which requires an accuracy of up to 25 μm [1,30][1][126]. Therefore, MSRs typically provide functions to enhance the control performance of surgeons on surgical instruments, including tremor filtering, motion scaling, and virtual fixtures.

-

Tremor filtering

Tremor filtering is a common function in most MSRs that helps to minimize unintended hand tremor of surgeons during surgical procedures. The basic principle of tremor filtering is to use various methods to filter out the high frequency signal caused by tremor in control commands. For teleoperated robots, the tremor filtering function can be achieved by applying a low-pass filter between the master module and the slave module. The NeuroArm robot, for example, processes the command signals through a low-pass filter, and surgeons can adjust its cut-off frequency according to their hand tremor for better tremor filtering [4]. For co-manipulated robots, the surgeon and the robot jointly control the movement of the surgical instruments, and the surgeon’s hand tremor can be effectively damped by the stiff structure of the robot arm [162][115]. Gijbels et al. designed a back-drivable co-manipulated robot, which allows the surgeon to control the manipulator without the need for a force sensor on the mechanism [179][127]. The tremor filtering function is achieved by adding virtual damping to the manipulator, and the surgeon can dynamically adjust the damping of the manipulator with a pedal for different surgical procedures. To balance control accuracy and flexibility, some researchers have proposed variable admittance-based control methods, where the system adjusts the admittance parameters according to the magnitude of the applied force [180][128] or the distance to the target [162][115] to achieve a better trade-off between compliance and accuracy. For handheld robots, the tremor filtering function is achieved primarily through a compensation algorithm, which drives the actuators between the tool tip and the surgeon’s hand to counteract unwanted tremors.
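For the teleoperated case, the low-pass filter between master and slave can be as simple as a first-order (exponential smoothing) filter per command axis. The sketch below is a generic discretized RC filter, not the NeuroArm filter; the 2 Hz cutoff and 1 kHz command rate are assumptions, chosen because physiological hand tremor is commonly reported in roughly the 8–12 Hz band while intentional motion is much slower. `set_cutoff` mirrors the idea of letting the surgeon adjust the cutoff frequency.

```python
import math


class TremorFilter:
    """First-order low-pass (exponential smoothing) applied to one command axis."""

    def __init__(self, cutoff_hz, sample_rate_hz):
        self.state = None
        self.set_cutoff(cutoff_hz, sample_rate_hz)

    def set_cutoff(self, cutoff_hz, sample_rate_hz):
        # Discretized RC filter: alpha = dt / (RC + dt), with RC = 1 / (2*pi*fc).
        dt = 1.0 / sample_rate_hz
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.alpha = dt / (rc + dt)

    def step(self, command):
        if self.state is None:
            self.state = command
        else:
            self.state += self.alpha * (command - self.state)
        return self.state


def steady_state_gain(freq_hz, cutoff_hz=2.0, fs=1000.0, seconds=10.0):
    """Peak output amplitude for a unit sine input, measured after transients."""
    filt = TremorFilter(cutoff_hz, fs)
    n = int(seconds * fs)
    out = [filt.step(math.sin(2.0 * math.pi * freq_hz * k / fs)) for k in range(n)]
    return max(abs(v) for v in out[n // 2:])


print(round(steady_state_gain(0.5), 2))   # slow intentional motion largely preserved
print(round(steady_state_gain(10.0), 2))  # 10 Hz tremor strongly attenuated
```

The trade-off is latency: a lower cutoff removes more tremor but makes the slave lag further behind the surgeon's hand, which is one reason an adjustable cutoff is useful.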

-

Motion scaling

Motion scaling is another function for control performance improvement, which scales down the surgeon’s hand movements by a certain level to relatively improve the precision. This function can be easily implemented into the teleoperated robots by processing the command signals before they are sent to the slave module [3,4,181][3][4][129]. Some handheld robots also have motion scaling functionality, but due to the small workspace of their manipulator, this feature can only be applied within a limited operational space [182][130]. Additionally, the co-manipulated robots do not support the motion scaling function due to the fixed position of the tool tip relative to the operator’s hand. The use of the motion scaling function can improve surgical accuracy, but it also reduces the range of motion of the surgical instrument, making it difficult to maneuver the instrument to reach distant targets during surgery. Zhang et al. proposed a motion scaling framework that combines preoperative knowledge with intraoperative information to dynamically adjust the scaling ratio, using a fuzzy Bayesian network to analyze intraoperative visual and kinematic information to achieve situation awareness [183][131]. The same group of researchers designed a teleoperated MSR with a hybrid control interface that allows the operator to switch between position mapping mode (with motion scaling) and velocity mapping mode via buttons on the master controller [49][132]. In velocity mapping mode, the MSR system controls the speed of the surgical instrument based on the force applied by the operator to the master controller, which facilitates its large-distance motion.
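The hybrid interface described above can be sketched as a mapper with two modes: in position mode, master displacements are multiplied by a scale factor smaller than one; in velocity mode, the force the operator applies to the master sets the instrument speed. The class name, gains, and control period are hypothetical and are not taken from [49][132].

```python
from enum import Enum


class Mode(Enum):
    POSITION = "position"  # scaled position mapping for fine work
    VELOCITY = "velocity"  # rate control for large-distance moves


class HybridMapper:
    def __init__(self, scale=0.1, velocity_gain=2.0, dt=0.001):
        self.mode = Mode.POSITION
        self.scale = scale                  # 10:1 motion down-scaling (hypothetical)
        self.velocity_gain = velocity_gain  # mm/s per N of applied force (hypothetical)
        self.dt = dt                        # control period in seconds

    def toggle(self):
        """Called when the operator presses the mode button on the master."""
        self.mode = Mode.VELOCITY if self.mode is Mode.POSITION else Mode.POSITION

    def slave_increment(self, master_delta_mm, master_force_n):
        """Instrument displacement (mm) to command during this control period."""
        if self.mode is Mode.POSITION:
            return [self.scale * d for d in master_delta_mm]
        return [self.velocity_gain * f * self.dt for f in master_force_n]


m = HybridMapper()
print(m.slave_increment([1.0, 0.0, 0.0], [0.0, 0.0, 0.0]))  # 1 mm hand motion -> 0.1 mm
m.toggle()
print(m.slave_increment([0.0, 0.0, 0.0], [0.5, 0.0, 0.0]))  # 0.5 N push -> 1 mm/s
```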

-

Virtual fixture

In addition to the above features, some MSR systems can impose customized constraints through the virtual fixture function to improve the control performance and safety of robotic surgery. Virtual fixtures rely on perceptual information, such as vision, force, position, and orientation, to create boundaries or guidance paths for surgical instruments in intricate scenarios, especially micromanipulation and fine manipulation in micron-sized workspaces. Their main advantages include: fixture characteristics and dynamics (such as the stiffness, roughness, and viscosity of the virtual environment) can be easily defined and modified in software; they have no mass or mechanical constraints; they require no maintenance; and they can be easily developed, customized, and adapted to the surgical corridor of a specific patient [4,184][4][133].
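Two elementary virtual fixtures can be sketched in a few lines: a guidance fixture, implemented here as an anisotropic admittance that lets the tool move freely along a preferred direction while attenuating motion perpendicular to it, and a forbidden-region fixture that removes any part of a motion step that would cross a boundary plane. This is a generic formulation under assumed parameters (admittance gain, attenuation factor, a planar boundary), not the method of the systems cited in this section.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def guidance_fixture(user_force, direction, admittance=1.0, k_perp=0.1):
    """Velocity command from the surgeon's applied force.

    The component along `direction` passes with full admittance; the
    perpendicular component is scaled by k_perp (0 = hard fixture, 1 = none).
    """
    d = unit(direction)
    along = dot(user_force, d)
    f_pref = [along * x for x in d]
    f_perp = [f - p for f, p in zip(user_force, f_pref)]
    return [admittance * (p + k_perp * q) for p, q in zip(f_pref, f_perp)]


def forbidden_region(tip, step, plane_point, plane_normal):
    """Apply a motion step, but project the tip back onto the boundary plane if
    the step would carry it to the forbidden side (normal points to the safe side)."""
    n = unit(plane_normal)
    nxt = [t + s for t, s in zip(tip, step)]
    depth = dot([a - b for a, b in zip(nxt, plane_point)], n)
    if depth < 0.0:
        nxt = [x - depth * c for x, c in zip(nxt, n)]
    return nxt


print(guidance_fixture([1.0, 1.0, 0.0], [1.0, 0.0, 0.0]))  # off-axis push damped
print(forbidden_region([0.0, 0.0, 1.0], [0.0, 0.0, -2.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]))
```

Because both fixtures are just functions of sensed quantities, their "stiffness" (k_perp) or boundary can be changed at run time, which reflects the software-defined flexibility listed above.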

Virtual fixtures, when designed around the operator’s objectives, can maximize surgical success and minimize incidental damage. For instance, in ophthalmology, virtual fixtures significantly reduce positioning error and the force applied to tissue in intraocular vein tracking and membrane peeling experiments [185][134]. Dewan et al. [186][135] used a calibrated stereo imaging system and surface reconstruction to create virtual fixtures for surface following, tool alignment, targeting, and insertion/extraction in an eye phantom. Similarly, Yang et al. [187][136] developed an eye model grounded in anatomical parameters, dividing the dynamic virtual constraint area to address cataracts caused by eyeball rotation and establishing unique force feedback algorithms for various surgical areas. This method does not require physical force sensors and meets the actual surgical requirements, reducing the complexity and cost of the surgical robot.

Furthermore, several groups have employed structured light and instrument tip tracking to maintain a constant standoff distance [136,147][91][103]. Using an OCT-guided system, Balicki et al. [188][137] precisely located anatomical features and maintained a constant distance offset from the retina to prevent potential collisions. More recently, the PRECEYES surgical system has incorporated similar fixed intraocular boundaries [189][138]. Kang et al. [190][139] have also introduced an OCT-guided surgical instrument for accurate subretinal injections, which features a dynamic depth positioning function that continually guides the syringe needle to the desired depth.
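Maintaining a constant standoff from the retina can be viewed as a one-dimensional regulation problem: a distance measurement along the tool axis (for example, from an OCT A-scan) is compared with the desired offset, and a saturated proportional law sets the axial velocity. The gain, speed limit, and distances below are hypothetical, the plant is idealized, and the cited systems may use different sensing and control.

```python
def standoff_velocity(measured_um, target_um, gain=2.0, v_max_um_s=500.0):
    """Axial velocity (um/s); positive advances the tool toward the retina."""
    v = gain * (measured_um - target_um)
    return max(-v_max_um_s, min(v_max_um_s, v))


def simulate(start_um, target_um, dt=0.01, steps=300):
    """Idealized plant: the tool follows the velocity command exactly."""
    distance, history = start_um, []
    for _ in range(steps):
        distance -= standoff_velocity(distance, target_um) * dt
        history.append(distance)
    return history


trace = simulate(start_um=800.0, target_um=200.0)
print(round(trace[-1], 1), round(min(trace), 1))  # settles near 200 um without overshoot
```

If the retina moves closer (for example, because of eye motion), the measured distance drops below the target and the same law commands a retraction, which is what makes this a protective boundary rather than a fixed one.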

2.4.3. Extended Reality (XR) Environment

In the field of HMI, the efficiency and effectiveness of user interactions with digital interfaces crucially shape the overall user experience. Extended Reality (XR), which encompasses virtual reality (VR), augmented reality (AR), and mixed reality (MR) [193,194][140][141], represents the frontier of HMI with its potential to radically transform how we interact with digital content. To achieve a high-quality immersive experience in the XR environment, it is crucial to ensure that users have precise, natural, and intuitive control over virtual objects, and that virtual objects realistically reflect the physical world [195,196,197][142][143][144]. There are three key challenges, namely high-fidelity feedback, real-time processing, and multimodal registration [198,199][145][146], that need to be addressed to minimize interaction errors and ensure that users can accurately manipulate virtual objects in a manner that mirrors the real world. This section will describe the 3D reconstruction techniques that provide high-fidelity surgical information for the XR environment, and present the current state of research on the XR environment for preoperative training and intraoperative guidance.

-

High-fidelity 3D reconstruction

As an integral part of visualization and perceptual interaction in XR, 3D reconstruction is increasingly used to create precise models of difficult-to-access objects or environments for preoperative planning [200,201,202][147][148][149], further enhancing the interaction precision and fidelity in the XR environment. However, its limitations such as time consumption, cost, and the effect of imaging conditions on model accuracy present challenges for microsurgery.

Combined with 3D reconstruction technologies, the visualization of anatomical structures using XR can significantly improve the accuracy and intuitive presentation in microsurgery. Stoyanov et al. [203][150] introduced motion-compensated visualization to ensure perceptual fidelity of AR and emphasized the need for real-time tissue deformation recovery and modeling, along with incorporating human perceptual factors in surgical displays. Probst et al. [129][84] implemented automatic 3D reconstruction and registration of the retina in ex vivo pig-eyes based on stereo camera calibration and robotic sensors. Zhou et al. segmented the geometric data model of the needle body and reconstructed the retinal model with a point cloud using microscope-integrated intraoperative OCT, though it lacked details like retinal layer segmentation and blood flow information [29,145,146][100][101][102].
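For a calibrated and rectified stereo pair, the depth of a point follows from its disparity d = u_L − u_R as Z = f·B/d, where f is the focal length in pixels and B the baseline; X and Y then follow from the pinhole model. The sketch below assumes rectified images, square pixels, and a shared principal point (c_x, c_y); it illustrates the geometry only and is not the pipeline of Probst et al.

```python
def triangulate_rectified(u_left, v_left, u_right, focal_px, baseline_mm, cx, cy):
    """Return (X, Y, Z) in mm in the left-camera frame for a rectified stereo pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    z = focal_px * baseline_mm / disparity
    x = (u_left - cx) * z / focal_px
    y = (v_left - cy) * z / focal_px
    return x, y, z


print(triangulate_rectified(520.0, 400.0, 470.0, focal_px=1000.0, baseline_mm=5.0,
                            cx=500.0, cy=400.0))  # (2.0, 0.0, 100.0)
```

With these hypothetical values, a one-pixel disparity error changes Z by roughly Z²/(f·B) = 100²/(1000 × 5) = 2 mm, which illustrates why imaging conditions strongly affect reconstruction accuracy.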

Some researchers have reconstructed 3D retinal surgical models using optical coherence tomography angiography (OCTA), which provides more blood flow information, for retinal angiography or retinal layer segmentation. These models can be used to diagnose the health of the intraocular retinal vasculature based on the foveal avascular zone, retinal vessel density, and retinal thickness [204,205][151][152]. Li et al. [127][82] presented an image projection network that accomplishes 3D-to-2D image segmentation in OCTA images for retinal vessel segmentation and foveal avascular zone segmentation.
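The image projection network of Li et al. learns how to collapse the depth dimension of an OCTA volume. A non-learned baseline that conveys the same 3D-to-2D idea is a slab projection, which takes the maximum or mean of each A-scan within a chosen depth range. The sketch below assumes a volume stored as volume[y][x] = list of depth samples and hypothetical slab limits; it is not the network itself.

```python
def en_face_projection(volume, z_start, z_end, mode="max"):
    """Collapse each A-scan volume[y][x] over depth indices [z_start, z_end)."""
    reducers = {
        "max": max,
        "mean": lambda slab: sum(slab) / len(slab),
    }
    if mode not in reducers:
        raise ValueError(f"unknown mode: {mode}")
    reducer = reducers[mode]
    return [[reducer(a_scan[z_start:z_end]) for a_scan in row] for row in volume]


# One row with two A-scans of three depth samples each (synthetic values).
volume = [[[0.0, 5.0, 1.0], [2.0, 2.0, 2.0]]]
print(en_face_projection(volume, 0, 3))          # [[5.0, 2.0]]
print(en_face_projection(volume, 0, 3, "mean"))  # [[2.0, 2.0]]
```

Choosing the slab limits by hand is exactly the step a learned projection tries to avoid, since retinal layers vary in depth across the image.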

-

XR for preoperative training

The use of XR platforms for preoperative training has become increasingly popular in microsurgical procedures, with several platforms available, such as Eyesi (VRmagic Holding AG, Mannheim, Germany) [206,207][153][154], PhacoVision (Melerit Medical, Linköping, Sweden) [208,209][155][156], and MicrovisTouch (ImmersiveTouch Inc, Chicago, IL, USA) [210,211][157][158]. These platforms have proven effective in reducing the learning curve for surgical teams and saving time and costs in surgeries [203,204,205][150][151][152]. Additionally, these platforms are also widely used in clinical training, diagnosis, and treatment/therapy [212,213][159][160]. However, most of the platforms primarily utilize AR to incorporate comprehensive intraocular tissue information for preoperative training, but their use during intraoperative procedures remains limited.

In addition, some research teams have developed XR environments for preoperative training that accompany specific surgical robots. For instance, to improve efficiency and safety in preretinal membrane peeling simulation, Francone et al. [153][109] developed a haptic-enabled VR simulator using a surgical cockpit equipped with two multi-finger haptic devices. This simulator demonstrated reduced task completion time, improved tool tip path trajectory, decreased tool-retina collision force, and minimized retinal damage through haptic feedback. Sutherland et al. [214][161] have also developed a VR simulator for the NeuroArm system, and a study indicated that gamers adapted quickly to the simulator, potentially attributed to enhanced visual attention and spatial distribution skills acquired from video game play. Consequently, visuospatial performance has emerged as a crucial design criterion for microsurgical visualization [215][162].

-

XR for intraoperative guidance

The XR environment for intraoperative guidance requires timely feedback of surgical information to the surgeon, which places high demands on the efficiency of data processing and rendering in the MSR system. Many teams anticipate that intuitive, real-time presentation of intraocular tissue information using OCT could address this issue in ophthalmic surgery. Seider et al. [216][163] used a 4D OCT system to generate a 3D surgical model with real-time surgical data, projected into the surgical telescope as B-scan and stereoscopic OCT volume for high-detail, near-real-time volumetric imaging during macular surgery. However, issues such as the high cost, limited scanning range, and demanding real-time performance requirements of OCT remain to be tackled. Several teams are working on these challenges. Sommersperger et al. [217][164] utilized 4D OCT to achieve real-time estimation of tool and layer spacing, although such systems are not readily accessible.

Furthermore, many research groups hope to integrate OCT imaging with XR for real-time intraoperative ophthalmic surgery in microsurgical workflows to compensate for intraocular information perception limitations. In AR-based surgical navigation for deep anterior lamellar keratoplasty (DALK), Pan et al. [218][165] introduced a deep learning framework for suturing guidance, which can track the excised corneal contour through semantic segmentation and reconstruct the missing motion caused by occlusion using an optical flow inpainting network. Draelos et al. [219,220][166][167] developed a VR-OCT viewer using volume ray casting and GPU optimization strategies based on texture trilinear interpolation, nearest neighbor interpolation, gradient packing, and voxel packing. It improves the efficiency of data processing without significantly degrading the rendering quality, thus bringing the benefits of real-time interaction and full-field display. Unlike traditional microscope systems which constrain the head of the surgeon to the eye-piece, an extended reality-based head-mounted device allows the surgeon to move their head freely while visualizing the imagery.
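Volume ray casting renders each pixel by stepping along a ray through the OCT volume and sampling intensities at non-integer positions, which is where trilinear interpolation comes in. The CPU sketch below uses maximum-intensity projection as the compositing rule and assumes a volume layout vol[z][y][x] and a fixed step size; it only illustrates the sampling step, whereas Draelos et al. performed interpolation in GPU texture hardware together with further optimizations.

```python
import math


def trilinear(vol, x, y, z):
    """Sample vol[z][y][x] at fractional coordinates inside the grid."""
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])
    x0, y0, z0 = int(math.floor(x)), int(math.floor(y)), int(math.floor(z))
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0
    # Interpolate along x, then y, then z.
    c00 = vol[z0][y0][x0] * (1 - fx) + vol[z0][y0][x1] * fx
    c10 = vol[z0][y1][x0] * (1 - fx) + vol[z0][y1][x1] * fx
    c01 = vol[z1][y0][x0] * (1 - fx) + vol[z1][y0][x1] * fx
    c11 = vol[z1][y1][x0] * (1 - fx) + vol[z1][y1][x1] * fx
    c0 = c00 * (1 - fy) + c10 * fy
    c1 = c01 * (1 - fy) + c11 * fy
    return c0 * (1 - fz) + c1 * fz


def inside(vol, p):
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])
    x, y, z = p
    return 0 <= x <= nx - 1 and 0 <= y <= ny - 1 and 0 <= z <= nz - 1


def cast_ray_mip(vol, origin, direction, step=0.5, n_steps=256):
    """Maximum-intensity projection along one ray; samples outside the volume are skipped."""
    best = None
    for i in range(n_steps):
        p = [o + i * step * d for o, d in zip(origin, direction)]
        if inside(vol, p):
            value = trilinear(vol, *p)
            best = value if best is None else max(best, value)
    return best


# Tiny 2x2x2 volume whose intensity is x + 10*y + 100*z.
vol = [[[x + 10 * y + 100 * z for x in range(2)] for y in range(2)] for z in range(2)]
print(trilinear(vol, 0.5, 0.5, 0.5))                        # 55.5
print(cast_ray_mip(vol, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))  # 100.0
```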

Another challenge in applying XR to intraoperative guidance is identifying the essential information to augment in each surgical step; registering multimodal images is also difficult. To address this challenge, Tang et al. achieved 2D and 3D microscopic image registration of OCT using guiding laser points (fiducials) in AR [221][168], which eases intraocular information perception for surgeons and improves surgical visualization. Roodaki et al. [144][99] combined AR and OCT to perform complex intraoperative image fusion and information extraction, obtaining real-time information about surgical instruments in the eye to guide surgery and enhance its visualization.
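Once corresponding fiducials (such as guiding-laser spots) have been located in two images, a closed-form least-squares fit can estimate the 2D similarity transform (scale, rotation, translation) between them. The sketch below is a generic Procrustes-style solution that assumes known point correspondences and negligible lens distortion; it is not the registration method of Tang et al.

```python
import math


def fit_similarity_2d(src, dst):
    """Least-squares scale, rotation (rad), and translation mapping src points onto dst."""
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two corresponding point pairs")
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    a_num = b_num = denom = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        a_num += px * qx + py * qy  # dot products of centered points
        b_num += px * qy - py * qx  # cross products of centered points
        denom += px * px + py * py
    if denom == 0.0:
        raise ValueError("source points must not all coincide")
    a, b = a_num / denom, b_num / denom  # a = s*cos(theta), b = s*sin(theta)
    scale, theta = math.hypot(a, b), math.atan2(b, a)
    tx = dx - (a * sx - b * sy)
    ty = dy - (b * sx + a * sy)
    return scale, theta, (tx, ty)


def apply_similarity(scale, theta, t, p):
    c, s = math.cos(theta), math.sin(theta)
    return (scale * (c * p[0] - s * p[1]) + t[0], scale * (s * p[0] + c * p[1]) + t[1])


# Synthetic check: fiducials rotated by 90 degrees, scaled by 2, shifted by (3, 4).
oct_pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
scope_pts = [apply_similarity(2.0, math.pi / 2, (3.0, 4.0), p) for p in oct_pts]
print(fit_similarity_2d(oct_pts, scope_pts))
```

A similarity model is a reasonable first assumption when the two images differ mainly in pixel size and orientation; stronger optical distortion would call for an affine or non-rigid model.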

2.5. Automation

In the field of MSR, autonomous or semi-autonomous robots can not only respond to the surgeon’s commands, but also perform certain tasks automatically under the surgeon’s supervision, which can further improve surgical precision and efficiency and reduce fatigue caused by prolonged surgery [222][169]. The rise of automation in the surgical environment is increasingly being integrated into robotic microsurgery, particularly in ophthalmic surgery [223][170]. This section will provide a detailed presentation of the current automated methods and potential applications of automation in the MSR system.

-

Current automated methods

Current autonomous applications mainly address individual aspects of surgical procedures rather than covering the entire process [16,224][171][172]. Some MSR systems divide the process into subtasks, some of which are automated under the surgeon’s supervision, while others require manual intervention due to the limitations of existing technology. For example, cataract surgery encompasses six steps: corneal incision, capsulorhexis, fragmentation, emulsifying and aspirating lens material, clearing the remaining lens material, and implant insertion [57][173]. The first three steps and implant insertion have been investigated for potential automation [225,226,227][174][175][176]. However, emulsifying lens removal and implant insertion, being delicate and hazardous, face challenges such as hand tremors and the absence of depth information. To address these challenges, Chen et al. [57][173] developed an MSR system called IRISS to achieve semi-automated OCT-guided cataract removal. The system can generate anatomical reconstructions based on OCT images and generate tool trajectories, then automatically insert the instrument under image guidance and perform cataract removal tasks under the surgeon’s supervision. Subsequently, the same group utilized convolutional neural networks to accurately segment intraocular structures in OCT images, enabling successful semi-automatic detection and extraction of lens material [228][177]. In addition, polishing of the posterior capsule (PC, approximately 4–9 μm thick) reduces complications but is considered a high-risk procedure [229][178]. To address this, Gerber et al. developed the posterior capsule polishing function for IRISS, incorporating the guidance provided by OCT volume scan and B-scan [85][38].

Moreover, some research teams are focusing on automating preoperative operations to further improve precision and efficiency. A self-aligning scanner was proposed to achieve fully automatic ophthalmic imaging without mechanical stabilization in mannequins and free-standing human subjects, accelerating the automation process [220][167]. By combining laser-based marking, Wilson et al. [230][179] achieved the visualization of the otherwise invisible RCM to enable automatic RCM alignment with precision of 0.027 ± 0.002 mm, facilitating fully automated cataract extraction.

However, autonomous positioning and navigation pose challenges in microsurgery due to limited workspace and depth estimation. To overcome these difficulties, autonomous positioning of surgical instruments based on coordinated control of the light guide is being explored in RVC [133][88]. A spotlight-based 3D instrument guidance technique was also utilized for an autonomous vessel tracking task (an intermediate step in RVC) with better performance than manual execution and cooperative control in tracking vessels [147][103]. A technique that utilizes a laser aiming beam guided by position-based visual servoing, predicated on surface reconstruction and partitioned visual servoing, was employed to guide Micron for autonomous patterned laser photocoagulation—with fully automated motion and eye movement tracking—in ex vivo porcine eyes [136,231,232][91][180][181]. This method yielded results that were both quicker and more precise compared to semi-automated procedures and unassisted manual scanning of Micron. Additionally, image-guided MSR systems have been developed for automated cannulation of retinal vein phantoms, achieving precise targeting within 20 μm and successful drug infusion into the vascular lumen in all 30 experimental trials [22][11].

-

Potential automated methods

In the field of microsurgery, integrating machine learning techniques such as Learning from Demonstration (LfD) and Reinforcement Learning (RL) can enable robots to understand the surgical environment and perform appropriate operations, potentially advancing surgical automation and improving surgical outcomes [13][182]. LfD learns task policies from expert demonstrations [233][183]: the agent establishes a mapping between states and actions so that it can select an appropriate action for the current state. The expert is not required to provide additional data or any reinforcement signals during training. However, LfD requires a long training period and is highly dependent on the experience of the demonstrating surgeons [234][184]. RL, on the other hand, enables the robot to learn actions by interacting with its environment and seeking to maximize cumulative reward without expert demonstrations [235][185]. In this framework, the agent’s actions cause state transitions in the environment. An interpreter evaluates these states and determines the reward to give to the agent; the reward is then passed to the controller, which adjusts its actions to maximize the total reward and achieve the control goal [236][186]. However, RL training is complex, and specifying the reward function can be challenging.
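The RL loop described above (the agent acts, the environment transitions, a reward is assigned, and the policy is updated to maximize cumulative reward) can be illustrated with tabular Q-learning on a deliberately tiny, hypothetical task: advancing a needle through discrete depth levels to a target layer, with a per-step cost and a large penalty for overshooting. The environment, rewards, and hyperparameters are invented for illustration and do not model any cited system.

```python
import random

TARGET = 4            # index of the target layer (hypothetical)
N_STATES = 7          # depth levels 0..6
ACTIONS = (-1, 1, 2)  # retract one level, advance one level, advance two levels


def env_step(state, action):
    """Deterministic toy environment; reaching or passing TARGET ends the episode."""
    nxt = max(0, min(N_STATES - 1, state + ACTIONS[action]))
    if nxt == TARGET:
        return nxt, 10.0, True    # reward for reaching the target layer
    if nxt > TARGET:
        return nxt, -10.0, True   # penalty for overshooting (simulated tissue damage)
    return nxt, -1.0, False       # small cost per step to favor short paths


def greedy(q_row):
    return max(range(len(q_row)), key=lambda i: q_row[i])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(50):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = greedy(q[state])
            nxt, reward, done = env_step(state, action)
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def rollout(q, start=0, max_steps=20):
    state, path = start, [start]
    for _ in range(max_steps):
        state, _, done = env_step(state, greedy(q[state]))
        path.append(state)
        if done:
            break
    return path


print(rollout(train()))  # greedy path through the depth levels
```

Even in this toy case the outcome depends on how the penalty and step cost are chosen, which is a small-scale version of the reward-specification difficulty mentioned above.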

As a potential application in microsurgery, the integration of RL and LfD has been verified by Keller et al., who used an industrial robot for OCT-guided corneal needle insertion [237][187]. Their algorithm achieved the desired results with minimal tissue deformation and was applied to multiple corneas. However, the success of semi-automated detection and extraction tasks depends heavily on the quality of image segmentation of anatomical structures during DALK. Shin et al. [231][180] implemented semi-automatic extraction of lens fragments through semantic segmentation of four intraocular structures in OCT images using a convolutional neural network.

In addition, RL can be used to realize fast motion planning and automated manipulation tasks [238,239][188][189], and LfD has enabled robots to perform automated surgical tool trajectory planning [240][190] and navigation [131][86]. The trained LfD model could achieve tens-of-microns accuracy while simplifying complex procedures and reducing the risk of tissue damage during surgical tool navigation. Meanwhile, Kim et al. [130][85] combined deep LfD with optimal control to automate the tool navigation task on the Micron system, predicting target positions on the retinal surface from user-specified image pixel locations. This approach can estimate the eye geometry and generate safe trajectories within its boundaries.
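In its simplest form, learning from demonstration reduces to supervised regression from observed states to expert actions (behavior cloning). The hypothetical sketch below fits, per axis, a linear policy a = k·e + b that maps the tool-to-target error e to a velocity command a from recorded demonstration pairs; the cited works use far richer (deep) models, and the demonstration data here are synthetic.

```python
def fit_linear_policy(errors, actions):
    """Ordinary least squares for a = k * e + b on one axis."""
    n = len(errors)
    mean_e = sum(errors) / n
    mean_a = sum(actions) / n
    cov = sum((e - mean_e) * (a - mean_a) for e, a in zip(errors, actions))
    var = sum((e - mean_e) ** 2 for e in errors)
    if var == 0:
        raise ValueError("demonstrations must cover more than one error value")
    k = cov / var
    return k, mean_a - k * mean_e


def fit_policy(demos):
    """demos: list of (error_vector, action_vector) pairs; fits each axis independently."""
    dims = len(demos[0][0])
    return [fit_linear_policy([e[i] for e, _ in demos], [a[i] for _, a in demos])
            for i in range(dims)]


def act(policy, error):
    return [k * e + b for (k, b), e in zip(policy, error)]


# Synthetic "expert": commands 0.5 * ex + 0.1 on x and 0.8 * ey on y.
pairs = [(0.0, 1.0), (1.0, 3.0), (2.0, 0.0), (3.0, 2.0), (4.0, 4.0)]
demos = [((ex, ey), (0.5 * ex + 0.1, 0.8 * ey)) for ex, ey in pairs]
policy = fit_policy(demos)
print(act(policy, [2.0, 1.0]))
```

The policy can only be as good as the demonstrations it was fitted to, which mirrors the dependence of LfD on surgeon experience noted above.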

References

- Mattos, L.; Caldwell, D.; Peretti, G.; Mora, F.; Guastini, L.; Cingolani, R. Microsurgery Robots: Addressing the Needs of High-Precision Surgical Interventions. Swiss Med. Wkly. 2016, 146, w14375.

- Ahronovich, E.Z.; Simaan, N.; Joos, K.M. A Review of Robotic and OCT-Aided Systems for Vitreoretinal Surgery. Adv. Ther. 2021, 38, 2114–2129.

- Gijbels, A.; Vander Poorten, E.B.; Stalmans, P.; Van Brussel, H.; Reynaerts, D. Design of a Teleoperated Robotic System for Retinal Surgery. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2357–2363.

- Sutherland, G.; Maddahi, Y.; Zareinia, K.; Gan, L.; Lama, S. Robotics in the Neurosurgical Treatment of Glioma. Surg. Neurol. Int. 2015, 6, 1.

- Xiao, J.; Wu, Q.; Sun, D.; He, C.; Chen, Y. Classifications and Functions of Vitreoretinal Surgery Assisted Robots-A Review of the State of the Art. In Proceedings of the 2019 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Changsha, China, 12–13 January 2019; pp. 474–484.

- Uneri, A.; Balicki, M.A.; Handa, J.; Gehlbach, P.; Taylor, R.H.; Iordachita, I. New Steady-Hand Eye Robot with Micro-Force Sensing for Vitreoretinal Surgery. In Proceedings of the 2010 3rd IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, Tokyo, Japan, 26–29 September 2010; pp. 814–819.

- Gijbels, A.; Smits, J.; Schoevaerdts, L.; Willekens, K.; Vander Poorten, E.B.; Stalmans, P.; Reynaerts, D. In-Human Robot-Assisted Retinal Vein Cannulation, A World First. Ann. Biomed. Eng. 2018, 46, 1676–1685.

- Yang, S.; MacLachlan, R.A.; Riviere, C.N. Manipulator Design and Operation of a Six-Degree-of-Freedom Handheld Tremor-Canceling Microsurgical Instrument. IEEE/ASME Trans. Mechatron. 2015, 20, 761–772.

- Meenink, H.C.M. Vitreo-Retinal Eye Surgery Robot: Sustainable Precision. Ph.D. Thesis, Technische Universiteit Eindhoven, Eindhoven, The Netherlands, 2011.

- Hendrix, R. Robotically Assisted Eye Surgery: A Haptic Master Console. Ph.D. Thesis, Technische Universiteit Eindhoven, Eindhoven, The Netherlands, 2011.

- Gerber, M.J.; Hubschman, J.-P.; Tsao, T.-C. Automated Retinal Vein Cannulation on Silicone Phantoms Using Optical-Coherence-Tomography-Guided Robotic Manipulations. IEEE/ASME Trans. Mechatron. 2021, 26, 2758–2769.

- Chen, C.; Lee, Y.; Gerber, M.J.; Cheng, H.; Yang, Y.; Govetto, A.; Francone, A.A.; Soatto, S.; Grundfest, W.S.; Hubschman, J.; et al. Intraocular Robotic Interventional Surgical System (IRISS): Semi-automated OCT-guided Cataract Removal. Int. J. Med. Robot. Comput. Assist. Surg. 2018, 14.

- Taylor, R.H.; Funda, J.; Grossman, D.D.; Karidis, J.P.; Larose, D.A. Remote Center-of-Motion Robot for Surgery. 1995. Available online: https://patents.google.com/patent/US5397323A/en (accessed on 16 July 2023).

- Wang, W.; Li, J.; Wang, S.; Su, H.; Jiang, X. System Design and Animal Experiment Study of a Novel Minimally Invasive Surgical Robot. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 73–84.

- Nasseri, M.A.; Eder, M.; Nair, S.; Dean, E.C.; Maier, M.; Zapp, D.; Lohmann, C.P.; Knoll, A. The Introduction of a New Robot for Assistance in Ophthalmic Surgery. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5682–5685.

- Niu, G. Research on Slave Mechanism and Control of a Medical Robot System for Celiac Minimally Invasive Surgery. Ph.D. Thesis, Harbin Institute of Technology, Harbin, China, 2017. (In Chinese).

- Yang, U.; Kim, D.; Hwang, M.; Kong, D.; Kim, J.; Nho, Y.; Lee, W.; Kwon, D. A Novel Microsurgery Robot Mechanism with Mechanical Motion Scalability for Intraocular and Reconstructive Surgery. Int. J. Med. Robot. 2021, 17.

- Ida, Y.; Sugita, N.; Ueta, T.; Tamaki, Y.; Tanimoto, K.; Mitsuishi, M. Microsurgical Robotic System for Vitreoretinal Surgery. Int. J. Comput. Assist. Radiol. Surg. 2012, 7, 27–34.

- Essomba, T.; Nguyen Vu, L. Kinematic Analysis of a New Five-Bar Spherical Decoupled Mechanism with Two-Degrees of Freedom Remote Center of Motion. Mech. Mach. Theory 2018, 119, 184–197.

- Ma, B. Mechanism Design and Human-Robot Interaction of Endoscopic Surgery Robot. Master’s Thesis, Shanghai University of Engineering and Technology, Shanghai, China, 2020. (In Chinese).

- Gijbels, A.; Willekens, K.; Esteveny, L.; Stalmans, P.; Reynaerts, D.; Vander Poorten, E.B. Towards a Clinically Applicable Robotic Assistance System for Retinal Vein Cannulation. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 284–291.

- Bernasconi, A.; Cendes, F.; Theodore, W.H.; Gill, R.S.; Koepp, M.J.; Hogan, R.E.; Jackson, G.D.; Federico, P.; Labate, A.; Vaudano, A.E.; et al. Recommendations for the Use of Structural Magnetic Resonance Imaging in the Care of Patients with Epilepsy: A Consensus Report from the International League Against Epilepsy Neuroimaging Task Force. Epilepsia 2019, 60, 1054–1068.

- Spin-Neto, R.; Gotfredsen, E.; Wenzel, A. Impact of Voxel Size Variation on CBCT-Based Diagnostic Outcome in Dentistry: A Systematic Review. J. Digit. Imaging 2013, 26, 813–820.

- Li, G.; Su, H.; Cole, G.A.; Shang, W.; Harrington, K.; Camilo, A.; Pilitsis, J.G.; Fischer, G.S. Robotic System for MRI-Guided Stereotactic Neurosurgery. IEEE Trans. Biomed. Eng. 2015, 62, 1077–1088.

- Chen, Y.; Godage, I.; Su, H.; Song, A.; Yu, H. Stereotactic Systems for MRI-Guided Neurosurgeries: A State-of-the-Art Review. Ann. Biomed. Eng. 2019, 47, 335–353.

- Su, H.; Shang, W.; Li, G.; Patel, N.; Fischer, G.S. An MRI-Guided Telesurgery System Using a Fabry-Perot Interferometry Force Sensor and a Pneumatic Haptic Device. Ann. Biomed. Eng. 2017, 45, 1917–1928.

- Monfaredi, R.; Cleary, K.; Sharma, K. MRI Robots for Needle-Based Interventions: Systems and Technology. Ann. Biomed. Eng. 2018, 46, 1479–1497.

- Lang, M.J.; Greer, A.D.; Sutherland, G.R. Intra-Operative Robotics: NeuroArm. In Intraoperative Imaging; Pamir, M.N., Seifert, V., Kiris, T., Eds.; Acta Neurochirurgica Supplementum; Springer Vienna: Vienna, Austria, 2011; Volume 109, pp. 231–236. ISBN 978-3-211-99650-8.

- Sutherland, G.R.; Latour, I.; Greer, A.D.; Fielding, T.; Feil, G.; Newhook, P. An Image-Guided Magnetic Resonance-Compatible Surgical Robot. Neurosurgery 2008, 62, 286–293.

- Sutherland, G.R.; Latour, I.; Greer, A.D. Integrating an Image-Guided Robot with Intraoperative MRI. IEEE Eng. Med. Biol. Mag. 2008, 27, 59–65.

- Fang, G.; Chow, M.C.K.; Ho, J.D.L.; He, Z.; Wang, K.; Ng, T.C.; Tsoi, J.K.H.; Chan, P.-L.; Chang, H.-C.; Chan, D.T.-M.; et al. Soft Robotic Manipulator for Intraoperative MRI-Guided Transoral Laser Microsurgery. Sci. Robot. 2021, 6, eabg5575.

- Aumann, S.; Donner, S.; Fischer, J.; Müller, F. Optical Coherence Tomography (OCT): Principle and Technical Realization. In High Resolution Imaging in Microscopy and Ophthalmology: New Frontiers in Biomedical Optics; Bille, J.F., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 59–85. ISBN 978-3-030-16638-0.

- Carrasco-Zevallos, O.M.; Viehland, C.; Keller, B.; Draelos, M.; Kuo, A.N.; Toth, C.A.; Izatt, J.A. Review of Intraoperative Optical Coherence Tomography: Technology and Applications. Biomed. Opt. Express 2017, 8, 1607.

- Fujimoto, J.; Swanson, E. The Development, Commercialization, and Impact of Optical Coherence Tomography. Invest. Ophthalmol. Vis. Sci. 2016, 57, OCT1.

- Cheon, G.W.; Huang, Y.; Cha, J.; Gehlbach, P.L.; Kang, J.U. Accurate Real-Time Depth Control for CP-SSOCT Distal Sensor Based Handheld Microsurgery Tools. Biomed. Opt. Express 2015, 6, 1942.

- Cheon, G.W.; Gonenc, B.; Taylor, R.H.; Gehlbach, P.L.; Kang, J.U. Motorized Microforceps With Active Motion Guidance Based on Common-Path SSOCT for Epiretinal Membranectomy. IEEE/ASME Trans. Mechatron. 2017, 22, 2440–2448.

- Yu, H.; Shen, J.-H.; Shah, R.J.; Simaan, N.; Joos, K.M. Evaluation of Microsurgical Tasks with OCT-Guided and/or Robot-Assisted Ophthalmic Forceps. Biomed. Opt. Express 2015, 6, 457.

- Gerber, M.J.; Hubschman, J.-P.; Tsao, T.-C. Robotic Posterior Capsule Polishing by Optical Coherence Tomography Image Guidance. Int. J. Med. Robot. 2021, 17, e2248.

- Cornelissen, A.J.M.; van Mulken, T.J.M.; Graupner, C.; Qiu, S.S.; Keuter, X.H.A.; van der Hulst, R.R.W.J.; Schols, R.M. Near-Infrared Fluorescence Image-Guidance in Plastic Surgery: A Systematic Review. Eur. J. Plast. Surg. 2018, 41, 269–278.

- Jaffer, F.A. Molecular Imaging in the Clinical Arena. JAMA 2005, 293, 855.

- Orosco, R.K.; Tsien, R.Y.; Nguyen, Q.T. Fluorescence Imaging in Surgery. IEEE Rev. Biomed. Eng. 2013, 6, 178–187.

- Gioux, S.; Choi, H.S.; Frangioni, J.V. Image-Guided Surgery Using Invisible Near-Infrared Light: Fundamentals of Clinical Translation. Mol. Imaging 2010, 9, 237–255.

- van den Berg, N.S.; van Leeuwen, F.W.B.; van der Poel, H.G. Fluorescence Guidance in Urologic Surgery. Curr. Opin. Urol. 2012, 22, 109–120.

- Schols, R.M.; Connell, N.J.; Stassen, L.P.S. Near-Infrared Fluorescence Imaging for Real-Time Intraoperative Anatomical Guidance in Minimally Invasive Surgery: A Systematic Review of the Literature. World J. Surg. 2015, 39, 1069–1079.

- Lee, Y.-J.; van den Berg, N.S.; Orosco, R.K.; Rosenthal, E.L.; Sorger, J.M. A Narrative Review of Fluorescence Imaging in Robotic-Assisted Surgery. Laparosc. Surg. 2021, 5, 31.

- Yamamoto, T.; Yamamoto, N.; Azuma, S.; Yoshimatsu, H.; Seki, Y.; Narushima, M.; Koshima, I. Near-Infrared Illumination System-Integrated Microscope for Supermicrosurgical Lymphaticovenular Anastomosis. Microsurgery 2014, 34, 23–27.

- van Mulken, T.J.M.; Schols, R.M.; Scharmga, A.M.J.; Winkens, B.; Cau, R.; Schoenmakers, F.B.F.; Qiu, S.S.; van der Hulst, R.R.W.J.; Keuter, X.H.A.; Lauwers, T.M.A.S.; et al. First-in-Human Robotic Supermicrosurgery Using a Dedicated Microsurgical Robot for Treating Breast Cancer-Related Lymphedema: A Randomized Pilot Trial. Nat. Commun. 2020, 11, 757.

- Wang, K.K.; Carr-Locke, D.L.; Singh, S.K.; Neumann, H.; Bertani, H.; Galmiche, J.-P.; Arsenescu, R.I.; Caillol, F.; Chang, K.J.; Chaussade, S.; et al. Use of Probe-based Confocal Laser Endomicroscopy (pCLE) in Gastrointestinal Applications. A Consensus Report Based on Clinical Evidence. United Eur. Gastroenterol. J. 2015, 3, 230–254.

- Payne, C.J.; Yang, G.-Z. Hand-Held Medical Robots. Ann. Biomed. Eng. 2014, 42, 1594–1605.

- Tun Latt, W.; Chang, T.P.; Di Marco, A.; Pratt, P.; Kwok, K.-W.; Clark, J.; Yang, G.-Z. A Hand-Held Instrument for in Vivo Probe-Based Confocal Laser Endomicroscopy during Minimally Invasive Surgery. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 1982–1987.

- Li, Z.; Shahbazi, M.; Patel, N.; O’Sullivan, E.; Zhang, H.; Vyas, K.; Chalasani, P.; Deguet, A.; Gehlbach, P.L.; Iordachita, I.; et al. Hybrid Robot-Assisted Frameworks for Endomicroscopy Scanning in Retinal Surgeries. IEEE Trans. Med. Robot. Bionics 2020, 2, 176–187.

- Nishiyama, K. From Exoscope into the Next Generation. J. Korean Neurosurg. Soc. 2017, 60, 289–293.

- Wan, J.; Oblak, M.L.; Ram, A.; Singh, A.; Nykamp, S. Determining Agreement between Preoperative Computed Tomography Lymphography and Indocyanine Green Near Infrared Fluorescence Intraoperative Imaging for Sentinel Lymph Node Mapping in Dogs with Oral Tumours. Vet. Comp. Oncol. 2021, 19, 295–303.

- Montemurro, N.; Scerrati, A.; Ricciardi, L.; Trevisi, G. The Exoscope in Neurosurgery: An Overview of the Current Literature of Intraoperative Use in Brain and Spine Surgery. J. Clin. Med. 2021, 11, 223.

- Mamelak, A.N.; Nobuto, T.; Berci, G. Initial Clinical Experience with a High-Definition Exoscope System for Microneurosurgery. Neurosurgery 2010, 67, 476–483.

- Khalessi, A.A.; Rahme, R.; Rennert, R.C.; Borgas, P.; Steinberg, J.A.; White, T.G.; Santiago-Dieppa, D.R.; Boockvar, J.A.; Hatefi, D.; Pannell, J.S.; et al. First-in-Man Clinical Experience Using a High-Definition 3-Dimensional Exoscope System for Microneurosurgery. Oper. Neurosurg. 2019, 16, 717–725.

- Dada, T.; Sihota, R.; Gadia, R.; Aggarwal, A.; Mandal, S.; Gupta, V. Comparison of Anterior Segment Optical Coherence Tomography and Ultrasound Biomicroscopy for Assessment of the Anterior Segment. J. Cataract Refract. Surg. 2007, 33, 837–840.

- Kumar, R.S.; Sudhakaran, S.; Aung, T. Angle-Closure Glaucoma: Imaging. In Pearls of Glaucoma Management; Giaconi, J.A., Law, S.K., Nouri-Mahdavi, K., Coleman, A.L., Caprioli, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 517–531. ISBN 978-3-662-49042-6.

- Chen, W.; He, S.; Xiang, D. Management of Aphakia with Visual Axis Opacification after Congenital Cataract Surgery Based on UBM Image Features Analysis. J. Ophthalmol. 2020, 2020, 9489450.

- Ursea, R.; Silverman, R.H. Anterior-Segment Imaging for Assessment of Glaucoma. Expert Rev. Ophthalmol. 2010, 5, 59–74.

- Smith, B.R.; Gambhir, S.S. Nanomaterials for In Vivo Imaging. Chem. Rev. 2017, 117, 901–986.

- Vander Poorten, E.; Riviere, C.N.; Abbott, J.J.; Bergeles, C.; Nasseri, M.A.; Kang, J.U.; Sznitman, R.; Faridpooya, K.; Iordachita, I. Robotic Retinal Surgery. In Handbook of Robotic and Image-Guided Surgery; Elsevier: Amsterdam, The Netherlands, 2020; pp. 627–672. ISBN 978-0-12-814245-5.

- Jeppesen, J.; Faber, C.E. Surgical Complications Following Cochlear Implantation in Adults Based on a Proposed Reporting Consensus. Acta Oto-Laryngol. 2013, 133, 1012–1021.

- Sznitman, R.; Richa, R.; Taylor, R.H.; Jedynak, B.; Hager, G.D. Unified Detection and Tracking of Instruments during Retinal Microsurgery. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1263–1273.

- Sznitman, R.; Ali, K.; Richa, R.; Taylor, R.H.; Hager, G.D.; Fua, P. Data-Driven Visual Tracking in Retinal Microsurgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2012; Ayache, N., Delingette, H., Golland, P., Mori, K., Eds.; Lecture Notes in Computer Science; Springer Berlin Heidelberg: Berlin/Heidelberg, Germany, 2012; Volume 7511, pp. 568–575. ISBN 978-3-642-33417-7.

- Sznitman, R.; Becker, C.; Fua, P. Fast Part-Based Classification for Instrument Detection in Minimally Invasive Surgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2014; Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8674, pp. 692–699. ISBN 978-3-319-10469-0.

- Rieke, N.; Tan, D.J.; Alsheakhali, M.; Tombari, F.; Di San Filippo, C.A.; Belagiannis, V.; Eslami, A.; Navab, N. Surgical Tool Tracking and Pose Estimation in Retinal Microsurgery. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9349, pp. 266–273. ISBN 978-3-319-24552-2.

- Rieke, N.; Tan, D.J.; Tombari, F.; Vizcaíno, J.P.; Di San Filippo, C.A.; Eslami, A.; Navab, N. Real-Time Online Adaption for Robust Instrument Tracking and Pose Estimation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016; Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9900, pp. 422–430. ISBN 978-3-319-46719-1.

- Rieke, N.; Tombari, F.; Navab, N. Computer Vision and Machine Learning for Surgical Instrument Tracking. In Computer Vision for Assistive Healthcare; Elsevier: Amsterdam, The Netherlands, 2018; pp. 105–126. ISBN 978-0-12-813445-0.

- Lu, W.; Tong, Y.; Yu, Y.; Xing, Y.; Chen, C.; Shen, Y. Deep Learning-Based Automated Classification of Multi-Categorical Abnormalities From Optical Coherence Tomography Images. Transl. Vis. Sci. Technol. 2018, 7, 41.

- Loo, J.; Fang, L.; Cunefare, D.; Jaffe, G.J.; Farsiu, S. Deep Longitudinal Transfer Learning-Based Automatic Segmentation of Photoreceptor Ellipsoid Zone Defects on Optical Coherence Tomography Images of Macular Telangiectasia Type 2. Biomed. Opt. Express 2018, 9, 2681.