Please note this is a comparison between Version 2 by Fanny Huang and Version 1 by ZHIHAO SU.

Deep learning models have shown great promise in diagnosing skeletal fractures from X-ray images. Deep learning algorithms are widely applied in X-ray and CT image processing, for example to assess bone mineral density (BMD), detect bone fractures, and recommend treatment.

- deep learning algorithms

- skeletal fracture detection

1. Introduction

In recent years, medical image processing based on machine learning algorithms, especially deep learning algorithms, has gained increasing attention [1,2]. Compared with traditional methods, deep learning algorithms can extract features automatically [3,4]. Deep learning algorithms are widely applied in X-ray and CT image processing [5,6], for example to assess bone mineral density (BMD), detect bone fractures [7], and recommend treatment [8]. In clinical practice, marking fracture sites manually is tiring and time-consuming for doctors, so advances in deep learning for computer vision have inspired many researchers to tackle problems in medical imaging.

In the past, many bone fracture detection studies [9,10,11,12] used manual feature extractors to generate feature vectors, including color, texture, and shape features. Later, a growing body of research [13,14,15,16,17] combined these features with machine learning classifiers to recognize fractures, which improved bone fracture image processing. However, traditional manual feature extraction algorithms and machine learning classifiers rely on complex mathematical operations yet deliver limited performance [18,19,20]. As a result, deep learning algorithms have become increasingly popular in recent years and are gradually making traditional methods obsolete.

Selecting appropriate references is important. They should be closely related to the main topic and written by recognized experts. In fast-changing areas such as technology or medicine, newer material is preferable. Sources that have been widely reviewed and approved are also preferable, and it is a plus if many researchers have cited them. The references should be of good quality, and the methods by which they obtained their information should be sound. Researchers identified 337 records from database searches and other sources. After screening 267 of these records, 198 were excluded, leaving 57 full-text articles for assessment. Ultimately, 40 articles were chosen for analysis.

Current research directions in bone fracture diagnosis include not only the application of deep learning algorithms to specific tasks but also the evolution of the algorithms themselves, from traditional bounding boxes to semantically rich representations that emphasize attention mechanisms and heat maps for comprehensive fracture visualization [19,20,21,22,23]. Although reviews on the topic exist, a precise understanding of the various subareas of this rapidly evolving domain is still missing. The review in [19] does not analyze the architecture and process of the AI models, while another review [21] selected only eleven studies, which cannot cover the entire research field. Additionally, the review in [22] is too broad to provide precise guidance. Finally, the review in [23] explains much foundational knowledge but does not relate it to performance metrics, so the key indicators of each AI model are not shown.

2. Skeletal Fracture Detection with Deep Learning

2.1. Task Definition

Publications related to computer vision generally use the recognition task to decide whether an object is the target or not, while the classification task assigns the object to a specific category. The detection task finds the target with a bounding box, while the localization task specifies the location information of the target [24]. At present, most papers do not clearly define bone fracture recognition, classification, detection, and localization, so the search results for specific tasks were mixed. Deep learning algorithms for computer vision offer systematic models for the different tasks, yet the definitions of the four tasks in many papers are inconsistent with mainstream terminology.

However, the current landscape faces several pain points. Most notably, many research papers are ambiguous about the definitions of bone fracture recognition, classification, detection, and localization. This lack of standardized terminology has led to confusion in research methodologies and outcomes, resulting in mixed search results for specific tasks. For instance, the 2017 paper by Yang T H et al. worked with images labeled as fracture or non-fracture, so the fractures it reports as detected were in fact identified through classification [25].

Also, while deep learning algorithms for computer vision have established definitions for the different tasks, many articles do not follow them. A major problem is that different studies use different terms for the same task, departing from mainstream usage. This inconsistency makes it hard to combine knowledge from different sources and slows the development of common approaches and solutions in this area.

The dominant challenge, therefore, is to develop a universally accepted formal definition of these tasks, so that research outputs are consistent and comparable and progress in the field accelerates. Suppose the bone diagnosis task is defined as $Y = f(x)$, where $x$ is the input image and $Y$ is the output diagnosis result. For the four different tasks, $Y$ has different meanings. For the recognition task, $Y \in \{\text{fracture}, \text{non-fracture}\}$. For the classification task, $Y \in \{\text{type A fracture}, \text{type B fracture}, \ldots, \text{non-fracture}\}$. For the detection task, $Y = \{y_1, y_2, y_3, \ldots, y_n \mid y_i = (x, y, w, h)\}$, which is a collection of fracture bounding boxes. For the localization task, $Y = \{a_{i,j}\}_{m \times n}$, which is the matrix of the probability of fracture at each location.
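The four output spaces defined above can be written down as simple data types. The following is a minimal sketch, not a definition taken from any of the reviewed papers: the label tuples, the `BoundingBox` class, and the `is_*_output` helper names are hypothetical, and the classification labels are placeholders for whatever fracture taxonomy a study adopts.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical label sets; the text only names "type A", "type B", ... generically.
RECOGNITION_LABELS = ("fracture", "non-fracture")
CLASSIFICATION_LABELS = ("type A fracture", "type B fracture", "non-fracture")


@dataclass(frozen=True)
class BoundingBox:
    """One detection result y_i = (x, y, w, h): position, width, height."""
    x: float
    y: float
    w: float
    h: float


def is_recognition_output(y: str) -> bool:
    # Recognition: Y is one of exactly two labels.
    return y in RECOGNITION_LABELS


def is_classification_output(y: str) -> bool:
    # Classification: Y is one of several fracture types or non-fracture.
    return y in CLASSIFICATION_LABELS


def is_detection_output(y: Sequence[BoundingBox]) -> bool:
    # Detection: Y is a (possibly empty) collection of boxes with positive size.
    return all(isinstance(b, BoundingBox) and b.w > 0 and b.h > 0 for b in y)


def is_localization_output(y: Sequence[Sequence[float]]) -> bool:
    # Localization: Y is a rectangular m x n matrix of probabilities in [0, 1].
    if not y or not y[0]:
        return False
    n = len(y[0])
    return all(len(row) == n and all(0.0 <= a <= 1.0 for a in row) for row in y)
```

Writing the outputs this way makes the difference between the tasks a matter of return type, which is one way a paper could state unambiguously which task it addresses.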

2.2. Basic Knowledge

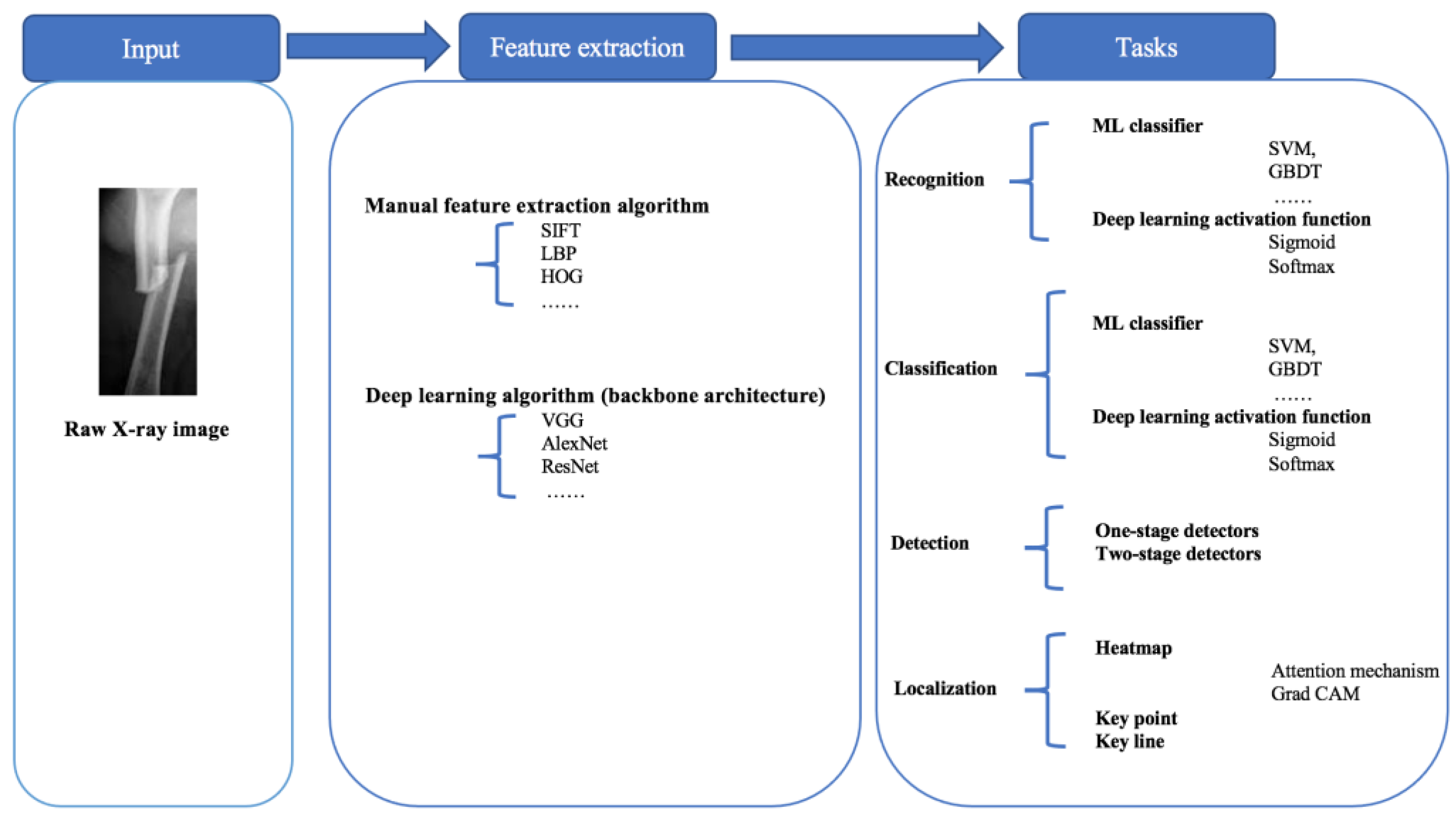

Image processing algorithms usually have two main steps, as illustrated in Figure 1. The first step is feature extraction, and the second is the specific modeling task, such as recognition, classification, detection, or localization. Feature extraction can be done in two ways: manual feature extraction or deep learning algorithms. Bone fracture diagnosis research needs to draw lessons from the state of the art in deep learning [22], because the application of deep learning algorithms tends to lag behind theoretical research. In deep learning algorithms, feature extraction is carried out by the backbone architecture, which is the most basic part of the algorithm [26]. Once the features have been extracted, only the specific modeling task, such as classification or detection, remains to be performed. Both machine learning classifiers and deep learning models play a key role in solving the specific problem after feature extraction.

Figure 1. The processing graph of the four modeling tasks.
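The two-step structure described above, feature extraction followed by a task-specific model, can be sketched as function composition. This is a toy illustration only: `manual_features`, `recognition_head`, `make_pipeline`, and the threshold value are all hypothetical, the hand-written features stand in for a real manual extractor or deep learning backbone, and the decision rule has no diagnostic meaning.

```python
from typing import Callable, List, Sequence

Image = Sequence[Sequence[float]]  # grayscale image as rows of pixel intensities
Features = List[float]


def manual_features(image: Image) -> Features:
    """Step 1 (toy): mean intensity and mean absolute horizontal gradient."""
    pixels = [p for row in image for p in row]
    mean_intensity = sum(pixels) / len(pixels)
    grads = [abs(row[j + 1] - row[j]) for row in image for j in range(len(row) - 1)]
    mean_gradient = sum(grads) / len(grads) if grads else 0.0
    return [mean_intensity, mean_gradient]


def recognition_head(features: Features, threshold: float = 0.25) -> str:
    """Step 2 (toy): binary decision on the features; the threshold is arbitrary."""
    return "fracture" if features[1] > threshold else "non-fracture"


def make_pipeline(
    extractor: Callable[[Image], Features],
    head: Callable[[Features], str],
) -> Callable[[Image], str]:
    """Compose a feature extractor with a task-specific head."""
    def pipeline(image: Image) -> str:
        return head(extractor(image))
    return pipeline


recognize = make_pipeline(manual_features, recognition_head)
```

Because the extractor and the head are separate arguments, the same extractor could in principle be paired with a classification, detection, or localization head, which mirrors the role the text assigns to the backbone.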

The recognition task is a binary classification task that identifies, based on the extracted features, whether the bone in an X-ray image is fractured or not. The classification task involves multi-class classification only, for example into transverse, oblique, and spiral fractures [27]. Detection and localization, on the other hand, both aim to find the fracture position but use different tools. The detection task draws bounding boxes and recognizes fractures within them, while localization marks the fracture position with heat maps, key points, or key lines.
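To make the contrast between the two position-finding outputs concrete, the sketch below starts from a small heat map (a matrix of fracture probabilities) and derives both a key point and a bounding box from it. The conversion rule, the function names, and the 0.5 threshold are assumptions chosen for illustration and are not a method described in the reviewed studies.

```python
from typing import Optional, Sequence, Tuple

Heatmap = Sequence[Sequence[float]]  # m x n matrix of fracture probabilities


def peak_location(heatmap: Heatmap) -> Tuple[int, int]:
    """Key-point style output: (row, col) of the most probable cell."""
    best = (0, 0)
    for i, row in enumerate(heatmap):
        for j, a in enumerate(row):
            if a > heatmap[best[0]][best[1]]:
                best = (i, j)
    return best


def box_from_heatmap(
    heatmap: Heatmap, threshold: float = 0.5
) -> Optional[Tuple[int, int, int, int]]:
    """Bounding-box style output (x, y, w, h) in cell units.

    The box encloses every cell whose probability is at least `threshold`;
    returns None when no cell qualifies.
    """
    cells = [
        (i, j)
        for i, row in enumerate(heatmap)
        for j, a in enumerate(row)
        if a >= threshold
    ]
    if not cells:
        return None
    rows = [i for i, _ in cells]
    cols = [j for _, j in cells]
    x, y = min(cols), min(rows)
    return (x, y, max(cols) - x + 1, max(rows) - y + 1)


example = [
    [0.1, 0.2, 0.1, 0.0],
    [0.1, 0.8, 0.9, 0.1],
    [0.0, 0.6, 0.7, 0.1],
]
```

For `example`, `peak_location` returns `(1, 2)` and `box_from_heatmap` returns `(1, 1, 2, 2)`. The heat map keeps per-location probabilities that the box discards, which is the sense in which localization output is richer than a bounding box.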

References

- Cai, L.; Gao, J.; Zhao, D. A review of the application of deep learning in medical image classification and segmentation. Ann. Transl. Med. 2020, 8, 713.

- Latif, J.; Xiao, C.; Imran, A.; Tu, S. Medical imaging using machine learning and deep learning algorithms: A review. In Proceedings of the 2019 2nd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 30–31 January 2019; pp. 1–5.

- Fourcade, A.; Khonsari, R.H. Deep learning in medical image analysis: A third eye for doctors. J. Stomatol. Oral Maxillofac. Surg. 2019, 120, 279–288.

- Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. J. Imaging 2020, 6, 52.

- Khalid, H.; Hussain, M.; Al Ghamdi, M.A.; Khalid, T.; Khalid, K.; Khan, M.A.; Fatima, K.; Masood, K.; Almotiri, S.H.; Farooq, M.S.; et al. A comparative systematic literature review on knee bone reports from mri, x-rays and ct scans using deep learning and machine learning methodologies. Diagnostics 2020, 10, 518.

- Minnema, J.; Ernst, A.; van Eijnatten, M.; Pauwels, R.; Forouzanfar, T.; Batenburg, K.J.; Wolff, J. A review on the application of deep learning for ct reconstruction, bone segmentation and surgical planning in oral and maxillofacial surgery. Dentomaxillofacial Radiol. 2022, 51, 20210437.

- Wong, S.; Al-Hasani, H.; Alam, Z.; Alam, A. Artificial intelligence in radiology: How will we be affected? Eur. Radiol. 2019, 29, 141–143.

- Bujila, R.; Omar, A.; Poludniowski, G. A validation of spekpy: A software toolkit for modeling X-ray tube spectra. Phys. Medica 2020, 75, 44–54.

- Lim, S.E.; Xing, Y.; Chen, Y.; Leow, W.K.; Howe, T.S.; Png, M.A. Detection of femur and radius fractures in X-ray images. In Proceedings of the 2nd International Conference on Advances in Medical Signal and Information Processing, Valletta, Malta, 5–8 September 2004; Volume 65.

- Linda, C.H.; Jiji, G.W. Crack detection in X-ray images using fuzzy index measure. Appl. Soft Comput. 2011, 11, 3571–3579.

- Umadevi, N.; Geethalakshmi, S. Multiple classification system for fracture detection in human bone X-ray images. In Proceedings of the 2012 Third International Conference on Computing, Communication and Networking Technologies (ICCCNT’12), Coimbatore, India, 26–28 July 2012; pp. 1–8.

- Al-Ayyoub, M.; Hmeidi, I.; Rababah, H. Detecting hand bone fractures in X-ray images. J. Multimed. Process. Technol. 2013, 4, 155–168.

- He, J.C.; Leow, W.K.; Howe, T.S. Hierarchical classifiers for detection of fractures in X-ray images. In Computer Analysis of Images and Patterns, Proceedings of the 12th International Conference, CAIP 2007, Vienna, Austria, 27–29 August 2007; Proceedings 12; Springer: Cham, Switzerland, 2007; pp. 962–969.

- Lum, V.L.F.; Leow, W.K.; Chen, Y.; Howe, T.S.; Png, M.A. Combining classifiers for bone fracture detection in X-ray images. In Proceedings of the IEEE International Conference on Image Processing 2005, Genova, Italy, 14 September 2005; Volume 1, p. I-1149.

- Ghaderzadeh, M.; Aria, M.; Hosseini, A.; Asadi, F.; Bashash, D.; Abolghasemi, H. A fast and efficient CNN model for B-ALL diagnosis and its subtypes classification using peripheral blood smear images. Int. J. Intell. Syst. 2022, 37, 5113–5133.

- Hosseini, A.; Eshraghi, M.A.; Taami, T.; Sadeghsalehi, H.; Hoseinzadeh, Z.; Ghaderzadeh, M.; Rafiee, M. A mobile application based on efficient lightweight CNN model for classification of B-ALL cancer from non-cancerous cells: A design and implementation study. Inform. Med. Unlocked 2023, 39, 101244.

- Cao, Y.; Wang, H.; Moradi, M.; Prasanna, P.; Syeda-Mahmood, T.F. Fracture detection in X-ray images through stacked random forests feature fusion. In Proceedings of the 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), Brooklyn, NY, USA, 16–19 April 2015; pp. 801–805.

- Myint, W.W.; Tun, K.S.; Tun, H.M. Analysis on leg bone fracture detection and classification using X-ray images. Mach. Learn. Res. 2018, 3, 49–59.

- Langerhuizen, D.W.; Janssen, S.J.; Mallee, W.H.; Van Den Bekerom, M.P.; Ring, D.; Kerkhoffs, G.M.; Jaarsma, R.L.; Doornberg, J.N. What are the applications and limitations of artificial intelligence for fracture detection and classification in orthopaedic trauma imaging? a systematic review. Clin. Orthop. Relat. Res. 2019, 477, 2482.

- Adam, A.; Rahman, A.H.A.; Sani, N.S.; Alyessari, Z.A.A.; Mamat, N.J.Z.; Hasan, B. Epithelial layer estimation using curvatures and textural features for dysplastic tissue detection. CMC-Comput. Mater. Contin. 2021, 67, 761–777.

- Tanzi, L.; Vezzetti, E.; Moreno, R.; Moos, S. X-ray bone fracture classification using deep learning: A baseline for designing a reliable approach. Appl. Sci. 2020, 10, 1507.

- Joshi, D.; Singh, T.P. A survey of fracture detection techniques in bone X-ray images. Artif. Intell. Rev. 2020, 53, 4475–4517.

- Rainey, C.; McConnell, J.; Hughes, C.; Bond, R.; McFadden, S. Artificial intelligence for diagnosis of fractures on plain radiographs: A scoping review of current literature. Intell.-Based Med. 2021, 5, 100033.

- Hassaballah, M.; Awad, A.I. Deep Learning in Computer Vision: Principles and Applications; CRC Press: Boca Raton, FL, USA, 2020.

- Dimililer, K. Ibfds: Intelligent bone fracture detection system. Procedia Comput. Sci. 2017, 120, 260–267.

- Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126, 103514.

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-art in artificial neural network applications: A survey. Heliyon 2018, 4, e00938.
