Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 2 by Jason Zhu and Version 1 by Alireza Mofidi.

Machine learning approaches, in particular graph learning methods, have achieved great results in the field of natural language processing, in particular text classification tasks. However, many of such models have shown limited generalization on datasets in different languages.

- non-English text classification

- graph machine learning

- ensemble learning method

1. Introduction

In the last decade, there has been a tremendous growth in the number of digital documents and complex textual data. Text classification is a classically important task in many natural language processing (NLP) applications, such as sentiment analysis, topic labeling, and question answering. Sentiment analysis is particularly and significantly important in the realms of business and sales, as it enables organizations to gain valuable insights and make informed decisions. In the age of information explosion, processing and classifying large volumes of textual data manually is time-consuming and challenging. Moreover, the accuracy of manual text classification can be easily influenced by human factors such as fatigue and insufficient domain knowledge. It is desirable to automate classification methods using machine learning techniques, which are more reliable. Additionally, this can contribute to increase the efficiency of information retrieval and reduce the burden of information overload by effectively locating the required information [1]. See some of the works on text classification as well as some of its numerous real-world applications in [2,3,4,5][2][3][4][5].

On the other hand, graphs provide an important data representation that is used in a wide range of real-world problems. Effective graph analysis enables users to gain a deeper understanding of the underlying information in the data and can be applied in various useful applications such as node classification, community detection, link prediction, and more. Graph representation is an efficient and effective method for addressing graph analysis tasks. It compresses graph data into a lower-dimensional space while attempting to preserve the structural information and characteristics of the graph to the maximum extent.

Graph neural networks (GNNs) represent today central notions and tools in a wide range of machine learning areas since they are able to employ the power of both graphs and neural networks in order to operate on data and perform machine learning tasks such as text classification.

2. Preprocessing

The preprocessing stage for the textual dataset before graph construction involves several steps. It is also worth mentioning that, for non-English datasets, the pre-processing stage may be different from some technical aspects relevant to that particular language. Researchers explain the approach here, although similar processes have been followed in earlier works such as in [21][6]. First, stop words are removed. These are common words that carry little to no semantic importance and their removal can enhance the performance and efficiency of natural language processing (NLP) models. Next, punctuation marks such as colons, semicolons, quotation marks, parentheses, and others are eliminated. This simplifies the text and facilitates processing by NLP models. Stemming is applied to transform words into their base or root form, ensuring standardization of the textual data. Finally, tokenization is performed, which involves dividing the text into smaller units known as tokens, which is usually achieved by splitting it into words. These pre-processing steps prepare the text for subsequent graph construction tasks.3. Graph Construction

There exist different methods for graph construction in the context of text classification. An important, known method is to construct a graph for a corpus based on word co-occurrence and document–word relations. This graph is a heterogeneous graph that includes word nodes and document nodes, allowing the modeling of global word co-occurrence and the adaptation of graph convolution techniques [20][7]. After constructing the graph, the next step is to create initial feature vectors for each node in the graph based upon pre-trained models, namely BERT and ParsBERT. It is worth noting that all the unique words obtained from the pre-processing stage, along with all the documents, altogether form the set of nodes of the graph. Edges are weighted and defined to belong to one of three groups. One group consists of those edges between document–word pairs based upon word occurrence in documents. The second group consists of those edges between word–word pairs based upon word co-occurrence in the entire corpus. The third group consists of those edges between document–document pairs based upon word co-occurrence in the two documents. As shown in Equation (1), the weight 𝐴𝑖,𝑗 of the edges (𝑖,𝑗) is defined using the term frequency-inverse document frequency (TF-IDF) [8] measure (for document–word edges) and the point-wise mutual information (PMI) measure (for word–word edges). The PMI measure captures the semantic correlation between words in the corpus. Moreover, Jaccard similarity is used to define the edge weight by calculating the similarity between two documents [21][6].𝐴𝑖,𝑗=⎧⎩⎨𝑃𝑀𝐼𝑖,𝑗𝑇𝐹−𝐼𝐷𝐹𝑖,𝑗𝐽𝑎𝑐𝑎𝑟𝑑𝑖,𝑗1𝑖and𝑗arebothwords,𝑖isadocand𝑗isaword,𝑖and𝑗arebothdocuments,𝑖=𝑗,

ℎ(𝑘)𝑢=𝜎(𝑊(𝑘)𝑠𝑒𝑙𝑓ℎ(𝑘−1)𝑢+𝑊(𝑘)𝑛𝑒𝑖𝑔ℎ∑𝑣𝜖𝑁(𝑢)ℎ(𝑘−1)𝑣+𝑏(𝑘))

4. Graph Partitioning

Researchers now address another ingredient of the combination of techniques, which posses both conceptual as well as technical significance in the results. A fundamental challenge in graph neural networks is the need for a large space to store the graph and the representation vectors created for each node. To address this issue, one of the contributions is to apply Cluster-GCN algorithm [23][9], which partitions the graph into smaller clusters, as will be explained below. The Cluster-GCN algorithm utilizes a graph-clustering structure to address the challenge posed by large-scale graph neural networks. In order to overcome the need for extensive memory and storage for the graph and its node representation vectors, the algorithm divides the graph into smaller subsets by using graph-clustering algorithms like METIS [24][10]. METIS aims to partition the graph into subgraphs of approximately equal size while minimizing the edge connections between them. The process involves graph coarsening, wherein vertices in the original graph are merged to create a smaller yet representative graph for efficient partitioning. After generating the initial subgraphs using the graph partitioning algorithm, the algorithm refines the partitioning in a recursive manner by the application of an uncoarsening algorithm. This recursive process propagates partitioning information from smaller to larger levels while maintaining the balance and size of the subgraphs. By dividing the graph into smaller clusters, the model’s performance improves in terms of computational space and time. The decision to employ graph-clustering methods is driven by the aim to create partitions wherein connections within each group are strong, capturing the clustering and community structure of the graph effectively. This approach is particularly beneficial in node embeddings, as nodes and their neighbors usually belong to the same cluster; it enables efficient batch processing.5. Ensemble Learning

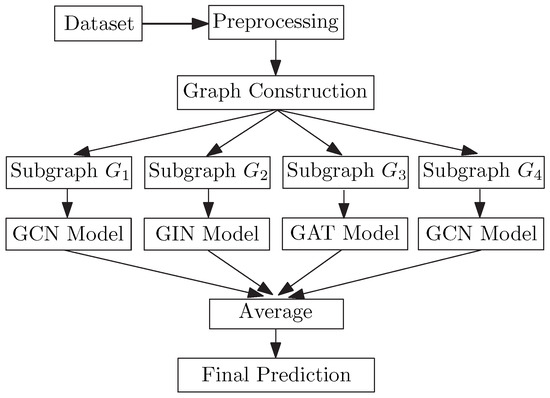

One of the other ingredients of the combination of techniques is using ideas from the theory of ensemble learning. Bagging and stacking are important techniques in neural networks. After the preprocessing stage in the dataset, researchers construct the graph in the way that was described above. Then, using the Cluster-GCN algorithm, researchers divide the input graph into four disjoint-induced subgraphs. Each of these subgraphs is then fed to a separate graph neural network. According to the experiences, graph convolutional networks (GCNs) have shown better performance in text classification processing in many of the attempts. Therefore, researchers highlight the use of GCNs in the combination. In addition to GCNs, researchers also utilize a graph isomorphism networks (GINs) framework as well as graph attention networks (GATs) as two other parts of the ensemble learning in the combination. It is worth noting that the GIN part in the combination here intends to capture the global structure of the graph. An overview of the algorithm used is shown in Figure 1. Once training was conducted over separated individual models, after which researchers obtained four different trained GNN models. In the test stage, test samples pass through all these models and each one creates its own classification output vector. Then, the results of all of these models are combined by taking the average of those output vectors. Via this process, the ensemble method can help to enhance the prediction accuracy by amalgamating the strengths of multiple models.

Figure 1. Using group algorithms in graph neural networks.

- Li, Q.; Peng, H.; Li, J.; Xia, C.; Yang, R.; Sun, L.; Yu, P.S.; He, L. A Survey on Text Classification: From Traditional to Deep Learning. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–41. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, S.; Liu, B. Deep learning for sentiment analysis: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Dis. 2018, 8, e1253. [Google Scholar] [CrossRef]

- Aggarwal, C.C.; Zhai, C.; Aggarwal, C.C.; Zhai, C. A survey of text classification algorithms. In Mining Text Data; Springer: Berlin/Heidelberg, Germany, 2012; pp. 163–222. [Google Scholar]

- Zeng, Z.; Deng, Y.; Li, X.; Naumann, T.; Luo, Y. Natural language processing for ehr-based computational phenotyping. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018, 16, 139–153. [Google Scholar] [CrossRef] [PubMed]

- Dai, Y.; Liu, J.; Ren, X.; Xu, Z. Adversarial training based multi-source unsupervised domain adaptation for sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 7618–7625. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 3–5 June 2019; pp. 4171–4186. [Google Scholar]

- Farahani, M.; Gharachorloo, M.; Farahani, M.; Manthouri, M. Parsbert: Transformer-based model for persian language understanding. Neural Process. Lett. 2021, 53, 3831–3847. [Google Scholar] [CrossRef]

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523. [Google Scholar] [CrossRef]

- Medsker, L.R.; Jain, L.C. Recurrent Neural Networks: Design and Applications; CRC Press: Boca Raton, FL, USA, 1999. [Google Scholar]

- Liu, P.; Qiu, X.; Huang, X. Recurrent neural network for text classification with multi-task learning. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI), New York, NY, USA, 9–15 July 2016; AAAI Press: Washington, DO, USA, 2016; pp. 2873–2879. [Google Scholar]

- Luo, Y. Recurrent neural networks for classifying relations in clinical notes. J. Biomed. Inform. 2017, 72, 85–95. [Google Scholar] [CrossRef]

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Tai, K.S.; Socher, R.; Manning, C.D. Improved semantic representations from tree-structured long short-term memory networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015. [Google Scholar]

- Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11. [Google Scholar] [CrossRef]

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014. [Google Scholar]

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11. [Google Scholar]

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph Attention Networks. Stat 2018, 1050, 4. [Google Scholar]

- Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How Powerful are Graph Neural Networks? In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Yao, L.; Mao, C.; Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27–28 January 2019; Volume 33, pp. 7370–7377. [Google Scholar]

- Han, S.C.; Yuan, Z.; Wang, K.; Long, S.; Poon, J. Understanding Graph Convolutional Networks for Text Classification. arXiv 2022, arXiv:2203.16060. [Google Scholar]

- Lin, Y.; Meng, Y.; Sun, X.; Han, Q.; Kuang, K.; Li, J.; Wu, F. BertGCN: Transductive Text Classification by Combining GNN and BERT. In Proceedings of the Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online, 1–6 August 2021; pp. 1456–1462. [Google Scholar]

- Chiang, W.-L.; Liu, X.; Si, S.; Li, Y.; Bengio, S.; Hsieh, C. Cluster-GCN: An efficient algorithm for training deep and large graph convolutional networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 257–266. [Google Scholar]

- Karypis, G.; Kumar, V. A fast and high quality multilevel scheme for partitioning irregular graphs. SIAM J. Sci. Comput. 1998, 20, 359–392. [Google Scholar] [CrossRef]

- Dashtipour, K.; Gogateb, M.; Lia, J.; Jiangc, F.; Kongc, B.; Hussain, A. A Hybrid Persian Sentiment Analysis Framework: Integrating Dependency Grammar Based Rules and Deep Neural Networks. Neurocomputing 2020, 380, 1–10. [Google Scholar] [CrossRef]

- Ghasemi, R.; Ashrafi Asli, S.A.; Momtazi, S. Deep Persian sentiment analysis: Cross-lingual training for low-resource languages. J. Inf. Sci. 2022, 48, 449–462. [Google Scholar] [CrossRef]

- Dai, Y.; Shou, L.; Gong, M.; Xia, X.; Kang, Z.; Xu, Z.; Jiang, D. Graph fusion network for text classification. Knowl.-Based Syst. 2022, 236, 107659. [Google Scholar] [CrossRef]

References

- Li, Q.; Peng, H.; Li, J.; Xia, C.; Yang, R.; Sun, L.; Yu, P.S.; He, L. A Survey on Text Classification: From Traditional to Deep Learning. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–41.

- Zhang, L.; Wang, S.; Liu, B. Deep learning for sentiment analysis: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Dis. 2018, 8, e1253.

- Aggarwal, C.C.; Zhai, C.; Aggarwal, C.C.; Zhai, C. A survey of text classification algorithms. In Mining Text Data; Springer: Berlin/Heidelberg, Germany, 2012; pp. 163–222.

- Zeng, Z.; Deng, Y.; Li, X.; Naumann, T.; Luo, Y. Natural language processing for ehr-based computational phenotyping. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018, 16, 139–153.

- Dai, Y.; Liu, J.; Ren, X.; Xu, Z. Adversarial training based multi-source unsupervised domain adaptation for sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 7618–7625.

- Han, S.C.; Yuan, Z.; Wang, K.; Long, S.; Poon, J. Understanding Graph Convolutional Networks for Text Classification. arXiv 2022, arXiv:2203.16060.

- Yao, L.; Mao, C.; Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27–28 January 2019; Volume 33, pp. 7370–7377.

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

- Chiang, W.-L.; Liu, X.; Si, S.; Li, Y.; Bengio, S.; Hsieh, C. Cluster-GCN: An efficient algorithm for training deep and large graph convolutional networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 257–266.

- Karypis, G.; Kumar, V. A fast and high quality multilevel scheme for partitioning irregular graphs. SIAM J. Sci. Comput. 1998, 20, 359–392.

More