- infrared and visible image fusion

- image fusion

- multi-scale decomposition

- compressed sensing

1. Introduction

Image fusion is the process of combining data from multiple sensors into a single image. It serves as an enhancement strategy, producing an image that is richer in information than any single source. Image fusion technology has developed alongside continuing advances in science and technology, driven by the fact that an image carrying only one kind of information can no longer meet users' needs[1]. Its main branches are infrared and visible image fusion[2], multi-focus image fusion, medical image fusion, and remote sensing image fusion.

Of these four branches, infrared and visible image fusion is among the most commonly used. Visible-light images have high resolution and detailed texture, and they match human visual perception most closely, resembling what people see in daily life. However, they are severely degraded by occlusion, bad weather, and other interference. Infrared images capture thermal targets even in challenging conditions such as heavy rain or smoke, which makes them well suited to target detection and recognition. Their disadvantages are low resolution, blur, and poor contrast.

In practical applications, combining infrared and visible images solves a range of problems. For example, operators must sometimes monitor a large number of visible and infrared images of the same scene simultaneously, each serving its own purpose. It is very difficult for a human to integrate the information just by looking back and forth between the two kinds of image. In scenes with complex backgrounds, infrared images can overcome the limitations of visible images, capture target information at night or in low illumination, and improve target recognition. Fusing infrared and visible images therefore greatly improves workflow efficiency and convenience. Infrared and visible image fusion is also widely used in night vision, biometric recognition, detection, and tracking[4], which underlines the importance of research in this area.

2. Application of night vision

The thermal radiation information of the target object or scene is usually converted to a false-color image because the human visual system is more sensitive to color than to grayscale. With color transfer technology, the resulting color image takes on a realistic daytime appearance, which makes the scene more intuitive and easier for the viewer to interpret. Figure 1 shows an example of fusing visible and infrared images at night to achieve color night vision.

Figure 1. Example of infrared and visible image fusion for color vision at night. (a) visible light images; (b) infrared images; (c) reference images; (d) fused images.

Human vision responds less strongly to grayscale images than to color images. The human eye can distinguish thousands of colors but only about 100 gray levels. Colorizing grayscale images is therefore worthwhile, and fusion methods for infrared and visible images with color contrast enhancement have been widely adopted in military equipment[3]. In addition, the rapid growth of multi-band infrared and night vision systems has increased interest in the ergonomics of color fusion of signals from multiple image sensors.
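The false-color and color-transfer idea described in this section can be illustrated with a minimal sketch. It is not the method of any cited work. The function names `false_color_fuse` and `transfer_color_statistics`, the channel assignment, and the use of per-channel mean and standard deviation matching in RGB are all assumptions made for illustration. Published color transfer methods often work in a decorrelated color space; RGB is used here only to keep the example short. The inputs are assumed to be registered grayscale images with values in [0, 1].

```python
import numpy as np


def false_color_fuse(visible, infrared):
    """Combine two registered grayscale images (floats in [0, 1]) into an RGB array."""
    # Assumed channel assignment, chosen only for illustration:
    # infrared drives red, visible drives green, and blue keeps the
    # detail that is brighter in the visible image than in the infrared one.
    blue = np.clip(visible - infrared, 0.0, 1.0)
    return np.stack([infrared, visible, blue], axis=-1)


def transfer_color_statistics(source, reference, eps=1e-6):
    """Shift and scale each channel of source to the reference mean and std."""
    src_mean = source.mean(axis=(0, 1))
    src_std = source.std(axis=(0, 1))
    ref_mean = reference.mean(axis=(0, 1))
    ref_std = reference.std(axis=(0, 1))
    # Normalize each channel, then impose the reference statistics.
    matched = (source - src_mean) / (src_std + eps) * ref_std + ref_mean
    return np.clip(matched, 0.0, 1.0)


if __name__ == "__main__":
    # Synthetic stand-ins for a night-time image pair and a daytime reference.
    rng = np.random.default_rng(0)
    visible = rng.random((64, 64))
    infrared = rng.random((64, 64))
    reference = np.clip(rng.normal(0.5, 0.05, (64, 64, 3)), 0.0, 1.0)

    fused = false_color_fuse(visible, infrared)
    colored = transfer_color_statistics(fused, reference)
    print(colored.shape, float(colored.min()), float(colored.max()))
```

In this sketch the reference image plays the role of panel (c) in Figure 1: it supplies the daytime color statistics that the false-color image is pushed toward.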

3. Application in the field of biometrics

Face recognition research is progressing rapidly. Face recognition based on visible images is highly developed and has achieved great success[4]. In low light, however, the recognition rate of visible-image methods drops, whereas thermal infrared face recognition can still perform well. Figure 2 shows face images captured in infrared and visible light.

Figure 2. Examples of infrared and visible face images. (a) visible light images; (b) infrared images.

Although face recognition based on visible images has been well studied, its practical deployment still faces significant problems. For example, recognition performance can be strongly affected by changes in lighting, facial expression, background, and other conditions in real scenes. Infrared images can supply information that is hidden in visible-light images. In recent years, infrared and visible image fusion based on biometric optimization algorithms has seen growing use. At the cost of additional computation, this approach can improve identification accuracy and provide more complementary data for biometrics. Infrared and visible image fusion is likely to be applied more widely in biometrics in the future.

However, the growing use of facial recognition has also raised ethical and privacy concerns. For example, while surveillance systems in public places contribute to public safety, they also raise the question of whether people's facial information could be stolen and misused by third parties. The use of facial recognition by governments in various countries to monitor citizens' activities has raised concerns about abuse and human rights violations.

4. Application in the field of detection and tracking

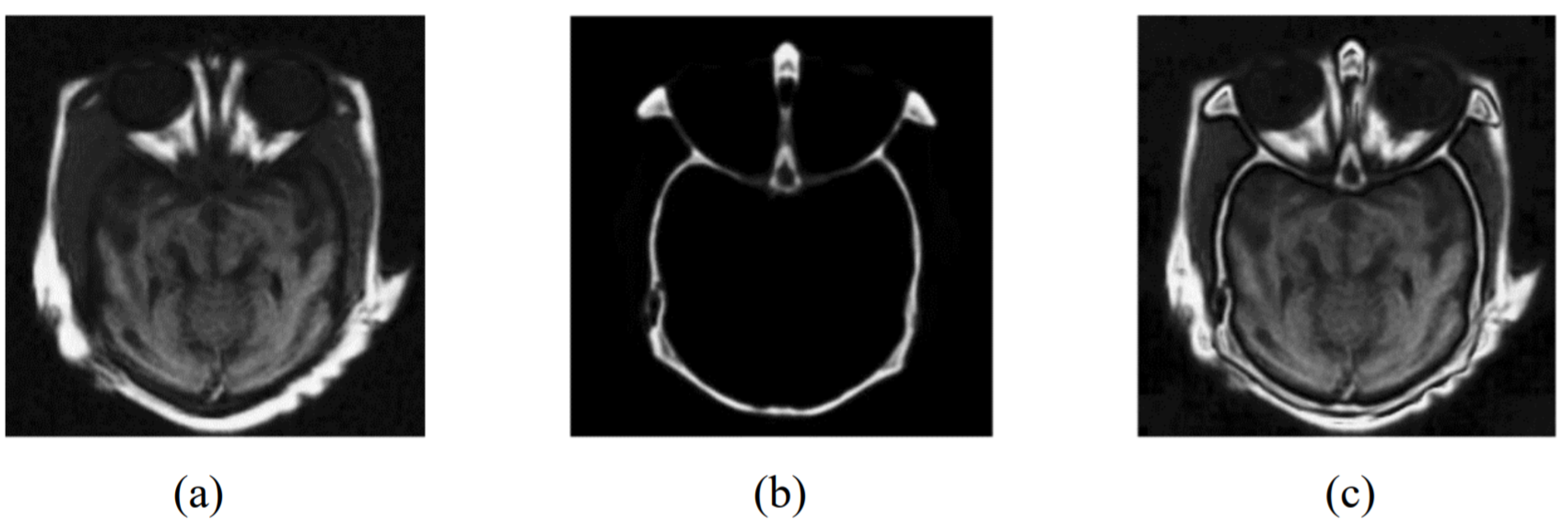

5. Application in the field of medical diagnosis

6. Application in the field of autonomous vehicles

References

- Zhang, H.; Xu, H.; Tian, X.; Jiang, J.; Ma, J. Image fusion meets deep learning: A survey and perspective. Inf. Fusion 2021, 76, 323–336.

- Ma, J.; Ma, Y.; Li, C. Infrared and visible image fusion methods and applications: A survey. Inf. Fusion 2019, 45, 153–178.

- Muller, A.C.; Narayanan, S. Cognitively-engineered multisensor image fusion for military applications. Inf. Fusion 2009, 10, 137–149.

- Kong, S.G.; Heo, J.; Abidi, B.R.; Paik, J.; Abidi, M.A. Recent advances in visual and infrared face recognition—A review. Comput. Vis. Image Underst. 2005, 97, 103–135.

- Bulanon, D.; Burks, T.; Alchanatis, V. Image fusion of visible and thermal images for fruit detection. Biosyst. Eng. 2009, 103, 12–22.

- Elguebaly, T.; Bouguila, N. Finite asymmetric generalized Gaussian mixture models learning for infrared object detection. Comput. Vis. Image Underst. 2013, 117, 1659–1671.

- Liu, H.; Sun, F. Fusion tracking in color and infrared images using sequential belief propagation. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 2259–2264.

- Wellington, S.L.; Vinegar, H.J. X-ray computerized tomography. J. Pet. Technol. 1987, 39, 885–898.

- Degen, C.; Poggio, M.; Mamin, H.; Rettner, C.; Rugar, D. Nanoscale magnetic resonance imaging. Proc. Natl. Acad. Sci. USA 2009, 106, 1313–1317.

- Gambhir, S.S. Molecular imaging of cancer with positron emission tomography. Nat. Rev. Cancer 2002, 2, 683–693.

- Li, G.; Qian, X.; Qu, X. SOSMaskFuse: An Infrared and Visible Image Fusion Architecture Based on Salient Object Segmentation Mask. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10118–10137.

- Choi, J.D.; Kim, M.Y. A sensor fusion system with thermal infrared camera and LiDAR for autonomous vehicles and deep learning based object detection. ICT Express 2023, 9, 222–227.
