
Please note this is a comparison between Version 2 by Sirius Huang and Version 1 by Bader Alsharif.

Historically, individuals with hearing impairments have been neglected and have lacked the tools needed for effective communication. A sign language recognition system built on deep learning technology can play a vital role in interpreting sign language for hearing individuals and vice versa, easing communication between deaf and hearing people.

- image-based

- American sign language

- deep learning

- transfer learning

1. Introduction

Throughout history, humans have employed a variety of communication techniques, including gesturing, sounds, drawing, writing, and speaking. For people who are deaf or have hearing impairments, however, sign language is the primary means of communicating and interacting with others, overcoming the barriers that hearing loss places on verbal communication. Because of these communication barriers, people with such disabilities have fewer opportunities for development. Sign language is a spontaneous non-verbal language that uses manual gestures, facial expressions, and body language to convey messages and meaning. Signs vary from one country to another, although sign languages share some similarities. Unfortunately, there is no universal sign language that can be used by all people with hearing impairments around the world [1]. According to the World Health Organization’s (WHO) most recent research, 5% of the world’s population (432 million adults and 34 million children) have disabling hearing loss, and more than 1 billion people are at risk of hearing loss due to extended and excessive exposure to loud sounds [2]. Hearing loss, particularly when it occurs early in life, can also impair the ability to speak. The large number of people with deafness and hearing loss has drawn the attention of many researchers and developers in speech recognition and other multidisciplinary fields to work on assisting people with hearing impairments. Their goal is to make daily communication and social interaction easier for people with hearing disabilities. Consequently, with the rapidly growing deaf community, building a sign language recognition system using deep learning technology plays a vital role in interpreting sign language for hearing individuals and vice versa. Such a system would ease communication between deaf and hearing people.
As a result, people with hearing impairments will have the opportunity to become more engaged in society, developing social interaction and relationships [3,4]. Nowadays, the sole means for people with hearing impairments to communicate with hearing people is through interpreters. However, hiring interpreters with expertise in interpreting for the deaf is very costly because such interpreters are in short supply. There are also several obstacles to implementing a sign language recognition system for the deaf and hard-of-hearing community that should be discussed. Firstly, not all hearing-impaired individuals use sign language as a method of communication, which may leave them feeling isolated and depressed. Secondly, there are more than 200 different sign languages and dialects across countries, which may delay the implementation of a sign language recognition system that is applicable in various countries [4,5]. Lastly, not everyone is proficient in using modern technology due to a lack of education and development, which can be neglected. Further studies should be directed at understanding and addressing the obstacles that deaf and hard-of-hearing people encounter and that hinder their societal engagement [6].

2. Sign Language Recognition Techniques

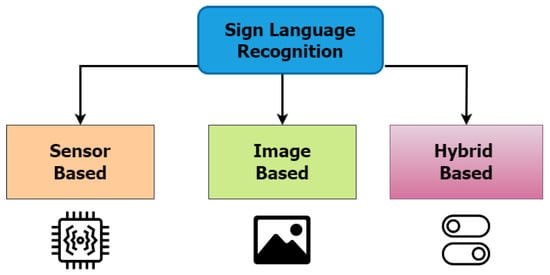

This section reviews related publications on sign language recognition techniques. According to [7,8,9,10], a sign language recognition system can be implemented using a sensor-based approach, an image-based approach, or a combination of both (hybrid), as shown in Figure 1.

Figure 1. Main approaches for sign language recognition system.

References

- Zwitserlood, I.; Verlinden, M.; Ros, J.; Van Der Schoot, S.; Netherlands, T. Synthetic signing for the deaf: Esign. In Proceedings of the Conference and Workshop on Assistive Technologies for Vision and Hearing Impairment, CVHI, Granada, Spain, 29 June–2 July 2004.

- World Health Organization. Deafness and Hearing Loss. 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 8 November 2022).

- Alsaadi, Z.; Alshamani, E.; Alrehaili, M.; Alrashdi, A.A.D.; Albelwi, S.; Elfaki, A.O. A real time Arabic sign language alphabets (ArSLA) recognition model using deep learning architecture. Computers 2022, 11, 78.

- Alsharif, B.; Ilyas, M. Internet of Things Technologies in Healthcare for People with Hearing Impairments. In Proceedings of the IoT and Big Data Technologies for Health Care: Third EAI International Conference, IoTCare 2022, Virtual Event, 12–13 December 2022; Proceedings. Springer: Berlin/Heidelberg, Germany, 2023; pp. 299–308.

- Farooq, U.; Rahim, M.S.M.; Sabir, N.; Hussain, A.; Abid, A. Advances in machine translation for sign language: Approaches, limitations, and challenges. Neural Comput. Appl. 2021, 33, 14357–14399.

- Latif, G.; Mohammad, N.; AlKhalaf, R.; AlKhalaf, R.; Alghazo, J.; Khan, M. An automatic Arabic sign language recognition system based on deep CNN: An assistive system for the deaf and hard of hearing. Int. J. Comput. Digit. Syst. 2020, 9, 715–724.

- Mummadi, C.K.; Philips Peter Leo, F.; Deep Verma, K.; Kasireddy, S.; Scholl, P.M.; Kempfle, J.; Van Laerhoven, K. Real-time and embedded detection of hand gestures with an IMU-based glove. Informatics 2018, 5, 28.

- Elons, A.; Ahmed, M.; Shedid, H.; Tolba, M. Arabic sign language recognition using leap motion sensor. In Proceedings of the 2014 9th International Conference on Computer Engineering & Systems (ICCES), Cairo, Egypt, 21–23 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 368–373.

- Kammoun, S.; Darwish, D.; Althubeany, H.; Alfull, R. ArSign: Toward a mobile based Arabic sign language translator using LMC. In Proceedings of the Universal Access in Human–Computer Interaction. Applications and Practice: 14th International Conference, UAHCI 2020, Held as Part of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Proceedings, Part II 22; Springer: Berlin/Heidelberg, Germany, 2020; pp. 92–101.

- Ahmed, M.A.; Zaidan, B.B.; Zaidan, A.A.; Salih, M.M.; Lakulu, M.M.B. A review on systems-based sensory gloves for sign language recognition state of the art between 2007 and 2017. Sensors 2018, 18, 2208.

- El-Alfy, E.S.M.; Luqman, H. A comprehensive survey and taxonomy of sign language research. Eng. Appl. Artif. Intell. 2022, 114, 105198.

- Alzohairi, R.; Alghonaim, R.; Alshehri, W.; Aloqeely, S. Image based Arabic sign language recognition system. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 3.

- Al Ani, L.A.; Al Tahir, H.S. Classification Performance of TM Satellite Images. Al-Nahrain J. Sci. 2020, 23, 62–68.

- Nazari, K.; Ebadi, M.J.; Berahmand, K. Diagnosis of alternaria disease and leafminer pest on tomato leaves using image processing techniques. J. Sci. Food Agric. 2022, 102, 6907–6920.

- Mohandes, M.; Liu, J.; Deriche, M. A survey of image-based arabic sign language recognition. In Proceedings of the 2014 IEEE 11th International Multi-Conference on Systems, Signals & Devices (SSD14), Barcelona, Spain, 11–14 February 2014; pp. 1–4.

- Al-Obodi, A.H.; Al-Hanine, A.M.; Al-Harbi, K.N.; Al-Dawas, M.S.; Al-Shargabi, A.A. A Saudi Sign Language recognition system based on convolutional neural networks. Build. Serv. Eng. Res. Technol. 2020, 13, 3328–3334.

- Hayani, S.; Benaddy, M.; El Meslouhi, O.; Kardouchi, M. Arab sign language recognition with convolutional neural networks. In Proceedings of the 2019 International Conference of Computer Science and Renewable Energies (ICCSRE), Agadir, Morocco, 22–24 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4.

- Alawwad, R.A.; Bchir, O.; Ismail, M.M.B. Arabic sign language recognition using Faster R-CNN. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 3.

- Vanaja, S.; Preetha, R.; Sudha, S. Hand Gesture Recognition for Deaf and Dumb Using CNN Technique. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4.

- Pan, T.Y.; Lo, L.Y.; Yeh, C.W.; Li, J.W.; Liu, H.T.; Hu, M.C. Real-time sign language recognition in complex background scene based on a hierarchical clustering classification method. In Proceedings of the 2016 IEEE Second International Conference on Multimedia Big Data (BigMM), Taipei, Taiwan, 20–22 April 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 64–67.

- Almasre, M.A.; Al-Nuaim, H. A real-time letter recognition model for Arabic sign language using kinect and leap motion controller v2. Int. J. Adv. Eng. Manag. Sci. 2016, 2, 239469.

- Ibrahim, N.B.; Selim, M.M.; Zayed, H.H. An automatic Arabic sign language recognition system (ArSLRS). J. King Saud Univ.-Comput. Inf. Sci. 2018, 30, 470–477.

- Kasapbaşi, A.; Elbushra, A.E.A.; Omar, A.H.; Yilmaz, A. DeepASLR: A CNN based human computer interface for American Sign Language recognition for hearing-impaired individuals. Comput. Methods Programs Biomed. Update 2022, 2, 100048.

- AlKhuraym, B.Y.; Ismail, M.M.B.; Bchir, O. Arabic sign language recognition using lightweight cnn-based architecture. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 4.

- Cayamcela, M.E.M.; Lim, W. Fine-tuning a pre-trained convolutional neural network model to translate American sign language in real-time. In Proceedings of the 2019 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 18–21 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 100–104.

- Ma, Y.; Xu, T.; Kim, K. Two-Stream Mixed Convolutional Neural Network for American Sign Language Recognition. Sensors 2022, 22, 5959.
