Triple Modular Redundancy (TMR) has traditionally been used to ensure complete tolerance to a single fault(s) or a faulty processing unit, where the processing unit may be a circuit or a system. However, TMR incurs more than 200% overhead in area and power compared to a single processing unit. Hence, alternative redundancy approaches have been proposed in the literature to mitigate the design overheads associated with TMR, but they provide only partial or moderate fault tolerance. This research presents a new fault-tolerant design approach based on approximate computing, called FAC, that has the same fault tolerance as TMR and achieves significant reductions in the design metrics of the physical implementation. FAC is ideally suited for error-tolerant applications, for example, digital image, video, and audio processing. The performance of TMR and FAC has been evaluated for a digital image processing application, and the image processing results obtained confirm the usefulness of FAC. When an example processing unit is implemented using a 28-nm CMOS technology, FAC achieves a 15.3% reduction in delay, a 19.5% reduction in area, and a 24.7% reduction in power compared to TMR.

- fault tolerance

- triple modular redundancy

- approximate computing

- digital circuits

- logic design

- arithmetic circuits

1. Introduction

2. Literature Survey

3. Proposed Redundancy Approach

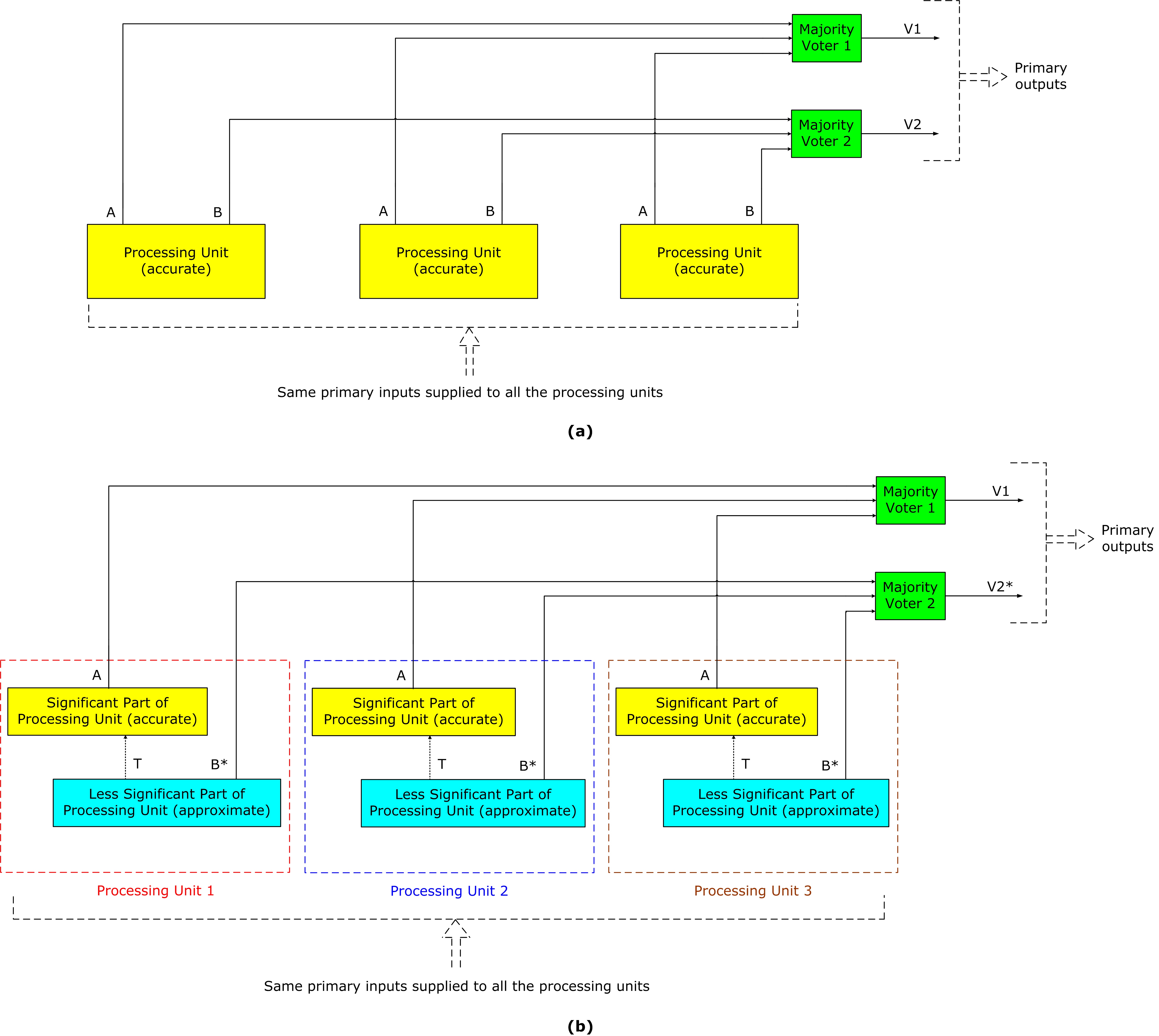

We introduce a novel Fault-tolerant design approach based on Approximate Computing, abbreviated as FAC. Before delving into the details of FAC, we briefly discuss the merits of approximate computing. Approximate computing is a promising alternative to traditional accurate computing, especially for inherently error-tolerant applications. By accepting a certain compromise in computation accuracy, approximate computing offers advantages such as reduced area, lower power dissipation, higher processing speed, and improved energy efficiency [13][14]. Its benefits have been demonstrated for various practical applications that exhibit inherent error resilience, such as multimedia, digital signal processing, computer graphics, computer vision, neuromorphic computing, and hardware implementations of AI, machine learning, and neural networks [15]. Consequently, approximate computing is an appealing basis for designing fault-tolerant processing units, especially in resource-constrained environments such as space, where area efficiency, low power dissipation, high processing speed, and energy efficiency are critical. Previous works [8][9][10][11] have suggested alternative (approximate or accurate) implementations of redundancy. However, as discussed in Section 2, these alternative approaches suffer from drawbacks that make them unlikely to be used in practical applications. In contrast, the proposed FAC could be a practical alternative. FAC is generic and can be applied to N-modular redundancy (NMR) of any order. Nonetheless, we consider a 3-tuple version of FAC to facilitate a direct comparison with TMR. To highlight the distinction between TMR and FAC, their representative architectures are illustrated in Figures 1a and 1b, respectively. In TMR, three identical processing units are used, as shown in Figure 1a, and the processing units are all accurate.
The majority voters utilized in TMR are also accurate. The outputs of each processing unit are represented by A and B, with output A assumed to be more significant than output B. This assumption is reasonable, particularly for arithmetic circuits, whose output bits range in significance from most significant to least significant. The outputs A and B of the three processing units are voted on by majority voters 1 and 2, respectively. A 3-input majority voter realizes the Boolean function F = XY + YZ + XZ, where F denotes the output and X, Y, and Z denote the inputs. Various majority voter designs relevant to TMR are available [16][17], and the majority voter is typically assumed to be perfect. If the majority voter cannot be assumed to be perfect, redundancy can be applied to the voter as well, just as to the processing units. The primary outputs of the TMR implementation, denoted V1 and V2, are the outputs of majority voters 1 and 2, respectively. By triplicating the processing units and voting on their outputs, a TMR implementation masks a single fault(s) or a faulty processing unit, because the two fault-free processing units always outvote the faulty one.

Figure 1. Example block-level illustrations of (a) TMR architecture, and (b) proposed FAC architecture. B* of (b) may or may not be equal to B of (a), depending upon the inputs supplied.
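The majority-voting behavior described above can be illustrated with a short behavioral sketch in Python. It applies F = XY + YZ + XZ bitwise to whole output words (equivalent to one voter per output bit, such as voters 1 and 2 for A and B), generalizes it to an odd number of replicas as in NMR, and exhaustively checks that corrupting any bits of one replica never changes the voted result. The 4-bit `processing_unit` is a hypothetical stand-in chosen only for illustration, and the voters are assumed perfect, as in the text.

```python
def majority3(x: int, y: int, z: int) -> int:
    """3-input majority F = XY + YZ + XZ, applied independently to every bit position."""
    return (x & y) | (y & z) | (x & z)


def majority_n(words: list[int], width: int) -> int:
    """Bitwise majority of an odd number of replica outputs (NMR generalization).

    Bit i of the result is 1 when more than half of the words have bit i set.
    """
    if len(words) % 2 == 0:
        raise ValueError("majority voting assumes an odd number of replicas")
    result = 0
    for i in range(width):
        ones = sum((w >> i) & 1 for w in words)
        if ones > len(words) // 2:
            result |= 1 << i
    return result


def processing_unit(a: int, b: int) -> int:
    """Hypothetical 4-bit processing unit used only for illustration: (a + b) mod 16."""
    return (a + b) & 0xF


WIDTH = 4
for a in range(16):
    for b in range(16):
        good = processing_unit(a, b)
        for faulty in range(3):          # index of the faulty replica
            for flip in range(1, 16):    # every nonzero corruption of its output bits
                outputs = [good] * 3
                outputs[faulty] ^= flip
                assert majority3(*outputs) == good
                assert majority_n(outputs, WIDTH) == good
print("A single faulty replica is always outvoted.")
```

With five replicas passed to `majority_n`, up to two faulty replicas are outvoted at every bit position, which corresponds to the quintuple modular redundancy case mentioned in the conclusions.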

Referring to Figure 1b, the proposed redundancy approach (FAC) partitions a processing unit into two parts based on the significance of their outputs with respect to the primary output. The partition is tailored to the specific requirement of an application, so the two parts may be of equal or unequal size. FAC triplicates both the significant and the less significant parts; however, the less significant part of the processing unit is approximated instead of being retained accurately. The constituents of processing units 1, 2, and 3 are depicted within red, blue, and brown dashed boxes in Figure 1b, and all three processing units are identical. The less significant part of each processing unit may or may not be connected to its corresponding significant part, depending on the manner of logic approximation applied to the less significant part. Such connections are represented by dotted black lines in Figure 1b, with the intermediate output denoted as T. Like TMR, FAC can mask any single fault in the significant or less significant part of any processing unit, or tolerate a faulty processing unit. However, because the triplicated less significant parts are approximated, the practical applicability of FAC hinges on two factors: (i) the manner of logic approximation applied to the less significant parts of the processing unit, and (ii) the extent of approximation incorporated in these parts.

In Figure 1b, output A from processing units 1, 2, and 3 is voted on by majority voter 1, resulting in output V1, exactly as in TMR. As mentioned earlier, the triplicated less significant parts of processing units 1, 2, and 3 in FAC are identical but approximate. Consequently, the output B* of each processing unit may or may not be equal to the output B of the accurate processing unit, depending on the inputs supplied. For instance, assume that output B in TMR is generated by a 2-input EXOR gate with inputs X and Y, which outputs 1 if X ≠ Y and 0 if X = Y. If, due to approximation, this EXOR function is replaced by a 2-input OR function in FAC, the OR gate outputs 1 when X ≠ Y or X = Y = 1, and outputs 0 only when X = Y = 0. Thus, the EXOR and OR gates produce the same output when X = Y = 0 or X ≠ Y, but different outputs when X = Y = 1. Hence, B* may or may not be equal to B, depending on the inputs supplied. In FAC, the outputs B* from the less significant parts of processing units 1, 2, and 3 are voted on by majority voter 2, resulting in output V2*. Note that V2* may or may not be equal to V2; V1 and V2* are the primary outputs of an FAC implementation.
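The EXOR-to-OR example can be checked exhaustively with a small Python sketch. For each of the four input combinations, it compares B and B*, then flips one of the three B* copies in turn to model a single fault, confirming that majority voter 2 still outputs the fault-free B*. The function names are illustrative assumptions and are not part of the FAC description.

```python
from itertools import product


def exact_b(x: int, y: int) -> int:
    """Output B of the accurate less significant part (2-input EXOR in the example)."""
    return x ^ y


def approx_b(x: int, y: int) -> int:
    """Output B* of the approximated less significant part (2-input OR replaces EXOR)."""
    return x | y


def majority3(p: int, q: int, r: int) -> int:
    """Majority voter 2: F = PQ + QR + PR."""
    return (p & q) | (q & r) | (p & r)


for x, y in product((0, 1), repeat=2):
    b, b_star = exact_b(x, y), approx_b(x, y)
    # Flip one of the three B* copies in turn to model a single fault.
    for faulty in range(3):
        copies = [b_star] * 3
        copies[faulty] ^= 1
        v2_star = majority3(*copies)
        assert v2_star == b_star  # fault masked; V2* equals the fault-free B*
    status = "same" if b == b_star else "differs"
    print(f"X={x} Y={y}  B={b}  B*={b_star}  V2* vs V2: {status}")
```

Only the X = Y = 1 row reports a difference: the approximation error depends on the inputs, while single-fault masking is unaffected.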

Arithmetic circuits, including adders, multipliers, and dividers, and data paths containing functions such as the discrete cosine transform, finite/infinite impulse response filters, and the sum of absolute differences exhibit varying degrees of significance in their output bits. This characteristic allows such processing units to be partitioned into significant and less significant parts and implemented according to FAC. Depending on the target application, the less significant part of a processing unit can be approximated to a suitable degree. Similarly, logic functions can be redundantly implemented according to FAC; again, the level of logic approximation for the less significant part should be determined based on the specific application.

4. Digital Image Processing Application

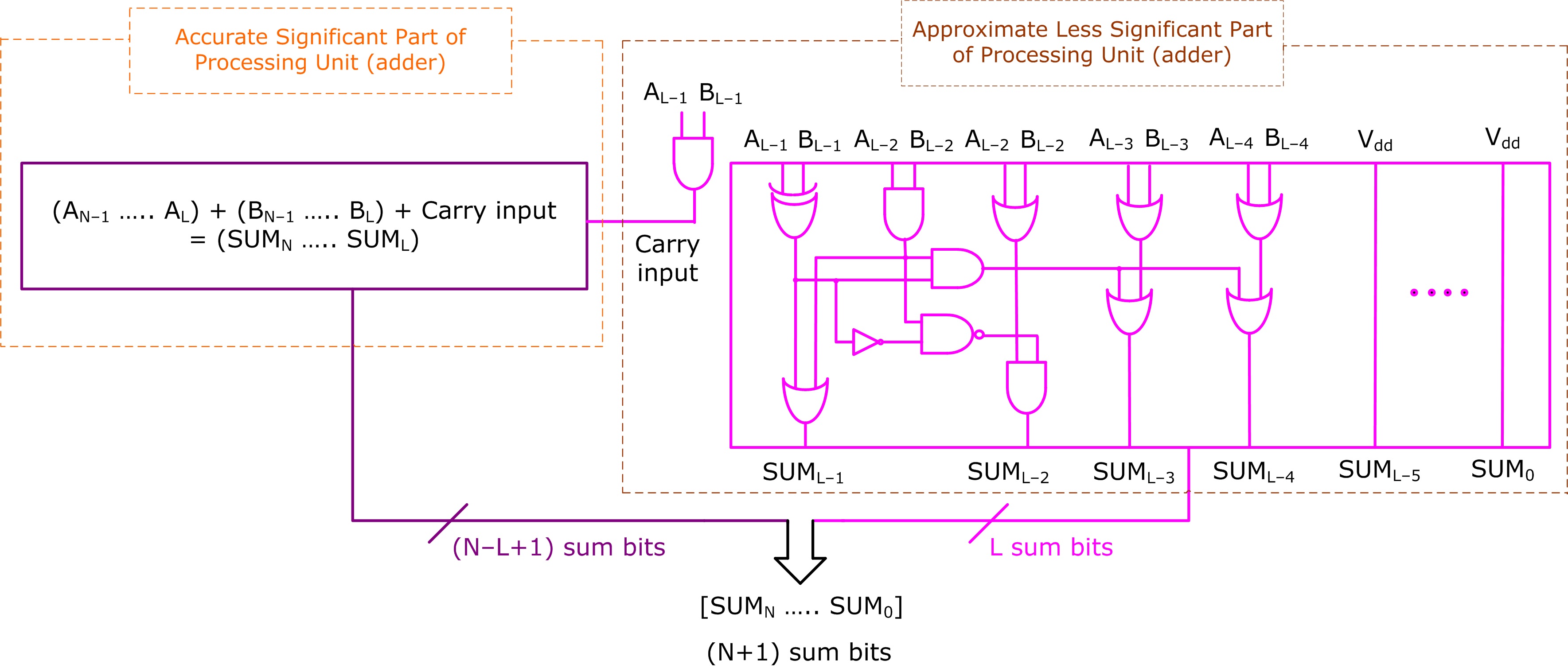

To compare the performance of TMR and the proposed FAC, a digital image processing case study involving the fast Fourier transform (FFT) and inverse fast Fourier transform (IFFT) [18] was considered. A set of 8-bit grayscale images with a spatial resolution of 512 × 512 was randomly chosen for evaluation. Each image was converted into a matrix, subjected to FFT computation, and then reconstructed using IFFT. The FFT and IFFT computations were carried out in integer precision with scaling to ensure that no data loss or overflow occurred. Multiplication was always performed accurately, whereas addition was performed in two separate runs: accurately, using a precise adder, and approximately, using an imprecise adder. The architecture of the imprecise adder [19] used in the FAC approach is depicted in Figure 2. It comprises two parts: a violet section representing the accurate part and a pink section representing the approximate part, marked in Figure 2 for easy comparison with Figure 1b. The accurate part is considered significant, while the approximate part is regarded as less significant. In Figure 2, the adder size is $N$ bits, the approximate part is $L$ bits wide, and the accurate part is $(N-L)$ bits wide. The accurate part adds $(N-L)$ input bits along with a carry input provided by the approximate part and produces $(N-L+1)$ sum bits. In the approximate part, sum bits $\mathrm{SUM}_{L-1}$ to $\mathrm{SUM}_{L-4}$ are produced by reduced logic, while the remaining sum bits, $\mathrm{SUM}_{L-5}$ to $\mathrm{SUM}_0$, are assigned a constant binary 1; for synthesis, each of them is connected to a tie-to-high standard library cell. The value of $L$ is typically determined by the maximum error tolerable for a given application. The adder inputs are represented by $A_{N-1}$ to $A_0$ and $B_{N-1}$ to $B_0$, and the adder output by $\mathrm{SUM}_N$ to $\mathrm{SUM}_0$. Subscripts $(N-1)$ and $0$ indicate the most and least significant bits of the adder inputs, and subscripts $N$ and $0$ indicate the most and least significant bits of the adder's sum output, respectively.
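A behavioral model helps make the accurate/approximate split concrete. The Python sketch below follows the structure described above (an accurate upper part with a carry input from the approximate part, four reduced-logic sum bits, and the remaining L - 4 bits tied to 1), using N = 32 and L = 10 as in the FAC adder of Section 5. The exact reduced logic and carry generation of the adder in [19] are not given here, so the sketch assumes a lower-part-OR style: the four reduced-logic bits are the bitwise OR of the inputs, and the carry into the accurate part is A[L-1] AND B[L-1]. It approximates the concept and is not the published gate-level design.

```python
import random


def approx_add(a: int, b: int, n: int = 32, l: int = 10) -> int:
    """Behavioral stand-in for an N-bit adder with an accurate (N-L)-bit upper part
    and an approximate L-bit lower part. Returns the (N+1)-bit sum SUM[N..0].

    ASSUMPTIONS (not the exact logic of the cited adder):
      * carry into the accurate part = A[L-1] AND B[L-1]
      * reduced-logic sum bits L-1 .. L-4 = bitwise OR of the input bits
      * sum bits L-5 .. 0 tied to constant 1, as described in the text
    """
    if l < 5:
        raise ValueError("model assumes 4 reduced-logic bits plus at least 1 tied-high bit")
    mask_n = (1 << n) - 1
    a &= mask_n
    b &= mask_n
    # Accurate part: (N-L)-bit addition with carry-in, giving N-L+1 sum bits.
    carry_in = ((a >> (l - 1)) & 1) & ((b >> (l - 1)) & 1)
    upper = (a >> l) + (b >> l) + carry_in
    # Approximate part: 4 reduced-logic bits on top, remaining L-4 bits tied high.
    tied_high = (1 << (l - 4)) - 1
    reduced = ((a | b) >> (l - 4)) & 0xF
    lower = (reduced << (l - 4)) | tied_high
    return (upper << l) | lower


random.seed(0)
N, L = 32, 10
worst = 0
for _ in range(10_000):
    a, b = random.getrandbits(N), random.getrandbits(N)
    err = approx_add(a, b, N, L) - (a + b)
    worst = max(worst, abs(err))
    assert abs(err) < 2 ** (L + 1)  # error confined to the low-order bit positions
print("worst |error| over the random sample:", worst)
```

Because only the L low-order sum bits and the carry into the accurate part are approximated, the error magnitude in this model always stays below 2^(L+1), which the random check asserts; the error of the actual adder in [19] may differ.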

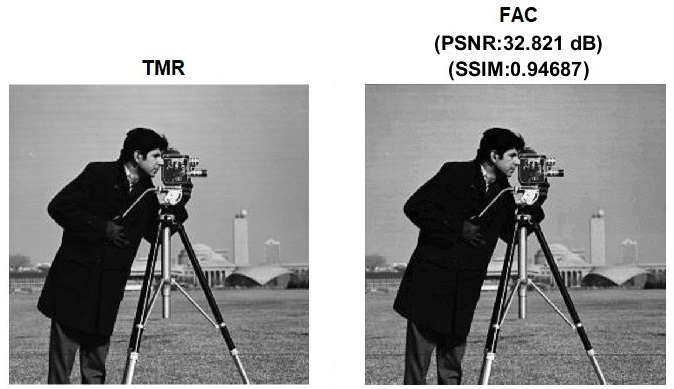

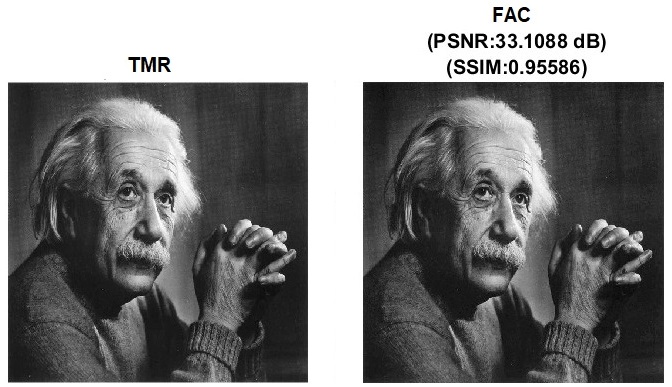

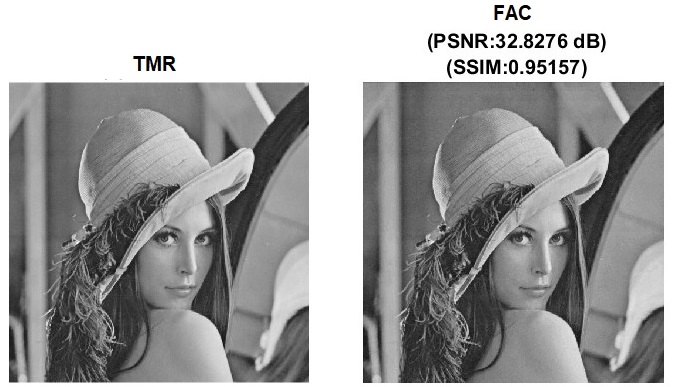

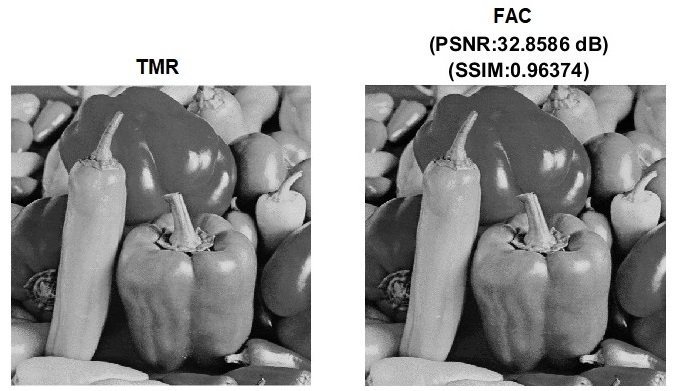

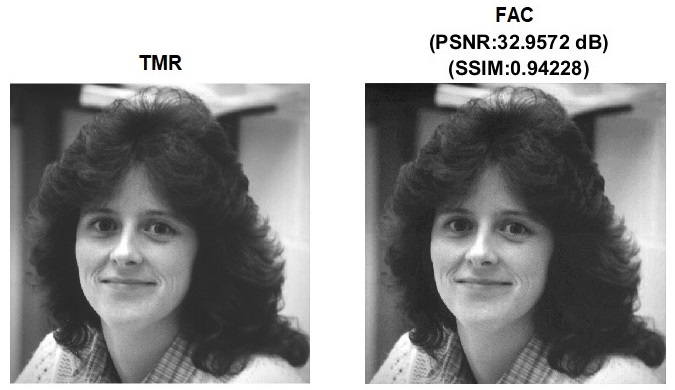

Figure 3. Results of digital image processing according to TMR and the proposed FAC for a sample of the test images. PSNR = ∞ and SSIM = 1 for the images processed according to TMR. FAC consistently yields images with PSNR > 30 dB and SSIM close to unity, which are acceptable.
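PSNR, one of the two quality metrics reported in Figure 3, is computed for 8-bit images as 10·log10(255²/MSE), where MSE is the mean squared error between a reference image and the image under test. A minimal pure-Python sketch is given below; taking the accurately processed image as the reference is an assumption consistent with TMR yielding PSNR = ∞. SSIM is omitted because it requires windowed local statistics.

```python
import math


def psnr_8bit(reference: list[list[int]], test: list[list[int]]) -> float:
    """PSNR in dB between two equally sized 8-bit grayscale images (peak value 255)."""
    if len(reference) != len(test) or any(len(r) != len(t) for r, t in zip(reference, test)):
        raise ValueError("images must have the same dimensions")
    squared_error = 0
    count = 0
    for row_ref, row_test in zip(reference, test):
        for p, q in zip(row_ref, row_test):
            squared_error += (p - q) ** 2
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images, as reported for TMR
    return 10 * math.log10(255 ** 2 / mse)


reference = [[10, 20], [30, 40]]
off_by_one = [[11, 21], [31, 41]]
print(psnr_8bit(reference, reference))             # inf
print(round(psnr_8bit(reference, off_by_one), 2))  # 48.13
```

Rearranging the formula, PSNR > 30 dB corresponds to MSE < 255²/1000 ≈ 65.0, i.e., a root-mean-square pixel error of roughly 8 grey levels.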

5. Implementation and Design Metrics

To physically implement the adders used for digital image processing, we described each of them structurally in Verilog. The adders considered are (i) an accurate 32-bit carry-lookahead adder (CLA) [21], representing a single adder; (ii) a 32-bit TMR adder; and (iii) a 32-bit FAC adder with a 22-bit significant part and a 10-bit less significant part (the 22/10 partition was found to be optimal for digital image processing, as noted in the previous section). The TMR adder used the accurate CLA structure [21], and the accurate part of the FAC adder was realized based on the same CLA structure. All adders were synthesized using a 28-nm CMOS standard digital cell library [22], with a typical low-leakage library specification featuring a 1.05 V supply voltage and a 25 °C operating junction temperature. During simulation and synthesis, a default wire load and a fanout-of-4 drive strength were assigned to all sum bits. Synopsys EDA tools were used for synthesis, simulation, and the estimation of design metrics. Design Compiler was used for synthesis and to estimate the total area of the adders, including cell and interconnect area. To evaluate the performance of the adders, a test bench comprising over one thousand random inputs, applied at 2 ns intervals (500 MHz), was used to simulate their functionality in VCS. The switching activity recorded during functional simulation was used to estimate the total power dissipation with PrimePower, and PrimeTime was used to estimate the critical path delay of each implementation. The design metrics of the adders, including area, power dissipation, and critical path delay, are given in Table 1.

Table 1. Design metrics of the single, TMR, and FAC adders.

| Implementation | Area (µm²) | Delay (ns) | Power (µW) |
|---|---|---|---|
| Single adder (CLA) | 527.45 | 1.13 | 91.7 |
| TMR adder | 1752.43 | 1.24 | 291.8 |
| FAC adder | 1410.06 | 1.05 | 219.8 |
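The percentage reductions quoted in the abstract follow directly from Table 1. The short Python sketch below recomputes them, together with the overhead of the TMR adder relative to the single adder.

```python
# Design metrics copied from Table 1: (area in um^2, delay in ns, power in uW)
METRICS = {
    "Single adder (CLA)": (527.45, 1.13, 91.7),
    "TMR adder": (1752.43, 1.24, 291.8),
    "FAC adder": (1410.06, 1.05, 219.8),
}
NAMES = ("area", "delay", "power")


def reduction_pct(reference: float, value: float) -> float:
    """Percentage by which `value` is smaller than `reference`."""
    return 100.0 * (reference - value) / reference


def overhead_pct(baseline: float, value: float) -> float:
    """Percentage by which `value` exceeds `baseline`."""
    return 100.0 * (value - baseline) / baseline


single, tmr, fac = (METRICS[k] for k in ("Single adder (CLA)", "TMR adder", "FAC adder"))
for name, t, f in zip(NAMES, tmr, fac):
    print(f"FAC vs TMR {name} reduction: {reduction_pct(t, f):.1f}%")
for name, s, t in zip(NAMES, single, tmr):
    print(f"TMR vs single {name} overhead: {overhead_pct(s, t):.1f}%")
```

Running it gives reductions of 19.5% (area), 15.3% (delay), and 24.7% (power), matching the abstract, and TMR overheads of 232.2% (area) and 218.2% (power), consistent with the "more than 200%" figure.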

6. Conclusions

A novel fault-tolerant design approach called FAC was introduced, which has the same fault tolerance as NMR. Specifically, a 3-tuple version of FAC was examined, allowing a direct comparison with TMR. A digital image processing application, representative of error-tolerant applications, was considered as the case study, and the results obtained demonstrate the usefulness of FAC. For the example implementation considered, FAC achieved reductions in all design metrics compared to TMR without compromising fault tolerance. To cope with multiple faults (i.e., more than one corresponding output bit affected or more than one processing unit failing), a higher-order NMR such as quintuple or septuple modular redundancy may have to be used; the corresponding equivalents in the proposed architecture would be 5-tuple and 7-tuple versions of FAC, respectively.

References

- Miskov-Zivanov, N.; Marculescu, D. Multiple transient faults in combinational and sequential circuits: A systematic approach. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2010, 29, 1614–1627.

- Baumann, R.C. Radiation-induced soft errors in advanced semiconductor technologies. IEEE Trans. Device Mater. Reliab. 2005, 5, 305–316.

- Rossi, D.; Omana, M.; Metra, C.; Paccagnella, A. Impact of aging phenomena on soft error susceptibility. In Proceedings of the IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems, Vancouver, BC, Canada, 3–5 October 2011.

- Mahatme, N.N.; Bhuva, B.; Gaspard, N.; Assis, T.; Xu, Y.; Marcoux, P.; Vilchis, M.; Narasimham, B.; Shih, A.; Wen, S.-J.; et al. Terrestrial SER characterization for nanoscale technologies: A comparative study. In Proceedings of the IEEE International Reliability Physics Symposium, Monterey, CA, USA, 19–23 April 2015.

- Balasubramanian, P.; Maskell, D.L. A fault-tolerant design strategy utilizing approximate computing. In Proceedings of the IEEE Region 10 Symposium (TENSYMP), Canberra, Australia, 6–8 September 2023.

- Balasubramanian, P.; Maskell, D.L. FAC: A fault-tolerant design approach based on approximate computing. Electronics 2023, 12, Article #3819.

- Quinn, H.; Graham, P.; Krone, J.; Caffrey, M.; Rezgui, S. Radiation-induced multi-bit upsets in SRAM-based FPGAs. IEEE Trans. Nucl. Sci. 2005, 52, 2455–2461.

- Gomes, I.A.C.; Martins, M.G.A.; Reis, A.I.; Kastensmidt, F.L. Exploring the use of approximate TMR to mask transient faults in logic with low area overhead. Microelectron. Reliab. 2015, 55, 2072–2076.

- Arifeen, T.; Hassan, A.S.; Moradian, H.; Lee, J.A. Input vulnerability-aware approximate triple modular redundancy: Higher fault coverage, improved search space, and reduced area overhead. Electron. Lett. 2019, 54, 934–936.

- Ruano, O.; Maestro, J.A.; Reviriego, P. A methodology for automatic insertion of selective TMR in digital circuits affected by SEUs. IEEE Trans. Nucl. Sci. 2009, 56, 2091–2102.

- Ullah, A.; Reviriego, P.; Pontarelli, S.; Maestro, J.A. Majority voting-based reduced precision redundancy adders. IEEE Trans. Device Mater. Reliab. 2018, 18, 122–124.

- Balasubramanian, P. Analysis of redundancy techniques for electronics design—Case study of digital image processing. Technologies 2023, 11, Article #80.

- Han, J.; Orshansky, M. Approximate computing: An emerging paradigm for energy-efficient design. In Proceedings of the 18th IEEE European Test Symposium, Avignon, France, 27–31 May 2013.

- Venkataramani, S.; Chakradhar, S.T.; Roy, K.; Raghunathan, A. Approximate computing and the quest for computing efficiency. In Proceedings of the 52nd ACM/EDAC/IEEE Design Automation Conference, San Francisco, CA, USA, 8–12 June 2015.

- Mittal, S. A survey of techniques for approximate computing. ACM Comput. Surv. 2016, 48, 1–33.

- Balasubramanian, P.; Mastorakis, N.E. Power, delay and area comparisons of majority voters relevant to TMR architectures. In Proceedings of the 10th International Conference on Circuits, Systems, Signal and Telecommunications, Barcelona, Spain, 13–15 February 2016.

- Balasubramanian, P.; Prasad, K. A fault tolerance improved majority voter for TMR system architectures. WSEAS Transactions on Circuits and Systems 2016, 15, 108–122.

- Zhu, N.; Goh, W.L.; Zhang, W.; Yeo, K.S.; Kong, Z.H. Design of low-power high-speed truncation-error-tolerant adder and its application in digital signal processing. IEEE Trans. VLSI Syst. 2010, 18, 1225–1229.

- Balasubramanian, P.; Nayar, R.; Maskell, D.L. An approximate adder with reduced error and optimized design metrics. In Proceedings of the IEEE Asia Pacific Conference on Circuits and Systems, Penang, Malaysia, 22–26 November 2021.

- Balasubramanian, P.; Nayar, R.; Maskell, D.L.; Mastorakis, N.E. An approximate adder with a near-normal error distribution: Design, error analysis and practical application. IEEE Access 2021, 9, 4518–4530.

- Balasubramanian, P.; Mastorakis, N.E. High-speed and energy-efficient carry look-ahead adder. J. Low Power Electron. Appl. 2022, 12, Article #46.

- Synopsys SAED_EDK32/28_CORE Databook. Revision 1.0.0. January 2012. Available online: https://www.synopsys.com/academic-research/university.html (accessed on 26 April 2023).