Please note this is a comparison between Version 2 by Camila Xu and Version 1 by Robin Chhabra.

The use of Spiking Neural Networks (SNNs), which can capture a model of organisms’ nervous systems, may be justified simply by their unparalleled energy/computational efficiency.

- neuromorphics

- spiking neural networks

- embodied cognition

1. Introduction

Understanding how living organisms function within their surrounding environment reveals key properties essential to designing and operating next-generation intelligent systems. Central to organisms’ complex behavioral interactions with the environment is their efficient information processing through neuronal circuitry. Although biological neurons transfer information in the form of trains of impulses, or spikes, the Artificial Neural Networks (ANNs) most commonly employed in current Artificial Intelligence (AI) practice do not necessarily exhibit such binary behavior. Beyond the scientific curiosity of studying intelligent living organisms, interest in biologically plausible neuronal models, i.e., Spiking Neural Networks (SNNs) that can capture a model of organisms’ nervous systems, may be justified simply by their unparalleled energy/computational efficiency.

Inspired by the spike-based activity of biological neuronal circuitry, several neuromorphic chips, such as Intel’s Loihi chip [1], have emerged. This hardware processes information through distributed spiking activity across analog neurons, which, to some extent, makes it biologically plausible by construction; hence, it can inherently leverage neuroscientific advances in understanding neuronal dynamics to improve computational solutions for, e.g., robotics and AI applications. Because of these processing characteristics, neuromorphic chips differ fundamentally from traditional von Neumann computing machines, where a large energy/time cost is incurred whenever data must be moved between memory and processor. On the other hand, coordinating the parallel and asynchronous activity of a massive SNN on a neuromorphic chip to perform an algorithm, such as a real-time AI task, is non-trivial and requires new software, compilers, and simulations of dynamical systems. The main advantages of such hardware are that (i) computing architectures organized closely after known biological counterparts may offer energy efficiency, (ii) well-known theories in cognitive science can be leveraged to define high-level cognition for artificial systems, and (iii) robotic systems equipped with neuromorphic technologies can serve as an empirical testbench that contributes to research on embodied cognition and to the explainability of SNNs. In particular, this arranged marriage between biological plausibility and computational efficiency, perceived from studying embodied human cognition, has greatly influenced the field of AI.

2. SNNs and Neuromorphic Modeling

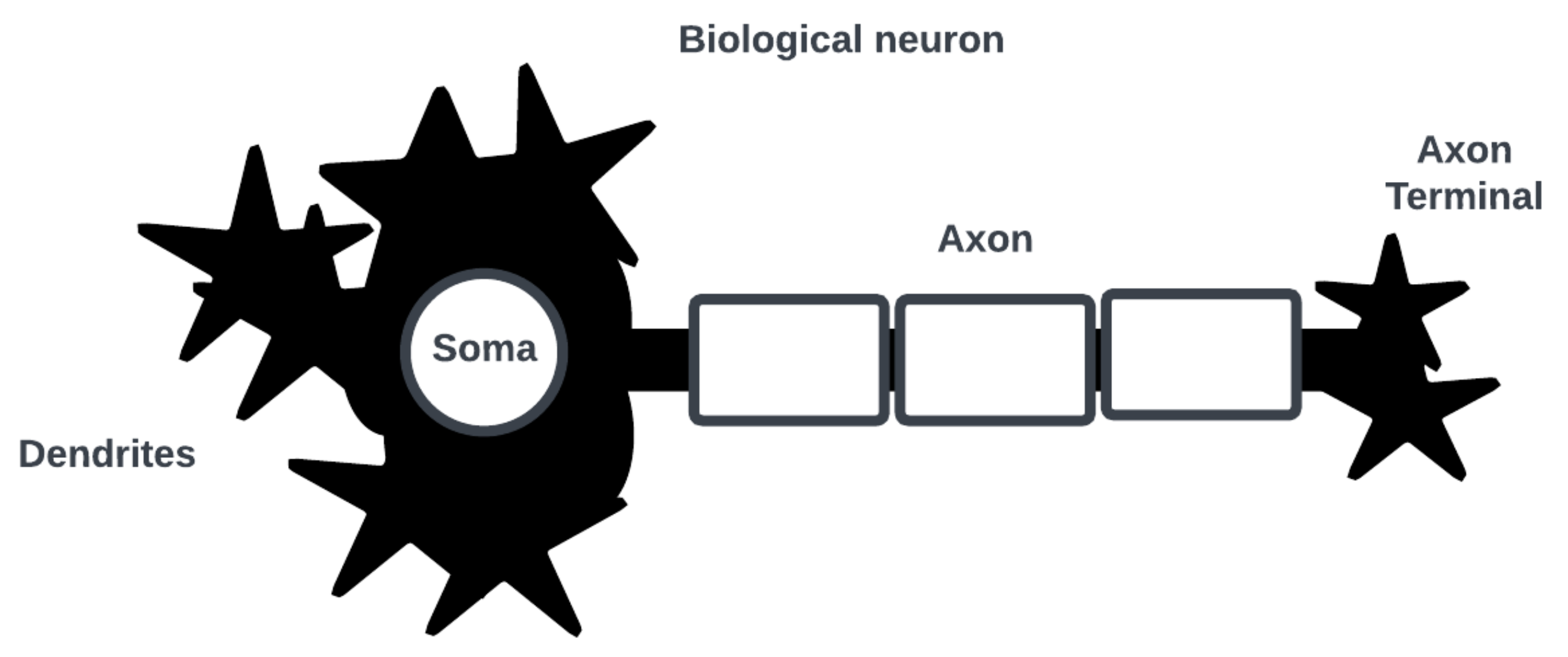

Note that the category of bio-inspired computing is an unsettled one, and it is unclear how such systems can be designed to integrate into existing computing architectures and solutions [2]. Consider the neuron as the basic computational element of an SNN [3]. Figure 1 shows a simplified biological neuron consisting of dendrites, where post-synaptic interactions with other neurons occur; a soma, where an action potential (i.e., a spike in the cell membrane voltage potential) is generated; and an axon with a terminal, where an action potential is triggered for pre-synaptic interactions.

Figure 1. Simplified diagram of a biological neuron and its main parts of interest.

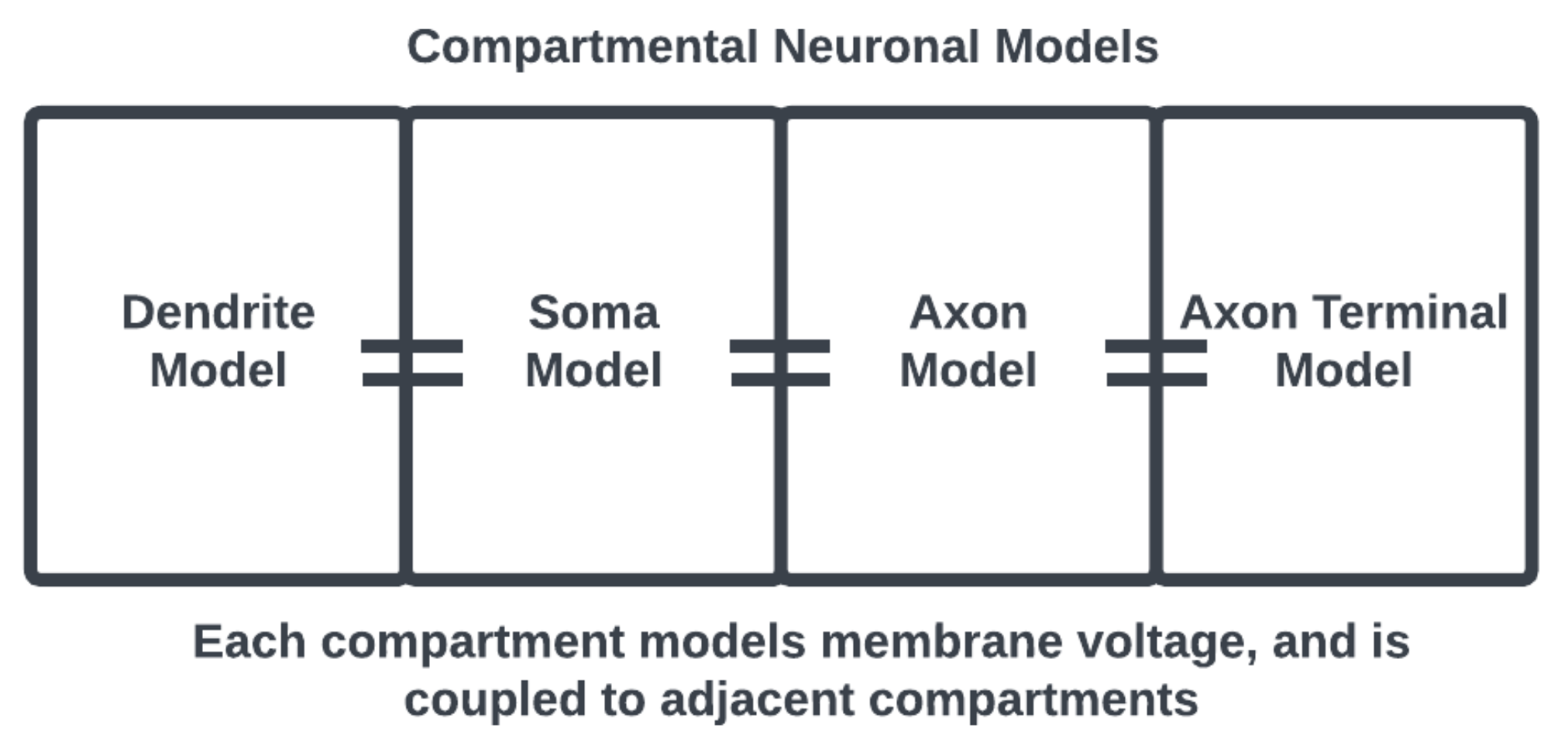

Figure 2. Compartmental neuronal model: the adjacency of compartments reflects the physical structure of a neuron. Compartments may also be prescribed with appropriate subcellular morphology.

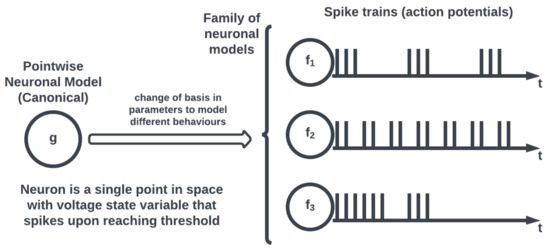

Figure 3. Pointwise neuronal models and canonical equations.
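
As an illustration of what a pointwise model looks like in practice, the following minimal sketch simulates a leaky integrate-and-fire (LIF) neuron, one commonly used pointwise model, with forward-Euler integration. The equations shown in Figure 3 are not reproduced in this text, so the LIF form used here, the function name `simulate_lif`, and all parameter values are assumptions chosen only for illustration.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=-65.0,
                 v_thresh=-50.0, v_reset=-65.0, resistance=10.0):
    """Forward-Euler simulation of tau * dv/dt = -(v - v_rest) + R * I.

    All parameter values are illustrative assumptions (ms, mV, arbitrary
    current units), not values taken from the source.
    """
    v = v_rest
    voltages, spike_times = [], []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential plus drive from the input.
        v += (-(v - v_rest) + resistance * current) * dt / tau
        if v >= v_thresh:
            # Threshold crossing: record a spike and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


# A constant supra-threshold input produces a regular spike train,
# while a weaker input settles below threshold and never spikes.
_, strong_spikes = simulate_lif([2.0] * 200)
_, weak_spikes = simulate_lif([1.0] * 200)
```

The neuron's state is a single membrane voltage, which is what distinguishes a pointwise model from the compartmental model of Figure 2.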

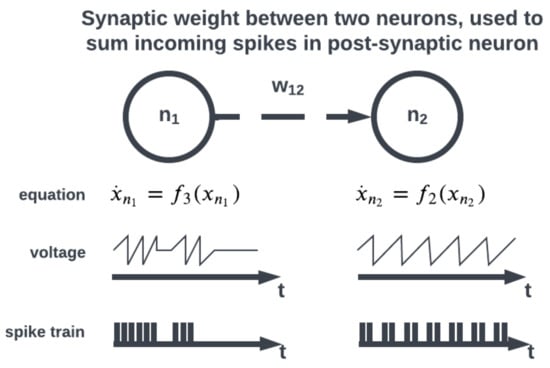

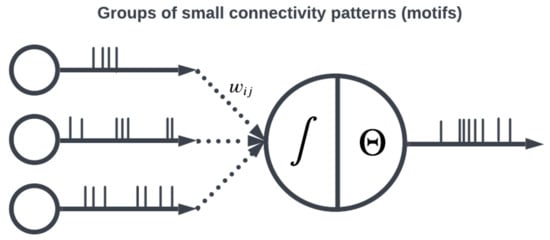

Figure 4. Incoming spikes between neurons are summed using a synaptic weight.
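
The weighted summation described in this caption can be sketched as follows. This is a minimal illustration, assuming spikes are represented as 0/1 values within a single time step; the function name `synaptic_input` and the example weights are hypothetical.

```python
def synaptic_input(spikes, weights):
    """Sum incoming spikes (0 or 1), each scaled by its synaptic weight."""
    if len(spikes) != len(weights):
        raise ValueError("each pre-synaptic neuron needs exactly one weight")
    return sum(w * s for w, s in zip(weights, spikes))


# Three pre-synaptic neurons: the first and third fire in this time step.
# A negative weight stands in for an inhibitory synapse.
total = synaptic_input([1, 0, 1], [0.5, 0.8, -0.25])
```

In a full simulation, this total would typically be fed as the input drive to the post-synaptic neuron's membrane equation.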

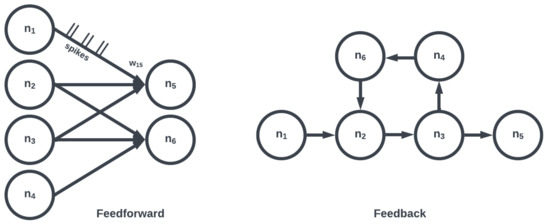

Figure 5. Patterns of connectivity are determined by weights between neurons.

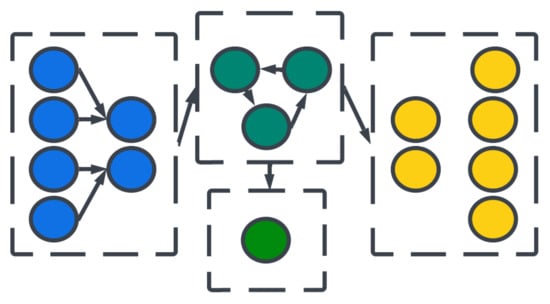

Figure 6. Large collections of motifs form layers or populations (e.g., convolutional, recurrent). Two networks consisting of the same number of neurons and edges may differ depending on organization.
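
To make the point about organization concrete, the sketch below encodes two hypothetical three-neuron networks as weight matrices, where `weights[i][j]` is the weight from neuron `i` to neuron `j` and zero means no connection. Both networks have three edges, but one is feed-forward and the other is a recurrent loop. The matrices and helper names are assumptions made for illustration only.

```python
def count_edges(weights):
    """Count non-zero entries, i.e., existing synaptic connections."""
    return sum(1 for row in weights for w in row if w != 0.0)


def has_cycle(weights):
    """Depth-first search for a directed cycle (recurrent connectivity)."""
    n = len(weights)
    state = [0] * n  # 0 = unvisited, 1 = on current path, 2 = finished

    def visit(i):
        state[i] = 1
        for j in range(n):
            if weights[i][j] != 0.0:
                if state[j] == 1:
                    return True  # reached a neuron already on the path
                if state[j] == 0 and visit(j):
                    return True
        state[i] = 2
        return False

    return any(state[i] == 0 and visit(i) for i in range(n))


# Feed-forward motif: 0 -> 1, 0 -> 2, 1 -> 2.
feed_forward = [[0.0, 0.5, 0.3],
                [0.0, 0.0, 0.7],
                [0.0, 0.0, 0.0]]

# Recurrent loop: 0 -> 1, 1 -> 2, 2 -> 0.
recurrent = [[0.0, 0.5, 0.0],
             [0.0, 0.0, 0.7],
             [0.3, 0.0, 0.0]]
```

Both matrices use the same neuron and edge counts, yet only the second lets activity circulate back to its origin.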

Figure 7. Organizations of neural layers and populations form architectures.

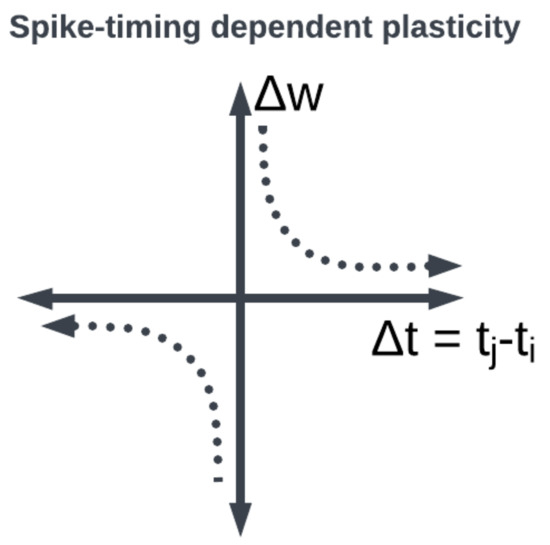

Figure 8. STDP synaptic learning rule window function: the weight between neurons is modified depending on when the pre- and post-synaptic neurons fired.
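
A minimal sketch of such a window function is given below. It assumes the commonly used exponential form of STDP, in which a pre-synaptic spike that precedes the post-synaptic spike strengthens the synapse and the reverse order weakens it. The exact window plotted in Figure 8 is not reproduced in this text, so the function name `stdp_delta_w`, the amplitudes, and the time constants are illustrative assumptions.

```python
import math


def stdp_delta_w(delta_t, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Weight change for delta_t = t_post - t_pre (in ms).

    Assumed exponential window; amplitudes and time constants are
    hypothetical values chosen for illustration.
    """
    if delta_t > 0:
        # Pre fired before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Post fired before pre: depression, decaying with the gap.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


# Closely timed spike pairs change the weight more than distant ones.
near = stdp_delta_w(5.0)
far = stdp_delta_w(50.0)
```

The sign of the update depends only on the firing order, and its magnitude shrinks as the two spikes move further apart in time.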

References

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99.

- Mehonic, A.; Kenyon, A.J. Brain-inspired computing needs a master plan. Nature 2022, 604, 255–260.

- Yuste, R. From the neuron doctrine to neural networks. Nat. Rev. Neurosci. 2015, 16, 487–497.

- Yuste, R.; Tank, D.W. Dendritic integration in mammalian neurons, a century after Cajal. Neuron 1996, 16, 701–716.

- Ferrante, M.; Migliore, M.; Ascoli, G.A. Functional impact of dendritic branch-point morphology. J. Neurosci. 2013, 33, 2156–2165.

- Ward, M.; Rhodes, O. Beyond LIF Neurons on Neuromorphic Hardware. Front. Neurosci. 2022, 16, 881598.

- Bishop, J.M. Chapter 2: History and Philosophy of Neural Networks. In Computational Intelligence; Eolss Publishers: Paris, France, 2015.

- Bateson, G. Steps to an Ecology of Mind: Collected Essays in Anthropology, Psychiatry, Evolution, and Epistemology; University of Chicago Press: Chicago, IL, USA, 1972.

- Izhikevich, E.M. Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 2004, 15, 1063–1070.

- Izhikevich, E.M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 2003, 14, 1569–1572.

- Izhikevich, E.M. Dynamical Systems in Neuroscience: The Geometry of Excitability and Bursting; MIT Press: Cambridge, MA, USA, 2007; p. 441.

- Hoppensteadt, F.C.; Izhikevich, E. Canonical neural models. In Brain Theory and Neural Networks; The MIT Press: Cambridge, MA, USA, 2001.

- Brette, R. Philosophy of the spike: Rate-based vs. spike-based theories of the brain. Front. Syst. Neurosci. 2015, 9, 151.

- Reich, D.S.; Mechler, F.; Purpura, K.P.; Victor, J.D. Interspike Intervals, Receptive Fields, and Information Encoding in Primary Visual Cortex. J. Neurosci. 2000, 20, 1964–1974.

- Song, S.; Sjöström, P.J.; Reigl, M.; Nelson, S.; Chklovskii, D.B. Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 2005, 3, e68.

- Sporns, O. The non-random brain: Efficiency, economy, and complex dynamics. Front. Comput. Neurosci. 2011, 5, 5.

- Milo, R.; Shen-Orr, S.; Itzkovitz, S.; Kashtan, N.; Chklovskii, D.; Alon, U. Network motifs: Simple building blocks of complex networks. Science 2002, 298, 824–827.

- Suárez, L.E.; Richards, B.A.; Lajoie, G.; Misic, B. Learning function from structure in neuromorphic networks. Nat. Mach. Intell. 2021, 3, 771–786.

- Hebb, D. The Organization of Behavior: A Neuropsychological Theory, 1st ed.; Psychology Press: London, UK, 1949.

- Song, S.; Miller, K.D.; Abbott, L.F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 2000, 3, 919–926.

- Markram, H.; Gerstner, W.; Sjöström, P.J. A history of spike-timing-dependent plasticity. Front. Synaptic Neurosci. 2011, 3, 4.

- Gavrilov, A.V.; Panchenko, K.O. Methods of learning for spiking neural networks. A survey. In Proceedings of the 2016 13th International Scientific-Technical Conference on Actual Problems of Electronics Instrument Engineering (APEIE), Novosibirsk, Russia, 3–6 October 2016; IEEE: Piscataway, NJ, USA, 2016; Volume 2, pp. 455–460.

- Taherkhani, A.; Belatreche, A.; Li, Y.; Cosma, G.; Maguire, L.P.; McGinnity, T.M. A review of learning in biologically plausible spiking neural networks. Neural Netw. 2020, 122, 253–272.

- Midya, R.; Wang, Z.; Asapu, S.; Joshi, S.; Li, Y.; Zhuo, Y.; Song, W.; Jiang, H.; Upadhay, N.; Rao, M.; et al. Artificial neural network (ANN) to spiking neural network (SNN) converters based on diffusive memristors. Adv. Electron. Mater. 2019, 5, 1900060.

- Rueckauer, B.; Liu, S.C. Conversion of analog to spiking neural networks using sparse temporal coding. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- Shrestha, S.B.; Orchard, G. Slayer: Spike layer error reassignment in time. arXiv 2018, arXiv:1810.08646.

- Neftci, E.O.; Mostafa, H.; Zenke, F. Surrogate gradient learning in spiking neural networks: Bringing the power of gradient-based optimization to spiking neural networks. IEEE Signal Process. Mag. 2019, 36, 51–63.
