You're using an outdated browser. Please upgrade to a modern browser for the best experience.

Please note this is a comparison between Version 3 by Junming Chen and Version 2 by Junming Chen.

The outstanding buildings designed by master architects are the common wealth of mankind. They reflect their design skills and concepts and are not possessed by ordinary architectural designers. Compared with traditional methods that rely on a lot of mental labor for innovative design and drawing, artificial intelligence (AI) methods have greatly improved the creativity and efficiency of the design process. It overcomes the difficulty in specifying styles for generating high-quality designs in traditional diffusion models.

- architectural design

- text to design

- design process optimization

- design quality

- design style

- diffusion model

1. Introduction

1.1. Background and Motivation

Often the icon of a city, excellent architecture can attract tourists and promote local economic development [ 1 ]. However, designing outstanding architecture via conventional design methods poses multiple challenges. For one thing, conventional design methods involve a significant amount of manual drawing and design modifications [ 2 , 3 , 4 ], resulting in low design efficiency [ 4 ]. For another thing, cultivating designers with superb skills and ideas usually proves difficult [ 4 , 5 ], hence low-quality and inefficient architectural designs [ 5 , 6 ]. Such issues in the construction industry warrant urgent solutions.

1.2. Problem Statement and Objectives

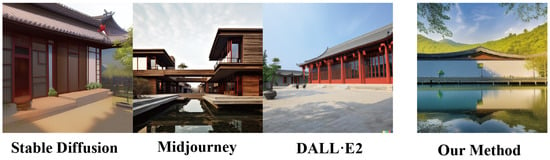

Artificial intelligence (AI) has been widely used in daily life [ 7 , 8 , 9 , 10 ]. Specifically, diffusion models can assist in addressing the low efficiency and quality in architectural design. Based on the machine learning concept, diffusion models are trained by learning knowledge from a vast amount of data [ 11[ 11 , 12 ] , 12 ] to generate diverse designs based on text prompts [ 13 ]. Nevertheless, the current mainstream diffusion models, such as Stable Diffusion [ 14 ], Midjourney [ 15 ], and DALL E2 [ 11], have limited applications in architectural design due to their inability to embed specific design style and form in the generated architectural designs ( Figure 1 ).

Figure 1. Mainstream diffusion models compared with the proposed method for generating architectural designs. Stable Diffusion [ 14 ] fails to generate an architectural design with a specific style, and the image is not aesthetically pleasing (left panel). The architectural design styles generated by Midjourney [ 15 ] (second from the left) and DALL E2 [ 11 ] (third from the left) are incorrect. None of these generated images met the design requirements. The proposed method (far right) generates architectural design in the correct design style . (Prompt: “An architectural photo in the Shu Wang style, photo, realistic, high definition”).

2. Architectural Design

Architectural designing relies on the professional skills and concepts of designers. Outstanding architectural designs play a crucial role in showcasing the image of a city [ 2 , 3 , 4 ]. Moreover, iconic landmark architecture in a city stimulates local employment and boosts the tourism industry [ 1 , 25 ].

Designers typically communicate architectural design proposals with clients through visual renderings. However, this conventional method has low efficiency and low quality. The inefficiency stems from the complexity of the conventional design process involving extensive manual drawing tasks [2, 5], such as creating 2D drawings, building 3D models, applying material textures, and rendering visual effects [ 26 ]. This linear design process restricts client involvement in the decision-making until producing the final rendered images. If clients find the design not to meet their expectations upon viewing the final images, designers must redo the entire design, leading to repetitive modifications [ 2 , 3 , 4]. Consequently, the efficiency of this design practice needs improvement [ 26 ].

The reason for the low quality of architectural design is that it is difficult to train excellent designers and the process of improving design capabilities is long. Designers' lack of design skills makes it difficult to improveit difficult to improve design quality [4,5,6[4,5,6 ] ] . However, the improvement of design capabilities is a gradual process , andprocess , and designers must constantly learn new design methods and explore different design stylesdesign [styles [ 2,3,6,27,28,29 ]. 2,3,6,27,28,29 ] . At the same time, seeking the best design solution under complex conditions also brings huge challenges to designers [ 2 , 5 ].

All these factors ultimately lead to inefficient and low-quality architectural designs [ 2 , 5 ]. Therefore, new technologies must be introduced into the construction industry in a timely manner to solve these problems.

3.Diffusion Model

In recent years, the diffusion model has rapidly developed into a mainstream image generation model [[ 19, 20, 21 , 22, 30, 31 ], which 19, 20, 21 , 22, 30, 31 ] , which allowsallows designers to quickly obtain images, thereby significantly improving the efficiency and quality of architectural design [ 13 , 32 ].

The traditional diffusion model includes forward process and backward process. During the forward process, noise is continuously added to the input image, transforming it into a noisy image. The purpose of the backward process is to restore the original image from the noisy image [ 33 ]. By learning the image denoising process, the diffusion model acquires the ability to generate images [ 32 , 34 ]. When there is a need to generate images with specific design elements, designers can incorporate text cues into the denoising process of the diffusion model to generate consistent images and achieve controllability over the generated results [ 35 , 36, 37[ 35 , 36, 37 , 38, 39 , 40 ]. ,The 38, 39 ],advantage of using 40text-guided ].diffusion The advantage of using text-guided diffusion models models for image generation is that they allow simple control of image generation [ 12, 14, 41, 42 , 43 ] .

Although diffusion models perform well in most fields, there is still room for improvement in their application in architectural design [ 35 , 44 ]. Specifically, the limitation comes from obtaining large amounts of Internet data for training, which lacks high-quality annotations with professional architectural terminology. As a result, the model fails to establish connections between architectural design and architectural language during the learning process, which makes it challengingAs a result, the model fails to establish connections between architectural design and architectural language during the learning process, which makes it challenging to use professional design vocabulary to guide architectural design generation [ 45, 46, 47, 48 ] . Therefore, it is necessary to collect high-quality architectural design images, annotate them with relevant information, and then fine-tune the model to adapt it to the architectural design task.

4. Model Fine-tuning

Diffusion models learn new knowledge and concepts through complete retraining or fine-tuning for new scenarios. Due to the huge cost of retraining the entire model, the need for large image datasets, and the long training time [ 11 , 15 ], model fine-tuning is currently the most feasible.

There are four standard methods of fine-tuning. The first is text inversion [ 11, 36, 46 , 49], which freezes the text-to-image model and provides only the most suitable embedding vectors to embed new knowledge. . This method provides fast model training and minimal generative models, but the image generation effect is mediocre. The second is the Hypernetwork [ 47 ] method, which inserts a separate small neural network in the middle layer of the original diffusion model to affect the output. This method is faster to train, but the image generation effect is average. The third one is LoRA [ 48 ], which assigns weights to cross-layer attention to allow learning new knowledge. This method can generate models with an average size of hundreds of MB after moderate training time, and the image generation effect is good. The fourth is the Dreambooth[ 45 ] method, which is an overall fine-tuning of the original diffusion model. Using this method, a prior-preserving loss was designed to train the diffusion model, enabling it to generate images consistent with the cues while preventing overfitting [ 50 , 51 ]. It is recommended to use rare vocabulary when naming new knowledge to avoid language drift due to similarity with the original model vocabulary [ 50 , 51 ]. This method requires only 3 to 5 images of a specific subject and corresponding text descriptions, can be fine-tuned for specific situations, and matches specific text descriptions to the characteristics of the input images. Fine-tuned models generate images based on specific topic terms and general descriptors [ 31 , 46]. Since the entire model is fine-tuned using the Dreambooth method, the results produced are usually the best of these methods.