Artificial Intelligence (AI) describes computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and language translation. AI can be applied in many different areas, such as econometrics, biometry, e-commerce, and the automotive industry. AI has found its way into healthcare as well, helping doctors make better decisions, localizing tumors in magnetic resonance images, reading and analyzing reports written by radiologists and pathologists, and much more. However, AI has one big risk: it can be perceived as a “black box”, limiting trust in its reliability, which is a very big issue in an area in which a decision can mean life or death. As a result, the term Explainable Artificial Intelligence (XAI) has been gaining momentum. XAI tries to ensure that AI algorithms (and the resulting decisions) can be understood by humans.

- XAI

- AI

- artificial intelligence

- healthcare

- medicine

- explainability

- data science

- machine learning

- deep learning

- neural networks

1. Introduction

2. Central Concepts of XAI

2.1. From “Black Box” to “(Translucent) Glass Box”

With explainable AI, we try to progress from a “black box” to a transparent “glass box” [11] (sometimes also referred to as a “white box” [12]). In a glass box model (such as a decision tree or linear regression model), all parameters are known, and we know exactly how the model comes to its conclusion, giving full transparency. In the ideal situation, the model is fully transparent, but in many situations (e.g., deep learning models), the model might be explainable only to a certain degree, which could be described as a “translucent glass box” with an opacity level somewhere between 0% and 100%. A low opacity of the translucent glass box (or high transparency of the model) can lead to a better understanding of the model, which, in turn, could increase trust. This trust can exist on two levels, trust in the model versus trust in the prediction, as explained by Ribeiro et al. [13]. In healthcare, there are many different stakeholders who have different explanation needs [14]. For example, data scientists are usually most interested in the model itself, whereas users (often clinicians, but sometimes patients) are most interested in the predictions based on that model. Therefore, trust for data scientists generally means trust in the model itself, while trust for clinicians and patients means trust in its predictions. The “trusting a prediction” problem can be addressed by providing explanations for individual predictions, whereas the “trusting the model” problem can be addressed by selecting multiple such predictions (and explanations) [13]. Future research could determine in which context either of these two approaches should be applied.

2.2. Explainability: Transparent or Post-Hoc

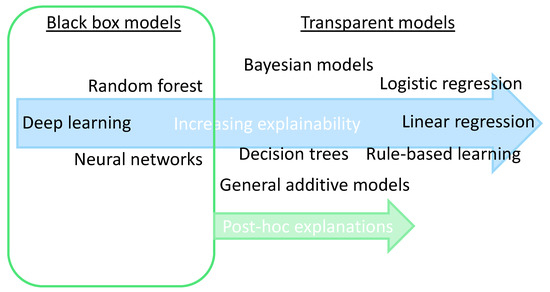

Arrieta et al. [15] classified studies on XAI into two approaches: some works focus on creating transparent models, while most works wrap black-box models with a layer of explainability, the so-called post-hoc models (Figure 1). The transparent models are based on linear or logistic regression, decision trees, k-nearest neighbors, rule-based learning, generalized additive models, and Bayesian models. These models are considered to be transparent because they are understandable by themselves. Black-box models (such as neural networks, random forests, and deep learning models) are not understandable by themselves and need to be explained post hoc by diverse means that enhance their interpretability, such as text explanations, visual explanations, local explanations, explanations by example, explanations by simplification, and feature relevance explanations.

Phillips et al. [16] define four principles for explainable AI systems: (1) explanation: explainable AI systems deliver accompanying evidence or reasons for outcomes and processes; (2) meaningful: provide explanations that are understandable to individual users; (3) explanation accuracy: provide explanations that correctly reflect the system’s process for generating the output; and (4) knowledge limits: a system only operates under conditions for which it was designed and when it reaches sufficient confidence in its output. Vale et al. [17] state that machine learning post-hoc explanation methods cannot guarantee the insights they generate, which means that they cannot be relied upon as the only mechanism to guarantee the fairness of model outcomes in high-stakes decision-making, such as in healthcare.
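The contrast between a transparent model and a post-hoc explanation can be illustrated with a small Python sketch. It is not taken from any of the cited works: the feature names, weights, thresholds, and synthetic data are all hypothetical, and permutation-based feature relevance is used here only as one simple example of a post-hoc feature relevance technique. The transparent score can be read directly from its weights, whereas the stand-in black box is only probed through its inputs and outputs.

```python
import random


def transparent_score(age, systolic_bp):
    """Glass box: the two weights and the intercept are the whole model.

    The weights are hypothetical and chosen only for illustration.
    """
    return 0.04 * age + 0.01 * systolic_bp - 4.0


def black_box_predict(row):
    """Stand-in for an opaque model: callers only see inputs and outputs."""
    age, systolic_bp, _noise = row  # the third feature is ignored by the model
    return 1 if (age > 75 or (age > 60 and systolic_bp > 140)) else 0


def accuracy(predict, rows, labels):
    """Fraction of rows for which the prediction equals the label."""
    hits = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return hits / len(rows)


def permutation_relevance(predict, rows, labels, n_features, seed=0):
    """Post-hoc feature relevance: accuracy lost when one feature is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    relevance = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        permuted = [row[:j] + (value,) + row[j + 1:]
                    for row, value in zip(rows, column)]
        relevance.append(baseline - accuracy(predict, permuted, labels))
    return relevance


# Synthetic, hypothetical data: (age, systolic blood pressure, pure noise).
data_rng = random.Random(42)
rows = [(data_rng.uniform(30, 90), data_rng.uniform(100, 180), data_rng.random())
        for _ in range(200)]
# For simplicity the labels are the black box's own outputs on the original data.
labels = [black_box_predict(row) for row in rows]

relevance = permutation_relevance(black_box_predict, rows, labels, n_features=3)
for name, value in zip(["age", "systolic_bp", "noise"], relevance):
    print(f"{name}: accuracy drop {value:.3f}")
```

In this sketch, a feature the black box never uses shows no accuracy drop when shuffled, while a feature it relies on does. In line with the caution of Vale et al., such a score describes the model's behavior on the data at hand and does not by itself guarantee a faithful or fair explanation.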

2.3. Collaboration between Humans and AI

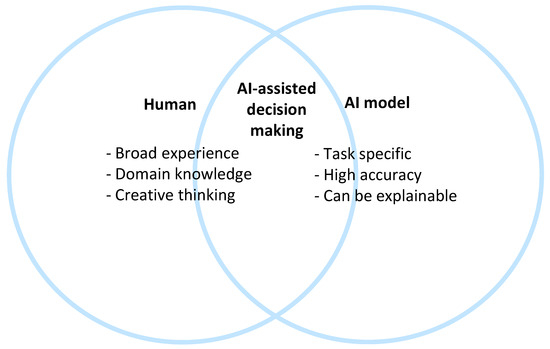

It is important for clinicians (but also patients, researchers, etc.) to realize that humans cannot and should not be replaced by an AI algorithm [18]. An AI algorithm could outscore humans in specific tasks, but humans (at this moment in time) still have added value with their domain expertise, broad experience, and creative thinking skills. It might be the case that when the accuracy of an AI algorithm on a specific task is compared to the accuracy of the clinician, the AI gets better results. However, the AI model should not be compared to the human alone but to the combination of the AI model and a human because, in clinical practice, they will almost always work together. In most cases, the combination (also known as “AI-assisted decision making”) will obtain the best results [19]. The combination of an AI model with human expertise also makes the decision more explainable: the clinician can combine the explainable AI with their own domain knowledge. In clinical decision support (CDS), explainability allows developers to identify shortcomings in a system and allows clinicians to be confident in the decisions they make with the support of AI [20]. Amann et al. state that if we were to move in the opposite direction, toward opaque algorithms in CDS systems (CDSS), this may inadvertently lead to patients being passive spectators in the medical decision-making process [21]. Figure 2 shows what qualities a human and an AI model can offer in clinical decision-making, with the combination offering the best results. In the future, there might be a shift to the right side of the figure, but the specific qualities of humans will likely ensure that combined decision-making will still be the best option for years to come.

2.4. Scientific Explainable Artificial Intelligence (sXAI)

Durán (2021) [22] differentiates scientific XAI (sXAI) from other forms of XAI. He states that the current approach to XAI is a bottom-up model: it consists of structuring all forms of XAI, attending to the current technology and available computational methodologies, which could lead to confusing classifications (or “how-explanations”) with explanations. Instead, he proposes a bona fide scientific explanation in medical AI. This explanation addresses three core components: (1) the structure of sXAI, consisting of the “explanans” (the unit that carries out an explanation), the “explanandum” (the unit that will be explained), and the “explanatory relation” (the objective relation of dependency that links the explanans and the explanandum); (2) the role of human agents and non-epistemic beliefs in sXAI; and (3) how human agents can meaningfully assess the merits of an explanation. He concludes by proposing a shift from standard XAI to sXAI, together with substantial changes in the way medical XAI is constructed and interpreted. Cabitza et al. [23] discuss this approach and conclude that existing XAI methods fail to be bona fide explanations, which is why their framework cannot be applied to current XAI work. For sXAI to work, it needs to be integrated into future medical AI algorithms in a top-down manner. This means that algorithms should not be explained by simply describing “how” a decision has been reached; we should also look at what other scientific disciplines, such as philosophy of science, epistemology, and cognitive science, can add to the discussion [22]. For each medical AI algorithm, the explanans, explanandum, and explanatory relation should be defined.

2.5. Explanation Methods: Granular Computing (GrC) and Fuzzy Modeling (FM)

Many methods exist to explain AI algorithms, as described in detail by Holzinger et al. [24]. One technique is particularly useful in XAI because it is motivated by the need to approach AI through human-centric information processing [25]: Granular Computing (GrC), which was introduced by Zadeh in 1979 [26]. GrC is an “emerging paradigm in computing and applied mathematics to process data and information, where the data or information are divided into so-called information granules that come about through the process of granulation” [27]. GrC can help make models more interpretable and explainable by bridging the gap between abstract concepts and concrete data through these granules. Another useful technique related to GrC is Fuzzy Modeling (FM), a methodology oriented toward the design of explanatory and predictive models. FM is a technique through which a linguistic description can be transformed into an algorithm whose result is an action [28]. Fuzzy modeling can help explain the reasoning behind the output of an AI system by representing the decision-making process in a way that is more intuitive and interpretable. Although FM was originally conceived to provide easily understandable models to users, this property cannot be taken for granted; it requires careful design choices [29]. Much research in this area is still ongoing. Zhang et al. [30] discuss the multi-granularity three-way decisions paradigm [31] and how this acts as a part of granular computing models, playing a significant role in explainable decision-making. Zhang et al. [32] adopt a GrC framework named “multigranulation probabilistic models” to enrich semantic interpretations for GrC-based multi-attribute group decision-making (MAGDM) approaches. In healthcare, GrC could, for example, help break down a CDS algorithm into smaller components, such as the symptoms, patient history, test results, and treatment options.
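To make the two ideas above more concrete, the following minimal Python sketch granulates a numeric measurement into linguistic terms and evaluates one fuzzy rule. It is an illustration under stated assumptions, not a method from the cited works: the triangular membership functions, the temperature and heart-rate boundaries, and the rule itself are hypothetical and have no clinical validity.

```python
def triangular(x, left, peak, right):
    """Degree (0 to 1) to which x belongs to a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Information granules for body temperature in degrees C.
# Each entry is (left, peak, right); all boundaries are hypothetical.
TEMPERATURE_GRANULES = {
    "normal": (35.5, 36.8, 37.8),
    "elevated": (37.2, 38.0, 38.8),
    "high": (38.3, 39.5, 41.5),
}


def granulate(temp_c):
    """Map a crisp temperature to membership degrees in each granule."""
    return {name: triangular(temp_c, *bounds)
            for name, bounds in TEMPERATURE_GRANULES.items()}


def explain_temperature(temp_c):
    """Return a linguistic description of the dominant granule."""
    memberships = granulate(temp_c)
    label = max(memberships, key=memberships.get)
    return (f"temperature {temp_c:.1f} degrees C is mostly '{label}' "
            f"(degree {memberships[label]:.2f})")


def rule_fever_alert(temp_c, heart_rate):
    """Fuzzy rule: IF temperature is high AND heart rate is fast THEN alert.

    AND is modeled with the minimum operator; the 'fast' heart-rate set
    (90, 120, 200 beats per minute) is a hypothetical choice.
    """
    high_temperature = granulate(temp_c)["high"]
    fast_heart_rate = triangular(heart_rate, 90, 120, 200)
    return min(high_temperature, fast_heart_rate)


print(explain_temperature(38.0))
print("alert strength:", rule_fever_alert(39.0, 110))
```

The output of such a rule can be reported in the same linguistic terms that were used to define it, which is what makes the approach attractive for explanation. As noted above, however, this interpretability depends on careful design of the granules and rules.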
Breaking a CDS algorithm down in this way can help the clinician understand how the algorithm arrived at its diagnosis and determine whether it is reliable and accurate. FM could, for example, be used in a CDS system to represent the uncertainty and imprecision in the input data, such as patient symptoms, and in the decision-making process, such as the rules that are used to arrive at a diagnosis. This can help to provide a more transparent and understandable explanation of how the algorithm arrived at its output. Recent examples of the application of GrC and FM in healthcare are in the disease areas of Parkinson’s disease [33], COVID-19 [34], and Alzheimer’s disease [35].

References

- Joiner, I.A. Chapter 1—Artificial intelligence: AI is nearby. In Emerging Library Technologies; Joiner, I.A., Ed.; Chandos Publishing: Oxford, UK, 2018; pp. 1–22.

- Hulsen, T. Literature analysis of artificial intelligence in biomedicine. Ann. Transl. Med. 2022, 10, 1284.

- Yu, K.-H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731.

- Hulsen, T.; Jamuar, S.S.; Moody, A.; Karnes, J.H.; Orsolya, V.; Hedensted, S.; Spreafico, R.; Hafler, D.A.; McKinney, E. From Big Data to Precision Medicine. Front. Med. 2019, 6, 34.

- Hulsen, T.; Friedecký, D.; Renz, H.; Melis, E.; Vermeersch, P.; Fernandez-Calle, P. From big data to better patient outcomes. Clin. Chem. Lab. Med. (CCLM) 2022, 61, 580–586.

- Biswas, S. ChatGPT and the Future of Medical Writing. Radiology 2023, 307, e223312.

- Celi, L.A.; Cellini, J.; Charpignon, M.-L.; Dee, E.C.; Dernoncourt, F.; Eber, R.; Mitchell, W.G.; Moukheiber, L.; Schirmer, J.; Situ, J. Sources of bias in artificial intelligence that perpetuate healthcare disparities—A global review. PLoS Digit. Health 2022, 1, e0000022.

- Hulsen, T. Sharing Is Caring-Data Sharing Initiatives in Healthcare. Int. J. Environ. Res. Public Health 2020, 17, 3046.

- Vega-Márquez, B.; Rubio-Escudero, C.; Riquelme, J.C.; Nepomuceno-Chamorro, I. Creation of synthetic data with conditional generative adversarial networks. In Proceedings of the 14th International Conference on Soft Computing Models in Industrial and Environmental Applications (SOCO 2019), Seville, Spain, 13–15 May 2019; Springer: Cham, Switzerland, 2020; pp. 231–240.

- Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI-Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

- Rai, A. Explainable AI: From black box to glass box. J. Acad. Mark. Sci. 2020, 48, 137–141.

- Loyola-Gonzalez, O. Black-box vs. white-box: Understanding their advantages and weaknesses from a practical point of view. IEEE Access 2019, 7, 154096–154113.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Why should I trust you?: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Gerlings, J.; Jensen, M.S.; Shollo, A. Explainable AI, but explainable to whom? An exploratory case study of xAI in healthcare. In Handbook of Artificial Intelligence in Healthcare: Practicalities and Prospects; Lim, C.-P., Chen, Y.-W., Vaidya, A., Mahorkar, C., Jain, L.C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; Volume 2, pp. 169–198.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Phillips, P.J.; Hahn, C.A.; Fontana, P.C.; Broniatowski, D.A.; Przybocki, M.A. Four Principles of Explainable Artificial Intelligence; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020; Volume 18.

- Vale, D.; El-Sharif, A.; Ali, M. Explainable artificial intelligence (XAI) post-hoc explainability methods: Risks and limitations in non-discrimination law. AI Ethics 2022, 2, 815–826.

- Bhattacharya, S.; Pradhan, K.B.; Bashar, M.A.; Tripathi, S.; Semwal, J.; Marzo, R.R.; Bhattacharya, S.; Singh, A. Artificial intelligence enabled healthcare: A hype, hope or harm. J. Fam. Med. Prim. Care 2019, 8, 3461–3464.

- Zhang, Y.; Liao, Q.V.; Bellamy, R.K.E. Effect of Confidence and Explanation on Accuracy and Trust Calibration in AI-Assisted Decision Making. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 295–305.

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current Challenges and Future Opportunities for XAI in Machine Learning-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2021, 11, 5088.

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I.; the Precise4Q consortium. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310.

- Durán, J.M. Dissecting scientific explanation in AI (sXAI): A case for medicine and healthcare. Artif. Intell. 2021, 297, 103498.

- Cabitza, F.; Campagner, A.; Malgieri, G.; Natali, C.; Schneeberger, D.; Stoeger, K.; Holzinger, A. Quod erat demonstrandum?—Towards a typology of the concept of explanation for the design of explainable AI. Expert Syst. Appl. 2023, 213, 118888.

- Holzinger, A.; Saranti, A.; Molnar, C.; Biecek, P.; Samek, W. Explainable AI methods—A brief overview. In Proceedings of the xxAI—Beyond Explainable AI: International Workshop, Held in Conjunction with ICML 2020, Vienna, Austria, 12–18 July 2020; Holzinger, A., Goebel, R., Fong, R., Moon, T., Müller, K.-R., Samek, W., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 13–38.

- Bargiela, A.; Pedrycz, W. Human-Centric Information Processing through Granular Modelling; Springer Science & Business Media: Dordrecht, The Netherlands, 2009; Volume 182.

- Zadeh, L.A. Fuzzy sets and information granularity. In Fuzzy Sets, Fuzzy Logic, and Fuzzy Systems: Selected Papers; World Scientific: Singapore, 1979; pp. 433–448.

- Keet, C.M. Granular computing. In Encyclopedia of Systems Biology; Dubitzky, W., Wolkenhauer, O., Cho, K.-H., Yokota, H., Eds.; Springer: New York, NY, USA, 2013; p. 849.

- Novák, V.; Perfilieva, I.; Dvořák, A. What is fuzzy modeling. In Insight into Fuzzy Modeling; John Wiley & Sons: Hoboken, NJ, USA, 2016; pp. 3–10.

- Mencar, C.; Alonso, J.M. Paving the way to explainable artificial intelligence with fuzzy modeling: Tutorial. In Proceedings of the Fuzzy Logic and Applications: 12th International Workshop (WILF 2018), Genoa, Italy, 6–7 September 2018; Springer International Publishing: Cham, Switzerland, 2019; pp. 215–227.

- Zhang, C.; Li, D.; Liang, J. Multi-granularity three-way decisions with adjustable hesitant fuzzy linguistic multigranulation decision-theoretic rough sets over two universes. Inf. Sci. 2020, 507, 665–683.

- Zadeh, L.A. Toward a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic. Fuzzy Sets Syst. 1997, 90, 111–127.

- Zhang, C.; Li, D.; Liang, J.; Wang, B. MAGDM-oriented dual hesitant fuzzy multigranulation probabilistic models based on MULTIMOORA. Int. J. Mach. Learn. Cybern. 2021, 12, 1219–1241.

- Zhang, C.; Ding, J.; Zhan, J.; Sangaiah, A.K.; Li, D. Fuzzy Intelligence Learning Based on Bounded Rationality in IoMT Systems: A Case Study in Parkinson’s Disease. IEEE Trans. Comput. Soc. Syst. 2022, 10, 1607–1621.

- Solayman, S.; Aumi, S.A.; Mery, C.S.; Mubassir, M.; Khan, R. Automatic COVID-19 prediction using explainable machine learning techniques. Int. J. Cogn. Comput. Eng. 2023, 4, 36–46.

- Gao, S.; Lima, D. A review of the application of deep learning in the detection of Alzheimer's disease. Int. J. Cogn. Comput. Eng. 2022, 3, 1–8.