Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 2 by Dean Liu and Version 1 by Jingyao Wang.

Road scene understanding is crucial to the safe driving of autonomous vehicles. Comprehensive road scene understanding requires a visual perception system to deal with a large number of tasks at the same time, which needs a perception model with a small size, fast speed, and high accuracy. As multi-task learning has evident advantages in performance and computational resources, in this paper, a multi-task model YOLO-Object, Drivable Area, and Lane Line Detection (YOLO-ODL) based on hard parameter sharing is proposed to realize joint and efficient detection of traffic objects, drivable areas, and lane lines.

- autonomous vehicles

- visual perception

- multi-task learning

- traffic object detection

1. Introduction

The compositions of autonomous driving vehicles can be divided into three modules: environmental perception, decision planning, and vehicle control. Environmental perception is the most fundamental part to realize autonomous driving and is one of the critical technologies in intelligent vehicles [1]. The performance of perception will determine whether the autonomous vehicle can adapt to the complex and changeable traffic environment. The research progress of computer vision shows that visual perception will play a decisive role in the development of autonomous driving [2]. Moreover, vision sensors have the advantages of mature technologies, low prices, and comprehensive detection [3,4][3][4].

Effective and rapid detection of traffic objects in various environments will ensure the safe driving of autonomous vehicles, but the detection performance is severely limited by road scenes, lighting, weather, and other factors. The development of big data, computing power, and algorithms has continuously improved the accuracy of deep learning.

Great breakthroughs have been made in the automatic driving industry, making the above detection problems expected to be solved. At present, creating deeper learning networks as deep as possible is the main trend in current research [5], and, while significant progress has been made in that direction, the demands on computational power are becoming increasingly demanding. Runtime is becoming very important when it comes to actually deploying applications.

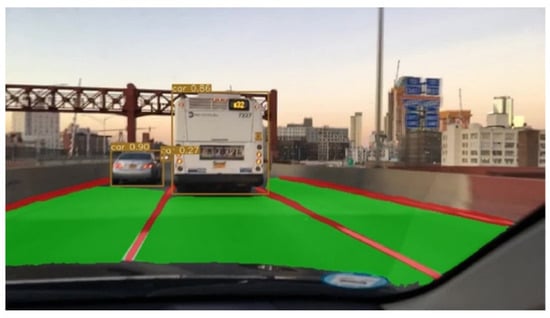

In view of this, aiming at the problems of redundant calculation, slow speed, and low accuracy in the existing perception models, wresearchers propose a multi-task model YOLO-Object, Drivable Area, and Lane Line Detection (YOLO-ODL) model based on hard parameter sharing, which realizes the joint and efficient detection of objects, drivable areas, and lane lines, as shown in Figure 1. The accelerated deployment application of the model is also introduced, so as to provide stable and reliable conditions for decision planning and execution control.

Figure 1. OurThe road detection.

At present, the YOLOv5 object detection model has the best tradeoff between detection accuracy and speed [6]. Based on the YOLOv5s model [7], wresearche rs built the YOLO-ODL multi-task model. YOLO-ODL has a good balance of detection speed and accuracy, achieving state-of-the-art performance on the BDD100K dataset [8], reaching 94 FPS on an RTX 3090 GPU. The detection speed can reach 477 FPS using TensorRT.

2. Object Detection

Transformer-based object detection methods have had dominant performances in recent years. Zhu et al. [9] applied the transformer to YOLO and achieved better detection results. Anchor-based methods are still the mainstream of object detection at present [10]. Their core idea is to introduce anchor boxes, which can be considered as pre-defined proposals, as a priori for bounding boxes, which can be divided into one-stage object detection methods and two-stage object detection methods. As it is necessary to extract object regions from a set of object proposals and then classify them, the two-stage method is less efficient than the one-stage method [11]. The one-stage method uses the one-stage network to directly output the location and category of objects so that it has evident advantages in training and reasoning time. Liu et al. [12] presented a method of detecting objects in images using a single depth neural network SSD, which could improve the detection speed and achieve the accuracy of two-stage detectors at the same time. Lin et al. [13] designed and trained a simple dense detector called RetinaNet that introduced a new loss function Focal Loss, which effectively solved the problem of imbalanced proportions of positive and negative samples in the training process. The subsequent versions of YOLO [7,14,15][7][14][15] made a series of improvement measures to further improve the object detection accuracy on the premise of ensuring the detection speed. The actual running time of the object detection algorithm is very important for deploying the application online, so it is necessary to further balance the detection speed and accuracy. In addition, there are a large number of small objects in the autonomous driving environment, but, due to the low-resolution of small objects and the lack of sufficient appearance and structure information, small-object detection is more challenging than ordinary-object detection.3. Drivable Area Detection

With the rapid development of deep learning technologies, many effective semantic segmentation methods have been proposed and applied to drivable area detection [16]. Long et al. [17] first proposed FCN, which is an end-to-end semantic segmentation network for opening a precedent for using convolutional neural networks to deal with semantic segmentation problems. Badrinarayanan et al. [18] proposed an encoder–decoder semantic segmentation network named SegNet, which is currently widely used. Zhao et al. [19] established PSPNet, a network that extends pixel-wise features to global pyramid pooling features, thereby combining context information to improve detection performance. Unlike the above-mentioned networks, Tian et al. [20] proposed decoder-adopted, data-dependent upsampling (DUpsampling), which can recover the resolution of feature maps from the low-resolution output of the network. Takikawa et al. [21] proposed a new structure GSCNN, which uses a new structure composed of shape branches and rule branches to focus on boundary information and improves the segmentation ability of small objects. Based on deep learning, semantic segmentation effectively improves detection accuracy. However, due to the increasing number of deep learning network layers, the model becomes more complex, which leads to the low efficiency of segmentation and is difficult to apply to autonomous driving scenarios.4. Lane Line Detection

In order to significantly improve the accuracy and robustness of lane line detection, many lane line detection methods based on deep learning have been proposed. Lane line detection methods can be roughly divided into two categories; one is based on classification, the other is based on semantic segmentation. The classification-based lane detection method reduces the size and computation of the model but suffers from a loss in accuracy and cannot detect scenes with many lane lines well [22]. The method based on semantic segmentation classifies each pixel into lane or background. Pan et al. [23] proposed Spatial CNN (SCNN) that replaced the traditional layer-by-layer convolutions with slice-by-slice convolutions, which enabled message passing between pixels across rows and columns in a layer, thereby improving the segmentation ability for long continuous shape structures such as lane lines, poles, and walls. Hou et al. [24] presented a novel knowledge distillation approach named the Self Attention Distillation (SAD) mechanism, which was incorporated into a neural network to obtain significant improvements without any additional supervision or labels. Zheng et al. [25] presented a novel module named REcurrent Feature-Shift Aggregator (RESA) to collect feature map information so that the network could transfer information more directly and efficiently. Lane line detection based on semantic segmentation can effectively increase detection accuracy. However, with the increase in detection accuracy, the network becomes more complex, and each pixel needs to be classified, so the detection speed needs to be improved.5. Multi-Task Learning

Multi-task networks usually adopt the scheme of hard parameter sharing, which consists of a shared encoder and several feature task decoders. The proposed multi-task network [26] has the advantages of small size, fast speed, high accuracy, and can be used for positioning, making it highly suitable for online deployment of autonomous vehicles. Teichmann et al. [2] proposed an efficient and effective feedforward architecture called MultiNet, which combined classification, detection, and semantic segmentation; the approach shares a common encoder and has three branches on which specific tasks are built with multiple convolutional layers. DLT-Net, proposed by Qian et al. [1], and YOLOP, proposed by Wu et al. [27], simultaneously deal with the problems of traffic objects, drivable areas, and lane line detections in one framework. A multi-task network is helpful to improve model generalization and reduce computing costs. However, multi-task learning may also reduce the accuracy of the model due to the need to balance multiple tasks [28]. Models based on multi-task learning need to learn knowledge from different tasks and are highly dependent on the shared parameters of multi-task models. However, the multi-task architectures proposed by most of the current works lack balancing the relationships among the various tasks. Multi-task models are usually difficult to train to achieve the best effect, and unbalanced learning will reduce the performance of a multi-task model, so it is necessary to adopt meaningful feature representation and appropriate balanced learning styles.References

- Qian, Y.; Dolan, J.M.; Yang, M. DLT-Net: Joint detection of drivable areas, lane lines, and traffic objects. IEEE Trans. Intell. Transp. Syst. (IVS) 2019, 21, 4670–4679.

- Teichmann, M.; Weber, M.; Zollner, M.; Cipolla, R.; Urtasun, R. MultiNet: Real-time joint semantic reasoning for autonomous driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1013–1020.

- Sun, Z.; Bebis, G.; Miller, R. On-road vehicle detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711.

- Owais, M. Traffic sensor location problem: Three decades of research. Expert Syst. Appl. 2022, 208, 118134.

- Bhaggiaraj, S.; Priyadharsini, M.; Karuppasamy, K.; Snegha, R. Deep Learning Based Self Driving Cars Using Computer Vision. In Proceedings of the 2023 International Conference on Networking and Communications (ICNWC), Chennai, India, 5–6 April 2023; pp. 1–9.

- Hu, L. An Improved YOLOv5 Algorithm of Target Recognition. In Proceedings of the 2023 IEEE 2nd International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA), Changchun, China, 24–26 February 2023; pp. 1373–1377.

- Jocher, G. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 June 2023).

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving video database with scalable annotation tooling. arXiv 2018, arXiv:1805.04687. Available online: https://arxiv.org/abs/1805.04687 (accessed on 1 June 2023).

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788.

- Railkar, Y.; Nasikkar, A.; Pawar, S.; Patil, P.; Pise, R. Object Detection and Recognition System Using Deep Learning Method. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; pp. 1–6.

- JMaurya, J.; Ranipa, K.R.; Yamaguchi, O.; Shibata, T.; Kobayashi, D. Domain Adaptation using Self-Training with Mixup for One-Stage Object Detection. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 4178–4187.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2020, arXiv:1804.02767. Available online: https://arxiv.org/abs/1804.02767 (accessed on 1 June 2023).

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-J.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. Available online: https://arxiv.org/abs/2004.10934 (accessed on 1 June 2023).

- Miraliev, S.; Abdigapporov, S.; Kakani, V.; Kim, H. Real-Time Memory Efficient Multitask Learning Model for Autonomous Driving. IEEE Trans. Intell. Veh. 2023, 8, 1–12.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Tian, Z.; He, T.; Shen, C.; Yan, Y. Decoders matter for semantic segmentation: Data-dependent decoding enables flexible feature aggregation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3121–3130.

- Takikawa, T.; Acuna, D.; Jampani, V.; Fidler, S. Gated-SCNN: Gated shape cnns for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5228–5237.

- Zakaria, N.J.; Shapiai, M.I.; Ghani, R.A.; Yassin, M.N.M.; Ibrahim, M.Z.; Wahid, N. Lane Detection in Autonomous Vehicles: A Systematic Review. IEEE Access 2023, 11, 3729–3765.

- Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial cnn for traffic scene understanding. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Washington DC, USA, 7–14 February 2023; pp. 7276–7283.

- Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning lightweight lane detection cnns by self attention distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1013–1021.

- Zheng, T.; Fang, H.; Zhang, Y.; Tang, W.; Yang, Z.; Liu, H.; Cai, D. RESA: Recurrent feature-shift aggregator for lane detection. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), Virtual, 2–9 February 2021; pp. 3547–3554.

- Lee, T.; Seok, J. Multi Task Learning: A Survey and Future Directions. In Proceedings of the 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Virtual, 20–23 February 2023; pp. 232–235.

- Wu, D.; Liao, M.-W.; Zhang, W.-T.; Wang, X.-G.; Bai, X.; Cheng, W.-Q.; Liu, W.-Y. YOLOP: You only look once for panoptic driving perception. arXiv 2021, arXiv:2108.11250. Available online: https://arxiv.org/abs/2108.11250 (accessed on 1 June 2023).

- Kim, D.; Lan, T.; Zou, C.; Xu, N.; Plummer, B.A.; Sclaroff, S.; Eledath, J.; Medioni, G. MILA: Multi-task learning from videos via efficient inter-frame attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2219–2229.

More