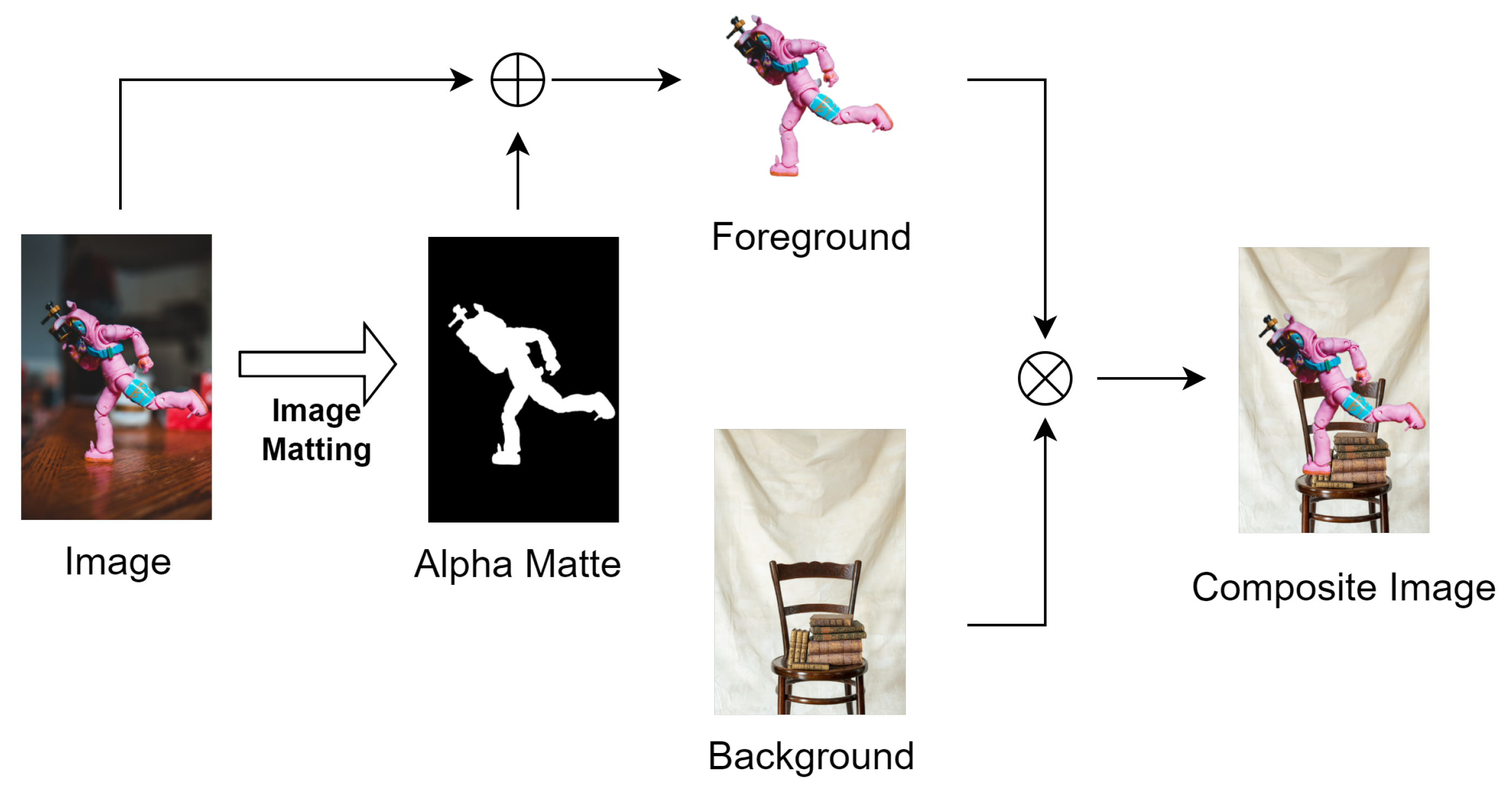

Image matting is a fundamental technique that extracts a finely detailed foreground from an image by estimating the opacity of each pixel. It is one of the key techniques in image processing and has a wide range of practical applications, such as image and video editing.

- image matting

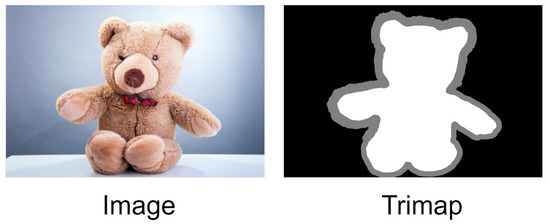

- trimap

- image editing

- medical imaging

- cloud detection

1. Image Matting

2. Trimap

3. Distinguishing Image Matting from Image Semantic Segmentation

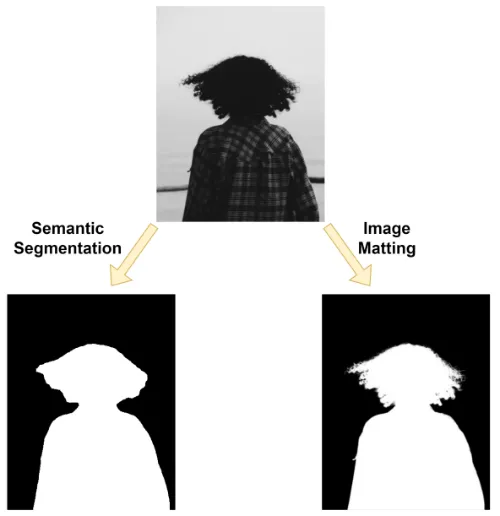

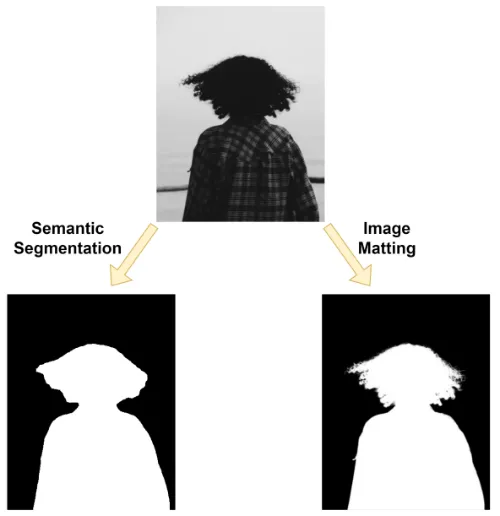

The results of image matting may look similar to those of semantic segmentation, but the two techniques are fundamentally different. Semantic segmentation is a classification task: it extracts semantic information from the input image and then classifies each pixel individually to obtain a semantic mask. When semantic segmentation separates only foreground from background, the binary nature of the labels produces a hard boundary at the foreground edge. Image matting is a regression task: it estimates the opacity of each pixel in the input image, and the resulting alpha matte is used to extract the foreground. A comparison of the results of semantic segmentation and image matting is shown in Figure 3.

Figure 3. Comparison of the image semantic segmentation and image matting results.
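The contrast between a binary mask and an alpha matte can be illustrated with a minimal sketch. It assumes the standard compositing model, I = alpha * F + (1 - alpha) * B, which is not stated explicitly in the text above. The scanline values and the helper names `composite` and `binarize` are hypothetical and chosen only for illustration.

```python
def composite(alpha, foreground, background):
    """Blend per pixel with the assumed model I = alpha * F + (1 - alpha) * B."""
    return [a * f + (1.0 - a) * b for a, f, b in zip(alpha, foreground, background)]


def binarize(alpha, threshold=0.5):
    """Collapse a soft alpha matte into a hard, segmentation-style mask."""
    return [1.0 if a >= threshold else 0.0 for a in alpha]


# Hypothetical grayscale scanline crossing a soft edge (for example, hair):
# opacity falls off gradually from foreground (1.0) to background (0.0).
alpha_matte = [1.0, 1.0, 0.75, 0.5, 0.25, 0.0]
binary_mask = binarize(alpha_matte)

foreground = [200.0] * 6      # foreground intensity along the scanline
new_background = [40.0] * 6   # background to composite onto

matting_result = composite(alpha_matte, foreground, new_background)
segmentation_result = composite(binary_mask, foreground, new_background)

print(matting_result)       # smooth transition across the edge
print(segmentation_result)  # abrupt jump at the mask boundary
```

With the alpha matte, the composited intensities fall gradually across the edge. With the binary mask, they jump from the foreground value to the background value in a single step, which is the strict boundary described above.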

4. Classification of Image Matting Methods

Over years of research, a series of effective algorithms has been designed for the various application scenarios of image matting. These algorithms can be categorized into three groups: sampling-based, propagation-based, and learning-based methods. Sampling-based algorithms predict the opacity of the unknown region by collecting a set of pixels or pixel blocks from the known regions of the trimap. Propagation-based algorithms typically establish connections between adjacent pixels and then use an optimization strategy to propagate opacity information from the known regions into the unknown region, predicting the opacity of each unknown pixel. Learning-based algorithms learn image features from a large amount of data and use these features to predict the opacity. Because deep learning algorithms have been applied to a wide range of visual tasks and have surpassed traditional learning-based algorithms, they are gradually being introduced into image matting.
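The first two categories can be made concrete with a deliberately simplified sketch; it is not a reproduction of any published method. The sampling part assumes a single foreground/background sample pair and estimates opacity by projecting the unknown pixel onto the line between the two samples in color space. The propagation part assumes a 1-D trimap with uniform neighbor weights, whereas practical methods weight neighbors by color similarity. The function names `estimate_alpha` and `propagate_alpha` are hypothetical.

```python
def estimate_alpha(pixel, fg_sample, bg_sample):
    """Sampling-style estimate: project the pixel onto the line from
    bg_sample to fg_sample in color space and clamp to [0, 1]."""
    diff = [f - b for f, b in zip(fg_sample, bg_sample)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        return 0.0  # identical samples: alpha is undetermined, 0.0 is an arbitrary fallback
    num = sum((p - b) * d for p, b, d in zip(pixel, bg_sample, diff))
    return min(1.0, max(0.0, num / denom))


def propagate_alpha(trimap, iterations=200):
    """Propagation-style estimate on a 1-D trimap.

    Entries are 1 (known foreground), 0 (known background), or None (unknown).
    Each unknown pixel is repeatedly replaced by the mean of its two
    neighbors while known pixels stay fixed (uniform affinities assumed).
    """
    alpha = [0.5 if t is None else float(t) for t in trimap]
    last = len(alpha) - 1
    for _ in range(iterations):
        updated = list(alpha)
        for i, t in enumerate(trimap):
            if t is None:
                left = alpha[max(i - 1, 0)]
                right = alpha[min(i + 1, last)]
                updated[i] = 0.5 * (left + right)
        alpha = updated
    return alpha


# Hypothetical RGB samples collected from the known regions of a trimap.
sampled_alpha = estimate_alpha((120, 120, 120), (200, 200, 200), (40, 40, 40))

# Hypothetical 1-D trimap: foreground, unknown band, background.
propagated_alpha = propagate_alpha([1, 1, None, None, None, 0, 0])

print(sampled_alpha)
print(propagated_alpha)
```

In this toy setting the unknown band settles to a linear ramp between the known foreground and background values. A learning-based method would replace both hand-written rules with a model trained on image and alpha matte pairs.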
