Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 1 by Hriday Bavle and Version 3 by Camila Xu.

Situational Awareness (SA) is a fundamental capability of humans that has been deeply studied in various fields, such as psychology, military, aerospace, and education. Nevertheless, it has yet to be considered in robotics, which has focused on single compartmentalized concepts such as sensing, spatial perception, sensor fusion, state estimation, and Simultaneous Localization and Mapping (SLAM).

- simultaneous localization and mapping

- scene understanding

- scene graphs

- mobile robots

1. Introduction

The robotics industry is experiencing an exponential growth, embarking on newer technological advancements and applications. Mobile robots have gained interest from a commercial perspective due to their capabilities to replace or aid humans in repetitive or dangerous tasks [1]. Already, many industrial and civil-related applications employ mobile robots [2]. For example, industrial machines and underground mines’ inspections, surveillance and road-traffic monitoring, civil engineering, agriculture, healthcare, search, and rescue interventions in extreme environments, e.g., natural disasters, for exploration and logistics [3].

On one hand, mobile robots can be controlled in manual teleoperation or semi-autonomous mode with constant human intervention in the loop. Furthermore, teleoperated mobile robots can be apprehended using applications such as augmented reality (AR) [4], ref. [5] to enhance human–robot interactions. On the other hand, in fully autonomous mode, a robot performs an entire mission based on its understanding of the environment given only a few commands [6]. Remarkably, autonomy reduces the costs and risks while increasing productivity and is the goal of current research to solve the main challenges that it raises [7]. Unlike the industrial scenario, where autonomous agents can act in a controlled environment, mobile robots operate in the dynamic, unstructured, and cluttered world domain with little or zero previous knowledge of the scene structure.

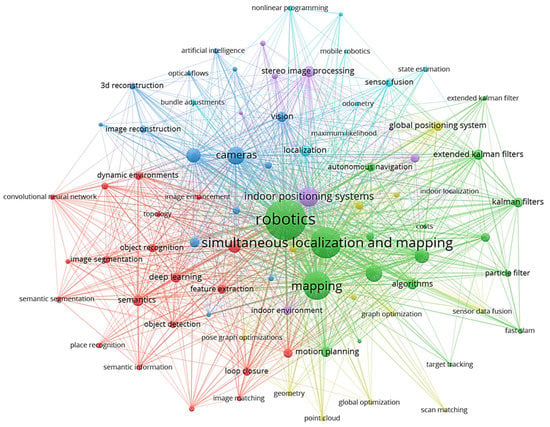

Up to now, the robotics community has focused chiefly on research areas such as sensing, perception, sensor fusion, data analysis, state estimation, localization and mapping, i.e., Simultaneous Localization and Mapping (SLAM), and Artificial Intelligence (AI) applied to various image processing problems, in a compartmentalized manner. Figure 1 shows the mentioned targets’ data obtained from Scopus abstract and citation database. However, autonomous behavior entails understanding the situation encompassing multiple interdisciplinary aspects of robotics, from perception, control, and planning to human–robot interaction. Although SA [8] is a holistic concept widely studied in fields such as psychology, the military, and in aerospace, it has been barely considered in robotics. Notably, Endsley [9] formally defined SA in the 1990s as “the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning and the projection of their status shortly”, which remains valid to date [10].

Figure 1. Scopus database since 2015 covering the research in Robotics and SLAM. All the works focused on independent research areas which could be efficiently encompassed in one field of Situational Awareness for robots.

Therefore, a robot’s Situational Awareness (SA) system must continuously acquire new observations of the surroundings, understand its essential elements and make complex reasoning, and project the world state into a possible future outcome to make decisions and execute actions that would let it accomplish its goals.

2. Situational Perception

Situational perception enables robots to perceive their known state as well as the situation around them using a single or a combination of onboard proprioceptive and exteroceptive sensors. The continuous technological advances regarding chip developments have made many sensors suited for use onboard mobile robots [11], as they come with a small form factor and possibly low power consumption. The primary sensor suite of the average robot can count on a wide array of devices, such as Inertial Measurement Units (IMUs), magnetometers, barometers, and Global Navigation Satellite Systems (GNSS) receivers, e.g., for the typical Global Positioning System (GPS) satellite constellation. Sensors such as IMUs, which can be utilized by robotic platforms to measure their attitude, angular velocities, and linear accelerations, are cheap and lightweight, making them ideal for running onboard the platforms, though the performance of these sensors can degrade over time due to the accumulation of errors coming from white Gaussian noise [12]. Magnetometers are generally integrated within an IMU sensor, measuring the accurate heading of the robotics platform relative to the Earth’s magnetic field. The sensor measurements from a magnetometer can be corrupted when the robot navigates in environments with constant magnetic fields interfering with the Earth’s magnetic field. Barometers can be utilized by flying mobile robots to measure their altitude changes through measured pressure changes, but they suffer from bias and random noise in measurements in indoor environments due to ground/ceiling effects [13]. Wheel encoders are utilized by ground mobile robots to measure the velocities of the platform and obtain its relative position. GNSS receivers, as well as their higher-precision variants, such as Real-Time Kinematic (RTK) or differential GNSS, provide reliable position measurements of robots in a global frame of reference relative to the Earth in outdoor environments. However, these sensors can work reliably in uncluttered outdoor environments with multiple satellites connected or within a direct line of sight with the RTK base station [14].

The adoption of cameras as exteroceptive sensors in robotics has become increasingly prevalent due to their ability to provide a vast range of information in a compact and cost-effective manner [15]. In particular, RGB cameras, including monocular cameras, have been widely used in robotics as primary sensor as it provides the robots with colored images which can be further processed to extract meaningful information from their environment. Additionally, cameras with depth information, such as stereo or RGB-D cameras, have emerged as a dominant sensor type in robotics given that they provide the robot with additional capabilities of perceiving the depth of the different objects within the environment. As such, the use of standard cameras is expected to continue playing a crucial role in developing advanced robotic systems. These standard cameras suffer from the disadvantage of motion blur in the presence of a rapid motion of the robot, and the perceived quality can degrade as the robot navigates in changing lighting conditions.

In robotics, RGB and RGB-D (i.e., with depth) cameras are thus complemented by infrared (IR) cameras, also referred to as thermal cameras when detecting long-wave radiation, for gaining extended visibility during nighttime or adverse weather conditions. These sensors can provide valuable information not detectable by human eyes or traditional cameras, such as heat signatures and thermal patterns. By incorporating thermal and IR cameras into the sensor suite, mobile robots can detect and track animated targets by following heat signatures, and navigating low-visibility environments, thus operating in a broader range of conditions. These specialized sensors can significantly enhance a robotic system’s situational awareness and overall performance.

Neuromorphic vision sensors [16], also known as event cameras [17], such as the Dynamic Vision Sensor (DVS) [18], overcome the limitations of standard cameras by encoding pixel intensity changes rather than an absolute brightness value and providing very high dynamic ranges as well as no motion blur during rapid motions. However, due to the asynchronous nature of event cameras, measurements of the situations are only provided in case of variations in the perceived scene brightness that are often caused by the motion of the sensor itself. Hence, they can measure a sparse set of points, usually in correspondence with edges. To perceive a complete dense representation of the environment, such sensors onboard mobile robots are typically combined with the traditional pixels of RGB cameras, as in the case of the Dynamic and Active-Pixel Vision Sensor (DAVIS) [19] or Asynchronous Time-Based Image Sensor (ATIS) [20] cameras. Nevertheless, algorithms have also been proposed to reconstruct traditional intensity frames by integrating events over time to facilitate the reuse of preexisting image processing approaches [21] or even produce a high-frame-rate video by interpolating new frames [22].

Ranging sensors, such as small-factor solid-state Light Detection and Rangings (LIDARs) or ultrasound sensors, are the second most dominant group of employed exteroceptive sensors onboard mobile robots. One-dimensional LIDARs and ultrasound sensors are used mainly in flying mobile robots to measure their flight altitude but only measure limited information about their environments, while ground mobile robots can utilize the sensor to measure the distance to nearest object. Two- and three-dimensional LIDARs accurately perceive the surroundings in 360°, and the newer technological advancements have reduced their size and weight. However, utilizing these sensors onboard small-sized robotic platforms is still not feasible, and the high acquisition cost hampers the adoption of this sensor by the broad commercial market. Even for autonomous cars, a pure-vision system, which may include event cameras, is often more desirable from an economic perspective.

Frequency-modulated continuous wave (FMCW) radio detection and ranging (RADAR) systems transmit a continuous waveform with a changing frequency over time. This changing frequency creates a frequency sweep, or chirp, continuously transmitted and reflected off objects in the radar’s field of view. An FMCW radar can determine the detected objects’ range, velocity, and angle by measuring the frequency shift between the transmitted and received signals. Millimeter-wave (mmWave) radars use short-wavelength electromagnetic waves in the GHz range to obtain millimeter accuracy and are a valid alternative to LIDAR for range measurements in robotics [23]. Even though they have a lower angular resolution and more limited range than LIDAR, they offer a smaller form factor and a lower cost. Additionally, they can estimate the speed of objects by leveraging the Doppler effect. Nevertheless, mmWave radars can detect transparent surfaces that are challenging to see with LIDAR. As such, they have become an attractive option for robotic applications where cost, form factor, and the detection of transparent surfaces are crucial.

A Radiofrequency (RF) signal is another technology based on signal processing that allows a robot to infer its global position by estimating its distance with one or multiple base stations. Differently from GPS, RF signals may be able to provide positioning information in indoor environments as well, even though range measurements require it to be fused with other sources of motion estimation, e.g., from an IMU. Contrary to mmWave radars, RF-based localization or mapping is far less precise, but newer technology such as 5G promises superior performance. However, a drawback of these approaches is that they require synchronization between the antenna and the receiver for computing a time of arrival (TOA) and possibly line-of-sight (LOS) communication, especially when only one antenna is available. Other RF measurements, such as the time difference of arrival (TDOA), allow the release of the synchronization requirement or the computation of the position in a non-line-of-sight (NLOS) scenario by extracting information from matrices of channel state information (CSI). Kabiri et al. [24] provide an exhaustive review of RF-based localization methods and give an outlook on current challenges and future research directions.

3. Direct Situational Comprehension

Some research works focus on transforming the complex raw measurements provided by sensors into more tractable information with different levels of abstraction, i.e., feature extraction for an accurate scene understanding, without building a complex long-term model of the situation.

3.1. Monomodal

These algorithms utilize a single sensor source to extract useful environmental information. The two primary sensor modalities used in robotics are vision-based sensors and range-based sensors for the rich and plentiful amount of information in their scene observations.

Vision-based comprehension started with the early works of Viola and Jones [25] presenting an object-based detector for face detection using Haar-like features and Adaboost feature classification. Following works for visual detection and classification tasks such as [26][27][28][29][30][26,27,28,29,30] utilized well-known image features, e.g., Scale-Invariant Feature Transform (SIFT) [31], Speeded-Up Robust Features (SURF) [32], Histogram of Oriented Gradients (HOG) [33], along with Support Vector Machine (SVM)-based classifiers [34]. The mentioned methods focused on extracting only a handful of helpful information from the environment, such as pedestrians, cars, and bicycles, showing degraded performance in difficult lighting conditions and occlusions.

With the establishment of DL in computer vision and image processing for robot vision, recent algorithms in the literature robustly extract the scene information utilizing Convolutional Neural Networks (CNNs) in the presence of different lighting conditions and occlusions. In computer vision, different types of DL-based methods exist based on the kind of scene-extracted information. Algorithms such as Mask-RCNN [35][36], RetinaNet [36][37], TensorMask [37][38], TridentNet [38][39], and Yolo [39][35] perform detection and classification of several object instances, and they either provide a bounding box around the object or perform a pixelwise segmentation for each object instances. Other algorithms such as [40][41][42][43][44][40,41,42,43,44] perform a dense semantic segmentation, being able to extract all relevant information from the scene. Additionally, Kirillov et al. [45], Cheng et al. [46] aimed to detect and categorize all object instances in an image, regardless of their size or position, through a panoptic segmentation while still maintaining a semantic understanding of the scene. This task is particularly challenging because it requires integrating pixel-level semantics and instance-level information. Two-dimensional scene graphs [47][48][47,48] could then connect the semantic elements detected by the panoptic segmentation in a knowledge graph that let one reason about relationships. Moreover, this knowledge graph facilitates the inference of single behaviors and interactions among the participants in a scene, animate or inanimate [49].

To overcome the limitations of the visible spectrum in the absence of light, thermal infrared sensors have been researched to augment situational comprehension. For instance, one of the earlier methods [50] found humans in nighttime images by extracting thermal shape descriptors that were then processed by Adaboost to identify positive detection. In contrast, newer methods [51][52][51,52] utilize deep CNNs on thermal images for identifying different objects in the scene, such as humans, bikes, and cars. Though research in the field of event-based cameras for scene understanding is not yet broad, some works such as [53][85] present an approach for dynamic object detection and tracking using event streams, whereas [54][53] present an asynchronous CNN for detecting and classifying objects in real time. Ev-SegNet [55][54] is an approach that introduced one of the first semantic segmentation pipelines based on event-only information.

Range-based comprehension methods with earlier works such as [56][55] and ref. [57][56] presented object detection for range images from 3D LIDAR using an SVM for object classification. However, the authors in [58][57] utilized range information to identify the terrain around the robot and objects and used an SVMs to classify each category. Nowadays, deep learning is also playing a fundamental role in scene understanding using range information. Some techniques utilize CNNs for analyzing range measurements translated into camera frames by projecting the 3D points onto an abstract image plane. For example, Rangenet++ [59][60][58,59], SqueezeSeg [61][60], and SqueezeSegv2 [62][61] project the 3D point-cloud information onto 2D range-based images for performing the scene understanding tasks. The above-mentioned methods argue that CNN-based algorithms can be directly applied to range images without using expensive 3D convolution operators for point cloud data. Others apply CNNs directly on the point cloud information for maximizing the preservation of spatial information. Approaches such as PointNet [63][62], PointNet++ [64][63], TangentConvolutions [65][64], DOPS [66][65], and RandLA-Net [67][66] perform convolutions directly over the 3D point cloud data to semantically label the point cloud measurements.

3.2. Multimodal

The fusion of multiple sensors for situational comprehension allows algorithms to increase their accuracy by observing and characterizing the same environment quantity but with different sensor modalities [68][86]. Algorithms combining RGB and depth information have been widely researched due to the easy availability of the sensors publishing RBG-D information. González et al. [69][67] studied and presented the improvement of the fusion of multiple sensor modalities (RGB and depth images), numerous image cues, and various image viewpoints for object detection, whereas Lin et al. [70][68] combined 2D segmentation and 3D geometry understanding methods to provide contextual information for classifying the categories of the objects and identifying the scene in which they are placed. Several algorithms classifying and estimating the pose of objects using CNNs, such as [71][69], PoseCNN [72][70], DenseFusion [73][74][75][71,72,73], rely extensively on RBG-D information. These methods are primarily employed for object manipulation tasks, using robotic manipulators fixed on static platforms or mobile robots.

Alldieck et al. [76][87] fused RGB and thermal images from a video stream using contextual information to access the quality of each image stream to combine the information from the two sensors accurately, whereas methods such as MFNet [77][74], RTFNet [78][75], PST900 [79][76], and FuseSeg [80][77] combined the potential of RGB images along with thermal images using CNN architectures for the semantic segmentation of outdoor scenes, providing accurate segmentation results even in the presence of degraded lighting conditions. Zhou et al. [81][88] proposed ECFFNet to perform the fusion of RGB and thermal images at the feature level, which provided complementary information, effectively improving object detection in different lighting conditions. Spremolla et al. [82][89], Mogelmose et al. [83][90] performed a fusion of RGB, depth, and thermal camera computing descriptors in all three image spaces and fused them in a weighted average manner for efficient human detection.

Dubeau et al. [84][91] fused the information from an RGB and depth sensor with an event-based camera cascading the output of a deep Neural Network (NN) based on event frames with the output from a deep NN for RBG-D frames for a robust pose tracking of high-speed moving objects. ISSAFE [85][78] is another approach that combines event-based CNN with an RGB-based CNN using an attention mechanism to perform semantic segmentation of a scene, utilizing the event-based information to stabilize the semantic segmentation in the presence of high-speed object motions.

To improve situational comprehension using 3D point cloud data, methods have been presented that combine information extracted over RGB images with their 3D point cloud data to accurately identify and localize the objects in the scene. Frustrum PointNets [86][79] performed 2D detection over RGB images which were projected to a 3D viewing frustum from which the corresponding 3D points were obtained, to which a PointNet [63][62] was applied for object instance segmentation, and an amodal bounding box regression was performed. Methods such as AVOD [87][88][80,81] extract features from both RGB and 3D point clouds projected to a bird’s eye view and fuse them to provide 3D bounding boxes for several object categories. MV3D [89][82] extract features from RGB images and 3D point cloud data from the front view as well as a bird’s eye view to fuse them in a Region of Interest (RoI) pooling, predicting the bounding boxes as well as the object class. PointFusion [90][83] employs an RGB and 3D point cloud fusion architecture which is unseen and object-specific and can work with multiple sensors providing depth.

Direct situational comprehension algorithms only provide the representation of the environment at a given time instant and mostly discard the previous information, not creating a long-term map of the environment. In this regard, the extracted knowledge can thus be transferred to the subsequent layer of accumulated situational comprehension.

4. Accumulated Situational Comprehension

A greater challenge consists of building a long-term multiabstraction model of the situation, including past information. Even small errors not considered at a particular time instant can cause a high divergence between the state of the robot and the map estimate over time.

4.1. Motion Estimation

The motion estimation component is responsible for estimating the state of the robot directly, using the sensor measurements from single/multiple sources and the inference provided by the direct situational comprehension component (see Section 3.1). While some motion estimation algorithms only use real-time sensor information to estimate the robot’s state, others estimate the robot’s state inside a pregenerated environment map. Early methods estimated the state of the robot based on filtering-based sensor fusion techniques such as an Extended Kalman Filter (EKF), an Unscented Kalman Filter (UKF), and Monte Carlo Localization (MCL). Methods such as those in [91][92][92,93] use MCL, providing a probabilistic hypothesis of the state of the robot directly, using the range measurements from a range sensor. Anjum et al. [93][94] performed a UKF based fusion of several sensor measurements such as gyroscopes, accelerometers, and wheel encoders to estimate the motion of the robot. Kong et al. [94][95], Teslic et al. [95][96] performed an EKF based fusion of odometry from robot wheel encoders and measurements from a prebuilt map of line segments to estimate the robot state, whereas Chen et al. [96][97] used a prebuilt map of corner features. Ganganath and Leung [97][98] presented both UKF and MCL approaches for estimating the pose of the robot using wheel odometry measurements and a sparse prebuilt map of visual markers detected with an RGB-D camera. In contrast, Kim and Kim [98][99] presented a similar approach using ultrasound distance measurements with respect to an ultrasonic transmitter.

The simplified mathematical models are subject to several assumptions that limited earlier motion estimation methods. Newer methods try to improve these limitations by providing mathematical improvements over the earlier methods and accounting for delayed measurements between different sensors, such as the UKF developed by Lynen et al. [99][100] and the EKF by Sanchez-Lopez et al. [100][101], which compensate for time-delayed measurements in an iterative nature for a quick convergence to the actual state. Moore and Stouch [101][102] presented an EKF/UKF algorithm, well-known in the robotics community, which can take an arbitrary number of heterogeneous sensor measurements for the estimation of the robot state. Wan et al. [102][103] used an improved version of a Kalman filter called the error-state Kalman filter, which used measurements from RTK GPS, LIDAR, and IMU for a robust state estimation. Liu et al. [103][104] presented a multi-innovation UKF (MI-UKF), which utilized a history of innovations in the update stage to improve the accuracy of the state estimate; it fused IMU, encoder, and GPS data and estimated the slip error components of the robot.

The motion estimation of robots using a Moving-Horizon Estimation (MHE) has also been studied in the literature where methods such as in [104][105] fuse wheel odometry and LIDAR measurements using an MHE scheme to estimate the state of the robot, claiming a robustness over any outliers in the LIDAR measurements. Liu et al. [105][106], Dubois et al. [106][107] studied a multirate MHE sensor fusion algorithm to account for sensor measurements obtained at different sampling rates. Osman et al. [107][108] presented a generic MHE-based sensor fusion framework for multiple sensors with different sampling rates, compensating for missed measurement, outlier rejection, and satisfying real-time requirements.

Recently, motion estimation algorithms of mobile robots using factor-graph-based approaches have also been extensively studied as they have the potential to provide a higher accuracy. Factor graphs can encode either the entire history of the robot state or go back up to a fixed time, i.e., fixed-lag smoothing methods, capable of handling different sensor measurements in terms of nonlinearity and varying frequencies optimally and intuitively. Ranganathan et al. [108][109] presented one of the first graph-based approaches using square-root fixed-lag smoothing [109][110], for fusing information from odometry, visual, and GPS sensors, whereas Indelman et al. [110][111] presented an improved fusion based on an incremental smoothing approach, iSAM2 [111][112], fusing IMU, GPS and visual measurements from a stereocamera setup. The methods presented in [112][113][113,114] utilized sliding-window factor graphs for estimating the robot’s state by fusing several wheel odometry sources along with global pose sources. Mascaro et al. [114][115] also presented a sliding-window factor graph combining visual odometry information, IMU, and GPS information to estimate the drift between the local odometry frame with respect to the global frame, instead of directly estimating the robot state. Qin et al. [115][116] presented a generic factor graph-based framework for fusing several sensors. Each sensor served as a factor connected with the robot’s state, quickly adding them to the optimization problem. Li et al. [116][117] proposed a novel graph-based framework for sensor fusion that combined data from a stereo visual–inertial navigation system, i.e., S-VINS, and multiple GNSS sources in a semitightly coupled manner. The S-VINS output was an initial input to the position estimate derived from the GNSS system in challenging environments where GNSS data are limited. By integrating these two data sources, the framework improved the robot’s global pose estimation accuracy.

4.2. Motion Estimation and Mapping

4.2. Motion Estimation and Mapping

This section covers the approaches which estimate not only the robot motion given the sensor measurements but also the map of the environment, i.e., they model the scene in which the robot navigates. These approaches are commonly known as SLAM, which is one the widely researched topics in the robotics industry [117][118], as it enables a robot to use scene modeling without the requirement of prior maps and in applications where initial maps cannot be obtained easily. Vision and LIDAR sensors are the two primary exteroceptive sensors used in SLAM for map modeling [15][118][15,119]. As in the case of motion estimation methods, SLAM can be performed using a single-sensor modality or using information from different sensor modalities and combining it with scene information extracted from the direct situational comprehension module (see Section 3). SLAM algorithms have a subset of algorithms that do not maintain the entire map of the environment and do not perform stages of loop closure called odometry estimation algorithms, where Visual Odometry (VO) becomes a subset of visual SLAM (VSLAM) and LIDAR odometry a subset of LIDAR SLAM.

4.2.1. Filtering

Earlier SLAM approaches such as in [119][120][121][120,121,122] applied an EKF to estimate the robot pose by simultaneously adding/updating the landmarks observed by the robots. However, these methods were quickly discarded as their computational complexity increased with the number of landmarks, and they did not efficiently handle nonlinear measurements [122][123]. Accordingly, FastSLAM 1.0 and FastSLAM 2.0 [123][124] were proposed as improvements to EKF-SLAM, which combined particle filters to calculate the trajectory of the robot with individual EKFs for landmark estimation. These techniques also suffered from the limitations of sample degeneracy when sampling the proposal distribution and problems with particle depletion.

4.2.2. Metric Factor Graphs

Modern SLAM, as described in [117][118], has moved to a more robust and intuitive representation of the state of the robot along with sensor measurements, as well as the environment map to create factor graphs as presented in [109][111][124][125][126][110,112,125,126,127]. Factor-graph-based SLAM, based on the type of map used for the environmental representation and optimization, can be divided into metric and metric–semantic factor graphs.

A metric map encodes the understanding of the scene at a geometric level (e.g., lines, points, and planes), which is utilized by a SLAM algorithm to model the environment. Parallel tracking and mapping (PTAM) was one of the first feature-based monocular algorithms which split the tracking of the camera in one thread and the mapping of the key points in another, performing a batch optimization for optimizing both the camera trajectory and the mapped 3D points. Similar extensions to the PTAM framework are ORB-SLAM [127][128] and REMODE [128][129] which create a semidense 3D geometric map of the environment while estimating the camera trajectory. As an alternative to feature-based methods, direct methods use the image intensity values instead of extracting features to track the camera trajectory even in featureless environments such as semidense direct VO, called DSO [129][130] and LDSO [130][131], improving the DSO by adding loop closure into the optimization pipeline, whereas LSD-SLAM [131][132], DPPTAM [132][133], and DSM [133][134] perform a direct monocular SLAM tracking camera trajectory along with building a semidense model of the environment. Methods have also been presented that combine the advantages of both feature-based and intensity-based methods, such as SVO [134][135] performing high-speed semi-direct VO, CPA-SLAM [135][136], and loosely coupled semidirect SLAM [136][137] utilizing image intensity values for optimizing the local structure and image features to optimize the key-frame poses.

Deep Learning models may be used effectively to learn from data to estimate the motion from sequential observations. Hence, their online prediction could be better before initializing the factor-graph optimization problem closer to the correct solution [137][138][138,139]. MagicVO [139][140] and DeepVO [140][141] study supervised end-to-end pipelines to learn monocular VO from data not requiring complex formulations and calculations for several stages, such as feature extraction and matching, keeping the VO implementation concise and intuitive. There are also some supervised approaches such as LIFT-SLAM [141][142], RWT-SLAM [142][143], and [143][144][144,145] that utilize deep neural networks for improved feature/descriptor extraction. Alternatively, unsupervised approaches [145][146][147][146,147,148] exploit the brightness constancy assumption between frames in close temporal proximity to derive a self-supervised photometric loss. The methods have gained momentum, enabling the learning from unlabeled videos and continuously adapting the DL models to newly seen data [148][149][149,150]. Nevertheless, monocular visual-only methods suffer from the considerable limitation of being unable to estimate the metric scale directly and accurately track the robot poses in the presence of pure rotational or rapid/acrobatic motion. RAUM-VO [150][151] mitigates the rotational drift by integrating an unsupervised learned pose with the motion estimated with a frame-to-frame epipolar method [151][152].

To overcome these limitations, cameras are combined with other sensors, for example, synchronizing them with an IMU, giving rise to the research line working on monocular Visual–Inertial Odometry (VIO). Methods such as OKVIS [152][153], SVO-Multi [153][154], VINS-mono [154][155], SVO+GTSAM [155][156], VI-DSO [156][157], BASALT [157][158] are among the most outstanding examples. Delmerico and Scaramuzza [158][159] benchmarked all the open-source VIO algorithms and compared their performance on computationally demanding embedded systems. Furthermore, VINS-fusion [159][160] and ORB-SLAM2 [160][161] provide a complete framework capable of fusing either monocular, stereo, or RGB-D cameras with an IMU to improve the overall tracking accuracy of the algorithms. ORB-SLAM3 [161][162] presents improvement over ORB-SLAM2 by performing even multimap SLAM using different visual sensors along with an IMU.

Methods have been presented that perform thermal inertial odometry for performing autonomous missions using robots in visually challenging environments [162][163][164][165][163,164,165,166]. The authors in TI-SLAM [166][167] not only performed thermal inertial odometry but also provided a complete SLAM back end with thermal descriptors for loop closure detection. Mueggler et al. [167][168] presented a continuous-time integration of event cameras with IMU measurements, improving by almost a factor of four the accuracy over event-only EVO [168][169]. Ultimate SLAM [169][170] combines RGB cameras with event cameras along with IMU information to provide a robust SLAM system in high-speed camera motions.

LIDAR odometry and SLAM for creating metric maps have been widely researched in robotics to create metric maps of the environment such as Cartographer [170][171] and Hector-SLAM [171][172], performing a complete SLAM using 2D LIDAR measurements, and LOAM [172][173] and FLOAM [173][174] providing a parallel LIDAR odometry and mapping technique to simultaneously compute the LIDAR velocity while creating accurate 3D maps of the environment. SUMA [174][175] improves the performance over LOAM using dense projective ICP over surfel-based maps. To further improve the accuracy, techniques have been presented which combine vision and LIDAR measurement as in LIDAR-monocular visual odometry (LIMO) [175][176] and LVI-SLAM [176][177], combining monocular image tracking with precise depth estimates from LIDAR measurements for motion estimation. Methods such as LIRO [177][178] and VIRAL-SLAM [178][179] couple additional measurements such as ultrawide band (UWB) with visual and IMU sensors for robust pose estimation and map building. Other methods such as HDL-SLAM [179][180] and LIO-SAM [180][181] tightly couple IMU, LIDAR, and GPS measurements for globally consistent maps.

While significant progress has been demonstrated using metric SLAM techniques, one of the limitations of these methods is the lack of information extracted from the metric representation, such as (1) a lack of semantic knowledge of the environment, (2) an inefficiency in identifying static and moving objects, and (3) an inefficiency in distinguishing different object instances.

4.2.3. Metric–Semantic Factor Graphs

As explained in Section 3, the advancements in direct situational comprehension techniques have enabled a higher-level understanding of the environments around the robot, leading to the evolution of metric–semantic SLAM overcoming the limitations of traditional metric SLAM and providing the robot with the capabilities of human-level reasoning. Several approaches to address these solutions have been explored, which are discussed in the following.

Object-based metric–semantic SLAM builds a map of the instances of the different detected object classes on the given input measurements. The pioneer works SLAM++ [181][199] and [182][190] created a graph using camera pose measurements and the objects detected from previously stored database to jointly optimize the camera and the object poses. Following these methods, many object-based metric–semantic SLAM techniques were presented, such as [183][184][185][186][187][188][189][190][191,192,200,201,202,203,204,205] not requiring a previously stored database and jointly optimizing the camera poses, 3D geometric landmarks, as well as the semantic object landmarks. LIDAR-based metric–semantic SLAM techniques such as LeGO-LOAM [191][196] extract planar/edges features from semantics such as ground planes to improve the performance over metric SLAM LOAM [172][173]. SA-LOAM [192][206] utilizes semantically segmented 3D LIDAR measurements to generate a semantic graph for robust loop closures. The primary sources of inaccuracies of these techniques are due to an extreme dependence on the existence of objects, as well as (1) the uncertainty in object detection, (2) the partial views of the objects which are still not handled efficiently, and (3) no consideration of the topological relationship between the objects. Moreover, most of the previously presented approaches cannot handle dynamic objects. Research works on filtering dynamic objects from the scene, such as DynaSLAM [193][207], or adding dynamic objects to the graph, such as VDO-SLAM [194][193] and RDMO-SLAM [195][208], reduce the influence of the dynamic objects on the robot pose estimate obtained from the optimized graph. Nevertheless, they cannot handle complex dynamic environments and only generate a sparse map without topological relationships between these dynamic elements.

SLAM with a metric–semantic map augments the output metric map given by SLAM algorithms with semantic information provided by scene understanding algorithms, as in [196][197][198][209,210,211] or with SemanticFusion [199][212], Kimera [200][195], and Kimera-Multi [201][213]. These methods assume a static environment around the robot; thus, the quality of the metric–semantic map of the environment can degrade in the presence of moving objects in the background. Another limitation of these methods is that they do not utilize useful semantic information from the environment to improve the robot’s pose estimation and thus the map quality.

SLAM with semantics to filter dynamic objects utilizes the available semantic information of the input images provided by the scene understanding module only to filter badly conditioned objects (i.e., moving objects) from pictures given to the SLAM algorithms, as in [202][203][204][214,215,216] for image-based approaches or SUMA++ [205][182] for a LIDAR-based approach. Although these methods increase the accuracy of the SLAM system by filtering moving objects, they neglect the rest of the semantic information from the environment to improve the robot’s pose estimation.

4.3. Mapping

This section covers the recent works which focus only on the complex high-level representations of the environment. Most of these methods assume the SLAM problem to be solved and focus only on the scene representation. An ideal environmental representation must be efficient concerning the required resources, capable of reasonably estimating regions not directly observed, and flexible enough to perform reasonably well in new environments without any significant adaptations.

Occupancy mapping is a method for constructing an environment map in robotics. It involves dividing the environment into a grid of cells, each representing a small portion of the space. The occupancy of a cell represents the likelihood of that cell being occupied by an obstacle or not. Initially, all cells in the map can be considered unknown or unoccupied. As the robot moves and senses the environment, the occupancy of cells is updated based on sensor data. One of the most popular approaches in this category is Octomap [206][217]. It represents the grid of cells through a hierarchical structure that allows a more efficient query of the occupancy probability in a specific location.

The adoption of Signed-Distance Field (SDF)-based approaches in robotics is well-established to represent the robot’s surroundings [207][218] and enable a planning of a safe trajectory towards the mission goal [208][219]. In general, an SDF is a three-dimensional function that maps points of a metric space to the distance to the nearest surface. SDFs can represent distances in any number of dimensions, define complex geometries and shapes with an arbitrary curvature, and are widely used in computer graphics. However, a severe limitation of SDFs is that they can only represent watertight surfaces, i.e., surfaces that divide the space between inside and outside [209][220].

An SDF has two main variations, Euclidean Signed-Distance Field (ESDF) and Truncated Signed-Distance Field (TSDF), which usually apply to a discretized space made of voxels. On the one hand, an ESDF gives the distance to the closest obstacle for free voxels and the opposite for occupied ones. They have been used for mapping in FIESTA [210][221], where the authors exploited the property of direct modeling free space for collision checking and the gradient information for planning [211][222], while dramatically improving their efficiency. On the other hand, a TSDF relies on the projective distance, which is the length measured along the sensor ray from the camera to the observed surface. The distances are calculated only within a specific radius around the surface boundary, known as the truncation radius [212][223]. This helps improve computational efficiency and reduce storage requirements while accurately reconstructing the observed scene. TSDFs have been demonstrated in multiple works such as Voxgraph [213][224], Freetures [214][225], Voxblox++ [215][226], or the more recent Voxblox-Field [216][227]. They can create and maintain globally consistent volumetric maps that are lightweight enough to run on computationally constrained platforms and demonstrate that the resulting representation can navigate unknown environments. A panoptic segmentation was rated with TSDFs by Narita et al. [217][228] for labeling each voxel semantically while differentiating between stuff, e.g., the background wall and floor, from things, e.g., movable objects. Furthermore, Schmid et al. [218][229] leveraged pixelwise semantics to maintain temporal consistency and detect changes in the map caused by movable objects, hence surpassing the limitations of a static environment assumption.

Implicit neural representations (INR) (sometimes also referred to as coordinate-based representations) are a novel way to parameterize signals of all kinds, even environments parameterized as 3D points clouds, voxels, or meshes. With this in mind, scene representation networks (SRNs) [219][230] have been proposed as a continuous scene representation that encodes both geometry and appearance and can be trained without any 3D supervision. It has been shown that SRNs generalize well across scenes, can learn geometry and appearance priors, and are helpful for novel view synthesis, few-shot reconstruction, joint shape, and appearance interpolation in the unsupervised discovery of nonrigid models. In [220][231], a new approach was presented, capable of modeling signals with fine details and accurately capturing their spatial and temporal derivatives. Based on periodic activation functions, that approach demonstrated that the resulting neural networks referred to as sinusoidal representation networks (SIRENs) were well suited for representing complex signals, including 3D scenes.

Neural radiance fields (NeRF) [221][232] exploit the framework of INRs to render realistic 3D scenes by a differential process that takes as input a ray direction and predicts the color and density of the scene’s structure along that ray. Sucar et al. [222][233] pioneered the first application of NeRF to SLAM for representing the knowledge of the 3D structure inside the weights of a deep NN. For their promising results, that research prospect attracted numerous following works that continuously improved the fidelity of the reconstructions and the possibility of updating the knowledge of the scene while maintaining previously stored information [223][224][225][226][227][234,235,236,237,238].

Differently from the previous dense environment representation methods, which are helpful for autonomous navigation, sparser scene representations also exist, such as point clouds and surfel maps, which are more commonly used for more straightforward tasks such as localization. Remarkably, a surfel, i.e., a surface element, is defined by its position in 3D space, the surface normal, and other attributes such as color and texture. Their use has been extensively explored in recent LIDAR-based SLAM to efficiently represent a 3D map that can be performed as a consequence of optimization following revisited places, i.e., loop closure [228][229][239,240].

Three-dimensional scene graphs have also been researched to represent a scene, such as in [230][231][232][241,242,243], which build a model of the environment, including not only metric and semantic information but also essential topological relationships between the objects of the environment. They can construct an environmental graph spanning an entire building, including the semantics of objects (class, material, and shape), rooms, and the topological relationships between these entities. However, these methods are executed offline and require an available 3D mesh of the building with the registered RGB images to generate the 3D scene graphs. Consequently, they can only work in static environments.

Dynamic Scene Graphs (DSGs) are an extension of the aforementioned scene graphs to include dynamic elements (e.g., humans) of the environment in an actionable representation of the scene that captures geometry and semantics [233][244]. Rosinol et al. [234][245] presented the first method to build a DSG automatically using the input of a VIO [200][195]. Furthermore, it allowed the tracking of the pose of humans and optimized the mesh based on the deformation of the space induced by detected loop closures. Although these results were promising, their main drawback was that the DSG was built offline, and the VIO first created a 3D-mesh-based semantic map fed to the dynamic scene generator. Consequently, the SLAM did not use these topological relationships to improve the accuracy of the spatial reconstruction of the robot trajectory. Moreover, except for humans, the remaining topological relationships were considered purely static, e.g., chairs or other furniture were fixed to the first detection location.

More recently, Hydra [235][249] has implemented the scene graph construction into a real-time capable system relying on a highly parallelized architecture. Moreover, it can optimize an embedded deformation graph online, after a loop closure detection. Remarkably, the information in the graph allows the creation of descriptors based on histograms of objects and visited places that can be matched robustly with previously seen locations.

Therefore, DSGs, although in their infancy stage, are shown to be a practical decision-making tool that enables robots to perform autonomous tasks. For example, Ravichandran et al. [236][250] demonstrated how they could be used for learning a trajectory policy by turning a DSG into a graph observation that served as input to a Graph Neural Network (GNN). A DSG may also be used for planning challenging robotic tasks, as proposed in the Taskography benchmark [237][251].

Lastly, one of the main features of a DSG is the possibility to perform queries and predictions of the future evolution of the scene based on dynamic models linked with the agents or physical elements [233][244]. In addition, an even more intriguing property is their application to scene change detection or to the newly formalized semantic scene variability estimation task, which sets as a goal the prediction of long-term variation in location, semantic attributes, and topology of the scene objects [238][252].

5. Situational Projection

In robotics, the projection of the situation is essential for reasoning and the execution of a planned mission [239][253]. The comprehended information can be projected in the future to predict the future state of the robot by using a dynamic model [100][101] as well as the dynamic entities in the environment. In order to predict the future state of a robot, the projection component requires more effort in producing models that can forecast the dynamic agents’ behavior and how the scene is affected by changes that shift its appearance over time. Remarkably, numerous research areas address specific forecast models, the interactions between agents, and the surrounding environment’s evolution.

Behavior Intention Prediction

Behavior intention prediction (BIP) focuses on developing methods and techniques to enable autonomous agents, such as robots, to predict the intentions and future behaviors of humans and other agents they interact with. This research is essential for effective communication, collaboration, and decision-making. BIP typically involves integrating information from multiple sources, such as visual cues, speech, and contextual information, e.g., coming from the comprehension layer. This research has numerous applications, including human–robot collaboration, autonomous driving, and healthcare.

Scene contextual factors, such as traffic rules, uncertainties, and interpretation of goals, are crucial for inferring the interaction among road actors [240][255] and the safety of the current driving policy [241][256]. Specifically, interaction may be due to social behavior or physical events such as obstacles or dynamic clues, e.g., traffic lights, that influence the decision of the driver [242][257]. Multimodal perception is exploited to infer whether pedestrians are about to cross [243][258] or vehicles to change lanes [244][259]. Mostly, recent solutions rely on DL models such as CNNs [245][246][260,261], Recurrent Neural Networks (RNNs) [242][247][257,262], GNNs [248][263], or on the transformer attention mechanism, which can estimate the crossing intention using only pedestrian bounding boxes as input features [249][264]. Otherwise, causality relations are studied by explainable AI models to make risk assessment more intelligible [250][265]. Lastly, simulation tools of road traffic and car driving, such as CARLA [251][266], can be used as forecasting models provided that mechanisms to adapt synthetically generated data to the reality are put in place [252][267].

BIP then requires fulfilling the task of predicting the trajectory of the agents. For an AV, the input to the estimation is represented by the historical sequence of coordinates of all traffic participants and possibly other contextual information, e.g., velocity. The task is then to generate a plausible progression of the future position of other pedestrian vehicles. Methods for predicting human motion have been exhaustively surveyed by Rudenko et al. [253][268], and regarding vehicles, by Huang et al. [254][269], who classified the approaches into four main categories: physics-based, machine learning, deep learning, and reinforcement learning. Moreover, the authors determined the various contextual factors that may constitute additional inputs for the algorithms similar to those previously described. Finally, they acknowledged that complex deep learning architectures were the de facto solution for real-world implementation for their performance.

Additionally, DL allows for multimodal outputs, i.e., the generation of a diverse trajectory with an associated probability, and for multitask learning, i.e., simultaneously producing a likelihood of a specific behavior. Behavior prediction is, in fact, a separate task, more concerned with assigning to the road participants an intention of performing a particular action.