Breslow thickness, i.e., the depth of penetration of malignant melanocytes into the skin measured in millimeters, is the most important prognostic factor in melanoma patients [7]. Currently, melanoma is diagnosed by naked-eye and dermoscopic examination using the ABCDE classification by Nachbar and Stolz [8], which stands for Asymmetry, Border irregularities, Colour differences, large Diameter, and Evolution over time. Even when performed by skilled dermatologists, the technique has a relatively low specificity (56–65%) and moderate sensitivity (47–89%) [9]. Introducing dermoscopy to trained dermatologists increased sensitivity from 69% to 87% and specificity from 88% to 91% [10]. Dermoscopy with polarized light is generally used as a complementary technique to highlight additional features in skin lesions [11]. However, to exclude false-negative findings that could lead to metastasis and death, suspicious lesions are excised. These excisions are invasive and often unnecessary: one melanoma is detected for every 10 to 60 biopsies performed [9,12].

This review focuses on studies that used various measurement setups, procedures and equipment for infrared thermography to diagnose skin cancer in humans. Some basic findings and concepts of thermography are also presented. Post-processing of IR images, processing algorithms and classification are outside the scope of this review. Its purpose is to summarize recent developments and new perspectives for future research on skin cancer diagnosis with infrared thermography.

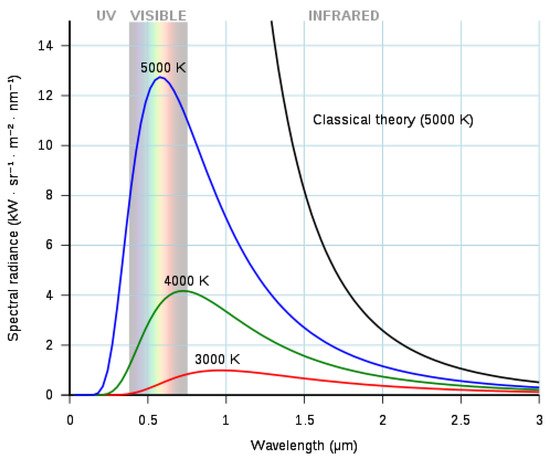

where λ is the wavelength, T is the absolute temperature, h is the Planck constant, k is the Boltzmann constant and c is the speed of light in a vacuum.

Figure 1. Radiance of blackbodies for various temperatures.
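To make Planck's law concrete, the following Python sketch (an illustration, not taken from the reviewed studies) evaluates the spectral radiance for a few temperatures and locates each curve's maximum by a brute-force scan, which can be compared with Wien's displacement law, λ_max ≈ 2898 μm·K / T. The function names and the 0.5–50 μm scan range are illustrative choices.

```python
import math

# Physical constants in SI units (exact CODATA values)
H = 6.62607015e-34   # Planck constant, J*s
K_B = 1.380649e-23   # Boltzmann constant, J/K
C = 2.99792458e8     # speed of light in vacuum, m/s


def spectral_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Planck's law: black-body spectral radiance in W/(m^2*sr*m)."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    return prefactor / math.expm1(exponent)  # expm1(x) = exp(x) - 1


def peak_wavelength_um(temperature_k: float, lo_um: float = 0.5,
                       hi_um: float = 50.0, steps: int = 20000) -> float:
    """Find the radiance maximum by scanning a wavelength grid (no Wien's law used)."""
    best_um, best_radiance = lo_um, 0.0
    for i in range(steps + 1):
        lam_um = lo_um + (hi_um - lo_um) * i / steps
        radiance = spectral_radiance(lam_um * 1e-6, temperature_k)
        if radiance > best_radiance:
            best_um, best_radiance = lam_um, radiance
    return best_um


if __name__ == "__main__":
    for t_c in (27, 37, 100, 500):
        t_k = t_c + 273.15
        print(f"{t_c:>4} °C: peak near {peak_wavelength_um(t_k):.2f} um, "
              f"L(10 um) = {spectral_radiance(10e-6, t_k):.3e} W/(m^2 sr m)")
```

The scanned maximum for 27 °C lands close to 2898/300.15 ≈ 9.65 μm, and the radiance at any fixed wavelength rises with temperature, reproducing the qualitative behaviour of Figure 1.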

According to Vollmer [24], emissivity ϵ is a measure of the efficiency with which an object radiates thermal energy. It is defined as the ratio of the thermal radiation emitted by a material's surface to that emitted by a perfect emitter (a black body) at the same wavelength and temperature. Emissivity is a dimensionless number between 0 and 1: a black body, being a perfect emitter of heat energy, has an emissivity of 1, whereas a perfect thermal mirror has an emissivity of 0. As reported by Lee and Minkina, the commonly accepted emissivity of human skin, independent of skin pigmentation, is ϵ = 0.98 ± 0.01 for λ > 2 μm, which makes human skin a nearly perfect black body [15,25,26,27]. Many earlier studies have confirmed this emissivity of 0.98 ± 0.01 [28,29,30,31,32,33]. In 2009, Sanchez-Marin et al. proposed a new approach to evaluate the emissivity of human skin [34]. They concluded that human skin follows Lambert's law of diffuse reflectance and that its emissivity is almost constant between 8 and 14 μm [34]. Charlton et al. studied the influence of constitutive pigmentation on the measured emissivity of human skin [35]. They collected data from participants with varying pigmentation according to the Fitzpatrick scale and concluded that human skin emissivity is not affected by skin pigmentation [35]. They also advocate using ϵ = 0.98 for general use [35]. Several other parameters also affect emissivity: material, surface structure, angle of observation, wavelength and temperature, with material being the most important [23]. Bernard et al. showed that topical treatment of human skin with substances such as ultrasound gel, disinfectant or ointment changes its emissivity [36]. They concluded that emissivity must be included in the calculation of skin temperature, together with the environment and its temperature, because the measured surface temperature is a function of emissivity [36]. Spurious radiation originating from the environment and reflected by the skin can be largely ignored, because human skin is a nearly perfect black body. Hardy and Muschenheim concluded that dead skin can be considered a perfectly black surface with an emissivity of ϵ = 1 [31].
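Why a near-unity emissivity makes reflected radiation a minor term can be illustrated with a simple grey-body model. The Python sketch below assumes that the camera integrates over all wavelengths (Stefan–Boltzmann law), ignores atmospheric absorption, and that the camera reported an apparent temperature computed with ϵ = 1. Real cameras are band-limited (e.g. 8–14 μm) and use their own calibration, so this is only an order-of-magnitude illustration. The function name and the example temperatures (33 °C apparent skin temperature, 22 °C reflected room temperature) are hypothetical.

```python
def object_temperature_k(t_apparent_k: float, emissivity: float,
                         t_reflected_k: float) -> float:
    """Correct an apparent (eps = 1) temperature for emissivity and reflections.

    Grey-body model with total radiation (Stefan-Boltzmann); the constant
    sigma cancels, so only fourth powers of temperature remain:
        T_app^4 = eps * T_obj^4 + (1 - eps) * T_refl^4
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must lie in (0, 1]")
    t4 = (t_apparent_k**4 - (1.0 - emissivity) * t_reflected_k**4) / emissivity
    return t4 ** 0.25


if __name__ == "__main__":
    t_app = 33.0 + 273.15   # hypothetical apparent skin temperature, K
    t_refl = 22.0 + 273.15  # hypothetical reflected (room) temperature, K
    t_obj = object_temperature_k(t_app, 0.98, t_refl)
    print(f"corrected: {t_obj - 273.15:.2f} °C, correction: {t_obj - t_app:.2f} K")
```

With these numbers the correction is only about 0.2 K, which is why reflected radiation is often neglected for skin; in this model the correction grows as the emissivity drops, for example after a topical substance is applied.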

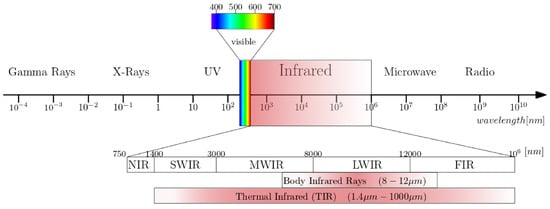

Figure 2 illustrates the electromagnetic spectrum and shows the IR spectral band in finer detail. The boundaries between the IR spectral regions vary between sources. Infrared radiation spans wavelengths of 0.75 (or 0.78)–1000 μm. The ISO 20473 scheme subdivides it as follows: Near-infrared or NIR (0.78–3 μm), Mid-infrared or MIR (3–50 μm) and Far-infrared or FIR (50–1000 μm). Here, we adopt a finer subdivision into five sub-ranges based on the response of various detectors [37]: Near-infrared or NIR (0.7–1 μm), Short-Wave Infrared or SWIR (1–3 μm), Mid-Wave Infrared or MWIR (3–5 μm), Long-Wave Infrared or LWIR (7–14 μm) and Very-Long Wave Infrared or VLWIR (12–30 μm).

Figure 2. The electromagnetic spectrum with a subdivision for infrared wavelengths.
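The two subdivisions can be encoded as simple lookup tables, which makes their differences explicit. In this Python sketch, the table and function names are illustrative, and each band is treated as a half-open interval [lo, hi), an assumption the text does not specify. A wavelength may therefore fall into two detector-based bands (LWIR and VLWIR overlap between 12 and 14 μm) or into none (5–7 μm).

```python
# (name, lower bound in um, upper bound in um), bounds as given in the text
ISO_20473 = [("NIR", 0.78, 3.0), ("MIR", 3.0, 50.0), ("FIR", 50.0, 1000.0)]
DETECTOR_BASED = [
    ("NIR", 0.7, 1.0), ("SWIR", 1.0, 3.0), ("MWIR", 3.0, 5.0),
    ("LWIR", 7.0, 14.0), ("VLWIR", 12.0, 30.0),
]


def bands_for(wavelength_um: float, scheme: list) -> list:
    """Return the names of all bands in `scheme` whose [lo, hi) interval contains the wavelength."""
    return [name for name, lo, hi in scheme if lo <= wavelength_um < hi]


if __name__ == "__main__":
    for lam in (2.0, 4.0, 6.0, 10.0, 13.0):
        print(lam, bands_for(lam, ISO_20473), bands_for(lam, DETECTOR_BASED))
```

For example, the 8–12 μm band used in medical thermography lies entirely within MIR in the ISO 20473 scheme and within LWIR in the detector-based scheme.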

1.3. Skin Cancer and Infrared Thermography

When heat increases unexpectedly, it indicates that something may be wrong. For example, increased mechanical friction generates heat and causes wear, possibly leading to material failure [38]. Similarly, elevated body heat is associated with many conditions such as inflammation and infection, and physicians already used thermobiological diagnostics in the time of Hippocrates [39,40]. As a living organism, the human body attempts to maintain homeostasis, that is, an equilibrium of all systems within the body, across all physiological processes, which leads to dynamic changes in heat emission [41]. The outcome of a complex interplay of central and local regulatory systems is reflected in the surface temperature of an extremity. Core body temperature is kept constant at depths greater than 20 mm [27]. Skin surface temperature is a useful indicator of health concerns or of physical dysfunction in processes close to the skin [42].

Biomedical infrared thermography detects the radiation emitted from the surface of the human body and reveals the heterogeneous temperature of the skin and superficial tissue [43]. Infrared emission from human skin at 27 °C lies in the wavelength range of 2–20 μm and peaks at about 10 μm. For medical applications, the narrow band of 8–12 μm is used [22]. Another term commonly used in medical IR imaging is thermal infrared (TIR) [23]. This region covers wavelengths of 1.4–1000 μm, where infrared emission is primarily heat or thermal radiation, hence the term thermography.
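How much of the skin's thermal emission falls inside these bands can be estimated by integrating Planck's law numerically. The Python sketch below assumes that skin radiates as an ideal black body at 27 °C; using ϵ = 0.98 instead would scale every band equally (if emissivity is wavelength-independent) and leave the fractions unchanged. It uses composite Simpson's rule; the function names and the step count are illustrative.

```python
import math

# Physical constants in SI units
H = 6.62607015e-34      # Planck constant, J*s
K_B = 1.380649e-23      # Boltzmann constant, J/K
C = 2.99792458e8        # speed of light in vacuum, m/s
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2*K^4)


def spectral_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Planck's law for a black body, in W/(m^2*sr*m)."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def band_fraction(lo_um: float, hi_um: float, temperature_k: float, n: int = 4000) -> float:
    """Fraction of total black-body radiance emitted between lo_um and hi_um.

    Integrates Planck's law with composite Simpson's rule and divides by the
    total radiance sigma*T^4/pi.
    """
    if n % 2:
        n += 1  # Simpson's rule needs an even number of intervals
    step = (hi_um - lo_um) * 1e-6 / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * spectral_radiance(lo_um * 1e-6 + i * step, temperature_k)
    band_radiance = total * step / 3.0
    return band_radiance / (SIGMA * temperature_k**4 / math.pi)


if __name__ == "__main__":
    skin_k = 27.0 + 273.15  # skin temperature used in the text
    for lo, hi in ((2.0, 20.0), (8.0, 12.0), (8.0, 14.0)):
        print(f"{lo:>4}-{hi:<4} um: {band_fraction(lo, hi, skin_k):.1%} of emitted power")
```

Under these assumptions, roughly three quarters of the emitted power lies between 2 and 20 μm and roughly a quarter within the 8–12 μm medical band.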

Infrared thermography is well suited to skin cancer detection because it is noninvasive and because tumours alter the skin temperature and its distribution [42]. Skin cancer cells are enlarged compared to normal skin cells due to the high rate of uncontrolled cell division [40]. Because of this high rate of cell division, cancer cells must convert more energy to run cellular processes [44]; this chemical process is called metabolism. The elevated metabolism raises the energy demand, which in turn leads to increased angiogenesis, the physiological process by which new blood vessels form from existing vessels to supply the extra energy required [45,46,47]. In conjunction with these increased energy requirements, melanoma skin lesions are thought to be warmer than the surrounding healthy skin by ΔT = 2–4 K [27,48,49,50]. IR imaging of melanoma skin lesions is therefore based on the detection of new blood vessels and the chemical changes associated with tumour development and growth [42,51]. Other skin tumour types, such as basal cell carcinoma, may form an encapsulating layer of involved cells that acts as a thermal insulator, resulting in a delayed thermoregulatory process [27,52]. González et al. [50] studied the vascularity of different skin lesions (melanoma and non-melanoma) and found that each cancer type has its own thermal signature.

2. Concepts of Thermography

2.1. Qualitative versus Quantitative Thermography

In qualitative thermography, the infrared data are presented as an image of the scene or sample, and this thermogram alone is sufficient to analyze or interpret the problem. It is used in search and rescue operations to locate warm human bodies, in site surveillance and in situations with poor visibility [53].

In contrast, quantitative thermography exploits the ability to detect and record the temperature of each pixel. This requires a calibrated infrared camera and correction for atmospheric interference, surface characteristics, emissivity, camera angle, distance, etc. [54].

2.2. Passive versus Active Thermography in Biomedical Applications

Passive thermography investigates the sample in its steady state, without applying an external thermal stress [13]. The passive approach tests materials and structures that are inherently at a different temperature than the ambient temperature [55]. Active thermography measures transient temperatures following an external thermal load. The thermal modulation can be cooling or heating of the sample, and the thermal load can be applied by conductive or convective heat transfer or by absorption of infrared radiation. The major drawbacks of thermography are exogenous disturbances, such as external heat sources and evaporative heat losses, which can introduce biases or degrade image quality [56].

2.2.1. Passive Thermography

Passive thermography is the most commonly used thermal imaging technique [13]. Thermograms are taken from a sample in a steady state, without external thermal excitation. They are examined for abnormal temperature differences, hot and cold spots or asymmetric temperature distributions that may indicate a health problem [42]. Passive thermal imaging is qualitative, and the information that can be retrieved is rather limited [13]. Hot or cold spots on the skin surface are influenced by various subcutaneous factors, such as metabolic processes or the presence of large blood vessels and bones. External factors also influence passive thermography measurements: the position of the patient, heat exchange conditions with the environment, recent ingestion of hot or cold beverages, time of day, etc. [43]. Other environmental conditions that may affect the measurements are room temperature, relative humidity, air circulation and the intrinsic conditions of the examination room [57]. These factors limit the interpretation of thermograms: assessing the diagnostic value of the skin temperature distribution is difficult and requires careful preparation of the patient under stable environmental conditions [58]. To limit the influence of such factors, some authors [59] have devised rigorous measurement procedures, which unfortunately severely limit the feasibility of passive thermography in routine clinical practice [13].

2.2.2. Active Thermography

Unlike passive thermography, active thermography applies a thermal excitation while an IR imaging device captures the temporal evolution of the temperature distribution [13]. Active thermography can be used to obtain quantitative information about the thermal properties of the sample. To use the quantitative data, a temperature calibration should be performed at known temperatures [43]. Various excitation sources can be used for active thermography, for example, laser heating, flash lamps, halogen lamps, electric heating, ultrasonic excitation, eddy currents, microwaves and others. Inhomogeneities or defects in materials distort the spatial temperature distribution and lead to temperature differences on the material surface. The main advantage of active thermography in the biomedical field is the possibility of a short thermal interaction with the sample: the thermal excitation should be shorter than the activation time of the biofeedback processes, which can affect the measurement results [58].

Thermal Excitation: Cooling vs. Heating

Thermal excitation can be achieved by heating or cooling the sample through various approaches. The first cooling setups for active thermography of human skin were based on conductive heat transfer, stimulating the skin with cold gel packs or balloons filled with a cold alcohol/water mixture [60]. These can generate large, uniformly distributed temperature gradients on the skin lesion, but some difficulties arise. Because heat is transferred by conduction, the cooling device must touch the skin lesion. It is therefore almost impossible to monitor the surface temperature of the tissue during the thermal excitation, and accurate synchronization between the IR detector and the excitation setup is difficult to achieve. Conductive cooling thus limits the acquisition of quantitative information [13].

For active thermography, heat can also be used as thermal excitation. Human skin can be heated by absorption of electromagnetic radiation, by conduction or by convection. Visible light cannot be used because the pigmentation of the lesion differs from that of the surrounding skin, which would result in heterogeneous heating [13]. A SWIR radiation source (about 2 μm) can be used instead, because skin absorption at these wavelengths is high and less affected by pigmentation [61]. However, heating produces only smaller temperature gradients, because the skin temperature is limited to 42 °C; higher values would damage living cells [58].

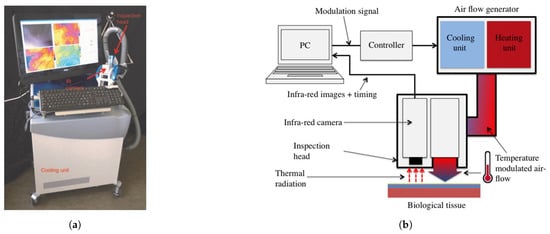

According to Bonmarin et al. [13], convective heat transfer is probably the optimal thermal excitation method for dermatological applications. The temperature of the airflow can be regulated, and relatively large temperature gradients can be applied. Because the heat is transferred by convection, nothing obstructs the view, and the skin surface temperature can be monitored with an IR camera throughout. As a noncontact excitation method, it also supports a hygienic clinical device.

These considerations led to the conclusion that cooling, although technically more difficult, is the better solution. Cooling the skin to 4 °C is acceptable and results in greater thermal contrast. The resulting large temperature gradient can be measured accurately and makes it possible to reveal the dominant internal heat flows, which reflect the internal structure of the tested region [58].
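The contrast advantage of cooling follows from simple arithmetic on the two temperature limits. The Python snippet below compares the headroom each approach leaves relative to a resting skin surface temperature of 32 °C; that baseline is an illustrative assumption not stated in the text, and the names are hypothetical.

```python
SKIN_BASELINE_C = 32.0  # assumed resting skin surface temperature (illustrative)
HEATING_LIMIT_C = 42.0  # heating limit quoted in the text [58]
COOLING_TARGET_C = 4.0  # acceptable cooling temperature quoted in the text [58]


def max_contrast_k(baseline_c: float, limit_c: float) -> float:
    """Largest surface temperature change (in K) between the baseline and a limit."""
    return abs(limit_c - baseline_c)


if __name__ == "__main__":
    heating = max_contrast_k(SKIN_BASELINE_C, HEATING_LIMIT_C)
    cooling = max_contrast_k(SKIN_BASELINE_C, COOLING_TARGET_C)
    print(f"heating: {heating:.0f} K, cooling: {cooling:.0f} K")  # heating: 10 K, cooling: 28 K
```

Under this assumption, cooling offers nearly three times the thermal headroom of heating, in line with the greater thermal contrast attributed to cooling.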

2.2.3. Lock-In Thermography

Lock-in thermography, or thermal wave imaging, is commonly used in industrial environments where nondestructive testing of materials is required [62]. Heat is introduced periodically at a specific lock-in frequency, and the local surface temperature modulation is evaluated and averaged over a number of periods [63]. The resulting surface temperature oscillations allow the detection of variations in the thermophysical properties beneath the surface of the sample [64]. Compared to steady-state thermography, lock-in thermography has a higher signal-to-noise ratio (SNR) [63]. The phase signal obtained at different lock-in frequencies can reveal anomalies at different depths [63]. The high SNR allows weak defect signals to be amplified, making them more visible in the phase image. The in-phase and out-of-phase signals can be calculated using Equations (2) and (3), respectively [63]. Bonmarin et al. [62] presented a lock-in thermal imaging setup for a proof-of-concept study on benign lesions, as shown in Figure 3a,b. Bhowmik et al. [65] conducted a numerical study on the detection of subsurface skin lesions using frequency modulated thermal wave imaging (FMTWI). FMTWI is an improved technique that is faster than lock-in thermography and provides better resolution of deeper defects while requiring a lower peak power of the incident heat flux [65].
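The link between lock-in frequency and probing depth can be illustrated with the thermal diffusion length μ = √(α/(π f)), the depth over which a periodic thermal wave of frequency f decays by a factor of e in a medium with thermal diffusivity α. This standard relation is added for illustration and is not taken from the cited studies; the skin diffusivity of 1.1 × 10⁻⁷ m²/s used below is an assumed, order-of-magnitude value.

```python
import math

ALPHA_SKIN_M2_S = 1.1e-7  # assumed thermal diffusivity of skin, m^2/s (illustrative)


def thermal_diffusion_length_m(diffusivity_m2_s: float, frequency_hz: float) -> float:
    """Depth at which the amplitude of a periodic thermal wave has decayed by 1/e."""
    if frequency_hz <= 0:
        raise ValueError("lock-in frequency must be positive")
    return math.sqrt(diffusivity_m2_s / (math.pi * frequency_hz))


if __name__ == "__main__":
    for f_hz in (1.0, 0.1, 0.01):
        mu_mm = thermal_diffusion_length_m(ALPHA_SKIN_M2_S, f_hz) * 1e3
        print(f"f = {f_hz:>5} Hz -> mu = {mu_mm:.2f} mm")
```

Because μ scales with 1/√f, lowering the lock-in frequency by a factor of 100 increases the probed depth tenfold, which is why sweeping the frequency can reveal anomalies at different depths.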

Figure 3. Lock-in thermography. (a) Lock-in device. Reprinted with permission from Ref. [62]. 2014, John Wiley & Sons A/S; (b) Description of the lock-in setup. Reprinted with permission from Ref. [62]. 2014, John Wiley & Sons A/S.

The amplitude A and phase ϕ can be calculated with Equations (4) and (5):
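The following Python sketch illustrates one common formulation of the lock-in computation for a single pixel: the temperature time series is correlated with sine and negative-cosine reference functions over an integer number of lock-in periods, and amplitude and phase follow from the two channels. Normalization and sign conventions differ between texts, so it should not be read as a transcription of Equations (2)–(5). It assumes a pixel signal of the form F(t) = D + A sin(2πf t − ϕ) sampled at a fixed number of frames per period; the function name and the synthetic test signal are hypothetical.

```python
import math
import random


def lock_in_demodulate(samples, frames_per_period):
    """Two-channel lock-in correlation of one pixel's temperature time series.

    Assumes samples F_k = D + A*sin(2*pi*k/N - phi) with N = frames_per_period
    and an integer number of periods, so the offset D averages out.
    Returns (S0, S90, amplitude, phase) with S0 = A*cos(phi), S90 = A*sin(phi).
    """
    n_total = len(samples)
    if frames_per_period < 3 or n_total == 0 or n_total % frames_per_period:
        raise ValueError("need >= 3 frames per period and whole periods")
    s0 = s90 = 0.0
    for k, value in enumerate(samples):
        angle = 2.0 * math.pi * k / frames_per_period
        s0 += value * math.sin(angle)    # in-phase (sine) weighting
        s90 -= value * math.cos(angle)   # out-of-phase (-cosine) weighting
    s0 *= 2.0 / n_total
    s90 *= 2.0 / n_total
    return s0, s90, math.hypot(s0, s90), math.atan2(s90, s0)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a reproducible synthetic pixel
    n_frames, n_periods = 16, 50
    pixel = [305.0 + 0.05 * math.sin(2 * math.pi * k / n_frames - 0.6) + rng.gauss(0.0, 0.02)
             for k in range(n_frames * n_periods)]
    _, _, amp, phase = lock_in_demodulate(pixel, n_frames)
    print(f"amplitude = {amp * 1e3:.1f} mK, phase = {phase:.2f} rad")
```

Averaging over many periods is what gives lock-in thermography its SNR advantage: uncorrelated noise partly cancels in the sums, while the modulated component adds up coherently.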

2.3. Infrared Cameras

This section is intended to provide a brief overview of the most important aspects in the selection of IR cameras for dermatological applications. A detailed look at state-of-the-art IR camera technology is beyond the scope of this review but can be found in numerous reviews on this topic [66,67,68,69]. For a comparison of the IR detector technologies chosen by other research groups in their studies, see Section 4.3. According to the International Academy of Clinical Thermology (IACT) thermography guidelines [70], the most important minimum specifications for clinical IRT devices are:

- Spectral response of 5–15 μm with a peak around 8–10 μm
- Noise-equivalent temperature difference (NETD) < 80 mK
- Minimal accuracy of ±2%
- Spatial resolution of 1 mm² at a measuring distance of 40 cm from the detector
- Fast, real-time capture of infrared data
- Absolute resolution > 19,200 temperature points
- Instantaneous field of view (IFOV) < 2.5 mrad
- Emissivity ϵ set to 0.98 (human skin)
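Several of these minimums are linked. Under the small-angle approximation, the footprint of one pixel on the skin is the IFOV multiplied by the working distance, so 2.5 mrad at 40 cm gives 1 mm, matching the 1 mm² spatial-resolution requirement; 19,200 temperature points correspond to a 160 × 120 detector. The Python sketch below checks a hypothetical camera datasheet against the list. The `CameraSpec` fields, the example values, and reading ">19,200" as a minimum (≥) are assumptions, and the spectral requirement is simplified to whether the band covers 8–10 μm.

```python
from dataclasses import dataclass


@dataclass
class CameraSpec:
    """Hypothetical datasheet values; field names are illustrative."""
    band_um: tuple        # (lower, upper) spectral response, micrometres
    netd_mk: float        # noise-equivalent temperature difference, mK
    accuracy_pct: float   # stated accuracy, +/- percent
    width_px: int
    height_px: int
    ifov_mrad: float      # instantaneous field of view per pixel, mrad


def pixel_footprint_mm(ifov_mrad: float, distance_mm: float) -> float:
    """Side length of one pixel on the skin (small-angle approximation)."""
    return ifov_mrad * 1e-3 * distance_mm


def check_iact_minimums(cam: CameraSpec, distance_mm: float = 400.0) -> dict:
    """Pass/fail flags for the listed minimum specifications."""
    footprint = pixel_footprint_mm(cam.ifov_mrad, distance_mm)
    return {
        "band covers 8-10 um": cam.band_um[0] <= 8.0 and cam.band_um[1] >= 10.0,
        "NETD < 80 mK": cam.netd_mk < 80.0,
        "accuracy within +/-2%": cam.accuracy_pct <= 2.0,
        # ">19,200" read as a minimum, i.e. at least 160 x 120 pixels
        "temperature points >= 19,200": cam.width_px * cam.height_px >= 19_200,
        "IFOV < 2.5 mrad": cam.ifov_mrad < 2.5,
        "pixel footprint <= 1 mm at 40 cm": footprint <= 1.0,
    }


if __name__ == "__main__":
    example = CameraSpec(band_um=(7.5, 14.0), netd_mk=50.0, accuracy_pct=2.0,
                         width_px=320, height_px=240, ifov_mrad=1.3)
    for requirement, ok in check_iact_minimums(example).items():
        print(f"{'PASS' if ok else 'FAIL'}  {requirement}")
```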