Cognition is the acquisition of knowledge by the mechanical process of information flow in a system. In animal cognition, input is received by the various sensory modalities and the output may occur as a motor or other response. The sensory information is internally transformed to a set of representations, which is the basis of cognitive processing. This is in contrast to the traditional definition, which is based on mental processes and originated from metaphysical philosophy.

- animal cognition

- cognitive processes

- physical processes

- mental processes

1. Definition of Cognition

1.1. A Scientific Definition of Cognition

Dictionaries commonly define cognition as the mental process of acquiring knowledge. However, this view originated from the assignment of mental processes to the act of thought. These mental processes originate from a metaphysical description of cognition that includes the concepts of consciousness and intentionality.[1][2] This view also assumes that objects in nature are reflections of a true and determined form.

Instead, a material description of cognition is restricted to the physical processes available in nature. An example is available from the study of primate face recognition, where measurements of facial features are the basis for object recognition.[3] This perspective also excludes the concept of an innate knowledge of objects, so cognition instead forms a representation of objects from their constituent parts.[4][5] Therefore, the physical processes of cognition are probabilistic rather than deterministic, since a specific object may vary in its parts.
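
As a rough illustration of recognition from measured features, the sketch below treats a face as a short vector of measurements and models each face-selective unit as responding to the projection of that vector onto a preferred axis, loosely following the axis-based code reported for primate face cells.[3] The feature names, axis directions, and the nearest-match decision rule are hypothetical simplifications chosen for the example, not values or methods from that study.

```python
import math

# Hypothetical measurements for three known faces:
# (inter-eye distance, face width, nose length), arbitrary units.
KNOWN_FACES = {
    "face_a": [1.0, 2.0, 0.5],
    "face_b": [1.5, 1.8, 0.9],
    "face_c": [0.8, 2.4, 0.4],
}

# Each hypothetical unit prefers one direction (axis) in feature space.
UNIT_AXES = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.6, 0.8, 0.0],
]


def unit_responses(features):
    """Response of each unit = projection of the feature vector onto its axis."""
    return [sum(a * f for a, f in zip(axis, features)) for axis in UNIT_AXES]


def identify(features):
    """Return the known face whose population response is closest (Euclidean)."""
    observed = unit_responses(features)

    def distance(name):
        return math.dist(observed, unit_responses(KNOWN_FACES[name]))

    return min(KNOWN_FACES, key=distance)


# A slightly perturbed measurement of face_b is still matched to face_b.
print(identify([1.45, 1.85, 0.85]))
```

Because nearby measurements produce nearby population responses, a face whose parts vary slightly still maps to the same identity, so recognition rests on measured parts rather than on an exact, predetermined template.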

1.2. Mechanical Perspective of Cognition

Scientific work generally accedes to a mechanical perspective of information processing in the brain. However, the traditional perspective, based on the duality of physical and mental processing, is retained in various academic disciplines. For example, there is a conjecture about the relationship between the human mind and any simulation of it.[6] This idea is based on assumptions about intentionality and the act of thought. However, a physical process of cognition is instead defined by the generation of action by neuronal cells, without dependence on a non-material process.[7]

Another example concerns the intention of moving a body limb, such as in the act of reaching for a cup. Studies have replaced the assignment of intentionality with a material interpretation of this motor action, and additionally show that the relevant neuronal activity occurs before a perceptual awareness of the motor action.[8]

Across the natural sciences, the neural system is studied at various biological scales, from the molecular level up to the higher level that involves the synthesis of information processing.[9][10] The higher level perspective is of particular interest since an analogous system exists in the neural network models of computer science.[11][12] This offers a comparative approach to understanding cognitive processes. However, at the lower scale, the artificial neural system is dependent on an abstract model of neurons and their synapses, so this scale is less comparable with an animal neural system.
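
The abstract model of a neuron used in artificial systems can be sketched in a few lines: a weighted sum of inputs, where each weight stands in for a synapse, passed through a nonlinearity. The sigmoid and the particular weights below are illustrative assumptions; the sketch mainly shows how much biological detail (ion channels, spike timing, dendritic structure) this abstraction omits, which is why the lower scale is less comparable with an animal neural system.

```python
import math


def artificial_neuron(inputs, weights, bias):
    """Abstract neuron: weighted sum of inputs (one weight per synapse),
    plus a bias, passed through a sigmoid to give an output between 0 and 1."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


# Two excitatory synapses (positive weights) and one inhibitory synapse
# (negative weight); all values are hypothetical.
rate = artificial_neuron([1.0, 0.5, 1.0], [0.8, 0.4, -1.2], bias=0.1)
print(round(rate, 3))
```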

1.3. Scope of this Definition

This definition of cognition is restricted to a set of mechanical processes. The process of cognition is also described from a broad perspective, with examples from the visual system and from deep learning approaches in computer science.

2. Cognitive Processes in the Visual System

2.1. Probabilistic Processes in Nature

The visual cortical system occupies about one-half of the cerebral cortex. Along with language processing in humans, vision is a major source of sensory input and recognition of the outside world. The complexity of these sensory systems reveals an important aspect of the evolutionary process, one that is observed across all cellular organisms and is a mechanism for the formation of countless novelties. Evolution depends on physical processes, such as mutation and population exponentiality, and on a geological time scale for constructing complexity at all scales of life.

An example of life's complexity is the formation of the camera eye. This form emerged over time from a simpler organ, such as an eye spot, and its occurrence depended on a number of sequential adaptations.[13][14] These presumably rare and unique events did not hinder the independent formation of a camera eye in both vertebrate animals and cephalopods. This reveals that evolution is a powerful generator of change in physical traits, although counterforces limit the number of varieties that can occur; examples include the finiteness of the genetic code and the existing physical traits of an organism. The latter is particularly relevant at the cellular level, in the organization and structure of cells.

The evolution of cognition is an analogous series of events to the origin of the camera eye. The probabilistic processes that led to complexity in the camera eye are also a driver of the origin of cognition and of the design of animal neural systems.

2.2. Abstract Encoding of Sensory Information

“The biologically plausible proximate mechanism of cognition originates from the receipt of high dimensional information from the outside world. In the case of vision, the sensory data consist of reflected light rays that are absorbed across a two-dimensional surface, the retinal cells of the eye. These light rays range across the electromagnetic spectra, but the retinal cells are specific to a small subset of all possible light rays”.[1]

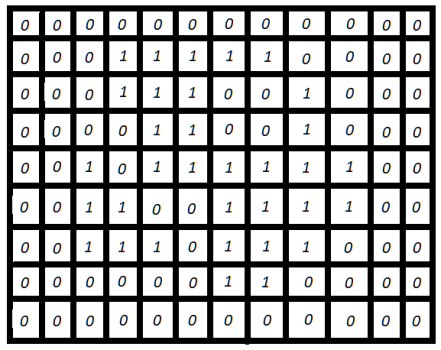

Figure 1 shows the above view, presented abstractly, as a sheet of neuronal cells that receives sensory input from the outside world. This input is processed at cell surface receptors and then communicated downstream to the neural system for further processing. The sensory neurons and their receptors may be imagined as a set of activation values that change over time, and succinctly described as a dynamical system.
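
The description of the sensory sheet as a dynamical system can be made concrete with a minimal sketch, assuming each cell is a leaky integrator whose activation relaxes toward its current input, da/dt = (-a + s)/tau, integrated with simple Euler steps. The leaky-integrator model, the 3x3 grid, and the parameters `dt` and `tau` are assumptions for illustration; real retinal cells also interact laterally, which this sketch ignores.

```python
def step(activations, stimulus, dt=0.1, tau=1.0):
    """One Euler step of da/dt = (-a + s) / tau for every cell on the sheet."""
    return [
        [a + dt * (-a + s) / tau for a, s in zip(row_a, row_s)]
        for row_a, row_s in zip(activations, stimulus)
    ]


def simulate(stimulus, steps):
    """Start from a silent sheet and let it evolve under a constant stimulus."""
    rows, cols = len(stimulus), len(stimulus[0])
    state = [[0.0] * cols for _ in range(rows)]
    for _ in range(steps):
        state = step(state, stimulus)
    return state


# A hypothetical 3x3 patch of the sheet where light falls only on the center cell.
light = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
sheet = simulate(light, steps=50)
print([round(v, 3) for v in sheet[1]])
```

With these settings each step moves a cell 10% of the way toward its input, so the center cell approaches 1.0 while unlit cells stay at zero.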

Figure 1. An abstract representation of information that is received by a sensory organ, such as the light rays that are absorbed by neuronal cells across the retinal surface of a camera eye.

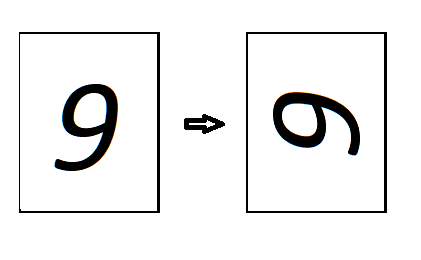

The information processing at the sensory organs is tractable to study, but the downstream processes of cognition are less understood at the proximate level. These processes include the generalization of knowledge from sensory input data, also referred to as transfer learning.[5][15][16] Transfer learning is dependent on segmenting the sensory world and identifying sensory objects in a way that is resistant to a change of perspective (Figure 2).[17] In computer science, there is a model[4] designed to provide segmentation and robust recognition of objects. This approach involves sampling the sensory input, including the parts of sensory objects, and then encoding the information in an abstract form for downstream processes. The encoding scheme is expected to include a set of discrete representational levels of an unlabeled object, and then to employ a consensus-based, probabilistic approach in matching these representations with a known object in memory.
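
A toy version of matching an unlabeled object against memory by its parts might look like the following. It assumes each object is stored as a set of rotation-invariant part descriptors (the descriptor names are hypothetical), scores agreement with the Jaccard overlap between observed and stored parts, and converts the scores into probabilities with a softmax. This is a simplified stand-in for the consensus-based approach described above, not the model in [4].

```python
import math

# Hypothetical memory: each known object is described by parts whose
# descriptors do not change when the image is rotated.
MEMORY = {
    "nine": {"loop", "stem", "loop_joins_stem"},
    "zero": {"loop", "oval_outline"},
    "one": {"stem"},
}


def jaccard(a, b):
    """Fraction of parts shared between two part sets."""
    return len(a & b) / len(a | b)


def match_probabilities(observed_parts, temperature=0.5):
    """Score agreement between the observed parts and each stored object,
    then turn the scores into a probability for each candidate (softmax)."""
    scores = {name: jaccard(observed_parts, parts) for name, parts in MEMORY.items()}
    exps = {name: math.exp(s / temperature) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


probs = match_probabilities({"loop", "stem", "loop_joins_stem"})
print(max(probs, key=probs.get))
```

Because the scores are graded, a partial view such as `{"loop", "stem"}` still favors "nine" while leaving some probability on the alternatives.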

Figure 2. The first panel is a visual drawing of the digit nine (9), while the next panel is the same digit transformed by rotation of the image.

3. Models of General Cognition

3.1. Algorithmic Description of Cognition

Experts in the sciences have investigated whether there is an algorithm that explains brain computation.[18] They concluded that this problem is not yet solved, even though all natural processes are potentially representable by a quantitative model. Further, they identified information flow in the brain as a product of a non-linear dynamical system, a complex phenomenon that is analogous to the physics of fluid flow. Another expectation is that the system is highly dimensional and not representable by a simple mathematical description.[18][19] They also recommended an empirical approach for disentangling the system.

An artificial neural system, such as the deep learning architecture, has great potential for explaining animal cognition. This is expected because artificial systems are built from parts and interrelationships that are known, whereas in nature the origin and history of the neural system is obscured by time and events, and the understanding of its parts requires experimentation that is often imprecise and confounded with error of all types.

3.2. Encoding of Knowledge in Cognitive Systems

It is possible to hypothesize about a model of object representation in the brain and its artificial analog, such as the deep learning architecture. First, these cognitive systems are expected to encode objects by their parts, or elements, a topic covered above.[3][4][5] Second, the encoding is expected to be a stochastic process. The artificial system holds its encoding scheme in the weight values that are assigned to the interconnections among nodes of the network. It is further expected that the brain functions similarly at this level, since both systems are based on non-linear dynamic principles and a distributed representation of objects.[5][18][20][21]
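
A classic, minimal illustration of knowledge held in connection weights is a Hopfield-style associative memory. It is offered as an analogy, not as a claim about how the cited deep learning systems or the brain actually encode objects: each stored pattern is spread across all of the weights at once, and a noisy cue is completed by the network dynamics. For simplicity this version updates deterministically, whereas the text above expects the encoding to be stochastic; the patterns are hypothetical.

```python
def train_hopfield(patterns):
    """Hebbian rule: w_ij = (1/N) * sum over patterns of x_i * x_j, with no
    self-connections. Every pattern contributes to every weight."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, cue, max_steps=10):
    """Synchronous updates x_i <- sign(sum_j w_ij * x_j) until the state is stable."""
    state = list(cue)
    for _ in range(max_steps):
        fields = [sum(w * x for w, x in zip(row, state)) for row in weights]
        new_state = [1 if h >= 0 else -1 for h in fields]
        if new_state == state:
            break
        state = new_state
    return state


pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hopfield([pattern_a, pattern_b])
noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]  # pattern_a with its first element flipped
print(recall(w, noisy_a) == pattern_a)
```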

These encoding schemes are expected to be highly abstract, and not of a deterministic design that results from a solely top-down process. Since cognition is also considered a non-linear dynamical system, the encoding of the representations is expected to be highly distributed among the parts of the neural network.[18][21] This is testable both in an artificial system and in the brain.

Moreover, a physical interpretation of cognition requires the matching of patterns for the generalization of knowledge of the outside world. This is consistent with a view of cognition as a statistical machine that relies on sampling to achieve robustness in its output. With advances in deep learning methods, such as the invention of the transformer architecture,[5][22] it is possible to sample and search for exceedingly complex sequence patterns. Also, the sampling of the world occurs within a sensory modality, such as vision or speech, and is complemented by a sampling among the modalities, which potentially leads to increased robustness during information processing.[23]
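
The core operation of the transformer architecture[22] is scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, which lets every element of a sequence weigh every other element when building its output. The sketch below is a single head with no learned projection matrices (the queries, keys, and values are simply the input vectors), an assumption made to keep the example short.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query is compared with every key,
    and the output is the correspondingly weighted mix of the values."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# A hypothetical sequence of three 2-dimensional token vectors attending to itself.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = attention(tokens, tokens, tokens)
print([[round(w, 3) for w in row] for row in attn])
```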

3.3. Future Directions

3.3.1. Dynamic Properties of Cognition

One question is whether animal cognition is as interpretable as a deep learning system. This question arises because of the difficulty in disentangling the mechanisms of the animal brain, whereas it is possible to record the changing states of an artificial system, since its design is bottom-up. If the artificial system is similar enough, then it is possible to gain insight into the mechanisms of animal cognition.[5][24] However, this assumption may fail. For example, the mammalian brain is known to be highly dynamic, such as in the rates of sensory input and in the downstream activation of the internal representations.[18] These dynamic properties are not feasibly modeled in current artificial systems, because of constraints of hardware design and efficiency.[18] This is an impediment to the design of an artificial system that approximates animal cognition, although there are ideas to model these properties, such as an overlay architecture with “fast weights” for providing a form of true recursion in a neural network.[5][18]
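
The “fast weights” idea can be sketched using one published formulation (Ba and colleagues, 2016, which is not among the sources cited here): alongside the ordinary slow weights, a network keeps a matrix A(t) that decays at every step and is incremented by the outer product of the current hidden state, A(t) = λA(t-1) + ηh(t)h(t)^T. Multiplying by A(t) then retrieves a blend of recent states. Only the update and retrieval steps are shown; the surrounding recurrent network, the values of `decay` (λ) and `rate` (η), and the hidden states are assumptions.

```python
def outer(u, v):
    """Outer product u v^T as a nested list."""
    return [[ui * vj for vj in v] for ui in u]


def update_fast_weights(fast, hidden, decay=0.9, rate=0.5):
    """A(t) = decay * A(t-1) + rate * h(t) h(t)^T: a rapidly changing, fading memory."""
    increment = outer(hidden, hidden)
    return [
        [decay * a + rate * inc for a, inc in zip(row_a, row_inc)]
        for row_a, row_inc in zip(fast, increment)
    ]


def retrieve(fast, query):
    """A(t) @ query: returns a blend of recent hidden states that resemble the query."""
    return [sum(a * q for a, q in zip(row, query)) for row in fast]


fast = [[0.0, 0.0], [0.0, 0.0]]
for h in ([1.0, 0.0], [0.0, 1.0]):  # two hypothetical hidden states, oldest first
    fast = update_fast_weights(fast, h)

# The older state has decayed once, so it is retrieved more weakly than the newer one.
print(retrieve(fast, [1.0, 0.0]), retrieve(fast, [0.0, 1.0]))
```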

Artificial neural network systems continue to scale in size and efficiency. This is accompanied by empirical approaches to explore sources of error in these systems, which depends on a thorough understanding of how these models are constructed. One avenue for increasing robustness in the output is to combine various kinds of sensory data, such as from the visual and language domains. Another approach is to establish unbiased measures of the reliability of output from one of these models. It should be noted that animal cognition is not immune to error or bias either; in human cognition, there is a well documented bias in the perception of speech.[25]
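
The idea of combining sensory domains for robustness can be sketched with the simplest possible scheme, late fusion: each modality produces its own class probabilities and the two are averaged. The labels, probabilities, and equal weighting are hypothetical; multimodal transformers such as the one in [23] instead combine modalities inside a shared model.

```python
def fuse(prob_a, prob_b, weight_a=0.5):
    """Late fusion: weighted average of two modalities' class probabilities,
    renormalized so the result sums to 1."""
    labels = set(prob_a) | set(prob_b)
    fused = {
        label: weight_a * prob_a.get(label, 0.0) + (1 - weight_a) * prob_b.get(label, 0.0)
        for label in labels
    }
    total = sum(fused.values())
    return {label: p / total for label, p in fused.items()}


vision = {"cup": 0.45, "bowl": 0.55}    # the image alone slightly favors "bowl"
language = {"cup": 0.9, "bowl": 0.1}    # an accompanying caption mentions drinking
fused = fuse(vision, language)
best = max(fused, key=fused.get)
print(best, round(fused[best], 3))
```

Here the confident language signal overrides the ambiguous visual one; with a different weighting, or a confident but wrong modality, fusion can also propagate errors, which is why reliability measures remain necessary.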

3.3.2. Generalization of Knowledge

Another area of importance is the property of generalization in a model of cognition. This goal is approachable by processing the levels of representation of sensory input, the process that presumably underlies the ability of animals to generalize and form knowledge.[4][26][27] In a larger context, this generalizability is based on the premise that information about the outside world is compressible, such as in the repeatability of patterns in sensory information.
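
The premise that patterned information is compressible can be checked directly with a general-purpose compressor. The sketch uses Python's standard `zlib` module as a stand-in measure of repeatability; the byte sequences are hypothetical stand-ins for sensory data.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


rng = random.Random(0)
patterned = bytes([10, 20, 30, 40] * 1000)                # a repeating "sensory" pattern
noise = bytes(rng.randrange(256) for _ in range(4000))    # no pattern to exploit

print(round(compression_ratio(patterned), 3), round(compression_ratio(noise), 3))
```

The repeating sequence shrinks to a small fraction of its size, while the random bytes do not compress at all, which is the contrast the premise relies on.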

There is also the question of how to reuse knowledge outside the environment where it is learned, "being able to factorize knowledge into pieces which can easily be recombined in a sequence of computational steps, and being able to manipulate abstract variables, types, and instances".[5] It seems relevant to have a model of cognition that describes the high level representations of these "pieces", the parts of the whole, even in the case of an abstract object. However, the dynamic states of internal representations in cognition may also contribute to the processes of abstract reasoning.

3.3.3. Embodiment in Cognition

Lastly, there is uncertainty about the dependence of animal cognition on the outside world. This dependence has been characterized as the phenomenon of embodiment, so animal cognition is also an embodied cognition, even in the case where the outside world is a machine simulation of it.[18][28][29] This is in essence a property of a robot, a mechanical system whose functions are fully dependent on input from and output to the outside world. Although a natural system receives input, produces output, and learns from the world at a time scale constrained by the physical world, an artificial system is not as constrained, such as in reinforcement learning,[29][30][31] a method that has been used to approximate the sensorimotor function of animals.
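
Reinforcement learning can be illustrated at toy scale with tabular Q-learning, in which an agent learns the value of each action from rewards alone, through interaction with its environment. The corridor world, reward, and hyperparameters below are assumptions for the example; the cited work[29] uses deep networks and far richer 3-dimensional environments.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning in a corridor: the agent starts at state 0 and
    receives reward 1 when it steps into the last state (the goal)."""
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]   # q[state][action]; 0 = left, 1 = right
    for _ in range(episodes):
        state = 0
        for _ in range(100):                     # cap the episode length
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)        # explore, or break ties at random
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The goal is terminal, so its value is not bootstrapped.
            target = reward if next_state == goal else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == goal:
                break
    return q


q_table = q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in q_table[:-1]]
print(policy)
```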

A paper by DeepMind[29] describes artificial agents in a 3-dimensional space that learn in a continually changing world. The method employs deep reinforcement learning in conjunction with a dynamic generation of environments, so that each world is unique. Each world has artificial agents that learn to handle tasks and receive rewards for completing objectives. An agent observes a pixel image of an environment along with a "text description of their goal".[29] Experience with these tasks is sufficiently generalizable that the agents are often capable of adapting to tasks that were not encountered in previously generated worlds. This approach reflects an animal that is embodied in a 3-dimensional world and is learning interactively by the performance of physical tasks. It is known that animals navigate and learn from the world around them, so the above approach is a thoughtful experiment for creating similar circumstances in the virtual realm.

4. Abstract Reasoning

4.1. Abstract Reasoning as a Cognitive Process

Abstract reasoning is often associated with a process of thought, but the elements of this process are ideally represented by physical processes in the brain only. This restriction offers constraints on the emergence of abstract reasoning, such as in the formation of new ideas. Further, a process of abstract reasoning may be compared against the more intuitive forms of cognition in vision and speech recognition. Both these forms of cognition occur by sensory input to the neural systems of the brain. Without this sensory input, the layers of the neural systems are not expected to encode new information by some pathway, as expected in the recognition of visual objects. Therefore, it is expected that any information system is dependent on an external input source for learning.

It follows that abstract reasoning is formed from input sources that are received by the neural systems of the brain. If there is no input of information that is relevant to a pathway of abstract reasoning, then the system is not expected to substantially encode that pathway. This also raises the question of whether abstract reasoning is comprised of one pathway or many pathways, and of the possible contribution of other pathways, such as those of visual cognition. It is probable that there is no sharp division between abstract reasoning and the other types of reasoning, and there is likely more than one form of abstract reasoning, such as the reasoning used for solving a class of related visual puzzles by eye.

Another hypothesis is that these input sources for abstract reasoning derive mainly from the internal representations of the brain. If true, then a model of abstract reasoning would mainly involve the true forms of abstract objects, in contrast to the recognition of an object by reconstruction from sensory input.

Of greater certainty is that abstract reasoning is dependent on an input source, so there is an expectation that deep learning methods, which model the non-linear dynamics of a phenomenon, are sufficient to model one or more pathways involved in abstract reasoning. This form of reasoning involves the recognition of objects that are not necessarily sensory objects, with definable properties and interrelationships. As with the training process for learning sensory objects and their properties, it is expected that there is a training process for learning the forms and properties of abstract objects. This class of problem is of interest since the universe of abstract objects is boundless, and their properties and interrelationships are not constrained by the external and physical world.

4.2. Abstract Reasoning in General

A model of higher level cognition includes the process of abstract reasoning.[5] This is a pathway or pathways that are expected to learn the higher level representations of sensory objects, such as from vision or speech, so that novel input generates output based on a generalizable rule set. This rule set may include a single rule or a sequence of rules. One method for a solution is for a deep learning system to learn the rule set, such as in the case of visual puzzles that are solved by use of a logical operation.[32] This is likely similar to one of the major ways that a person masters the game of chess: the memorization of prior patterns and events of chess pieces on the game board.
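
A minimal sketch of learning a rule for a puzzle solved by a logical operation treats each panel as a small binary grid and asks which operation (AND, OR, or XOR, applied cell by cell) turns the first two panels of each example row into the third. The panel encoding and the three candidate operations are assumptions, loosely in the spirit of the puzzles in [32]; a deep learning system would have to learn such a rule from pixels rather than enumerate it.

```python
OPERATIONS = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "XOR": lambda a, b: a != b,
}


def apply_op(op, panel_a, panel_b):
    """Combine two binary panels cell by cell with the named logical operation."""
    return [
        [int(OPERATIONS[op](x, y)) for x, y in zip(row_a, row_b)]
        for row_a, row_b in zip(panel_a, panel_b)
    ]


def infer_rule(example_rows):
    """Return the first operation consistent with every (a, b, result) row, else None."""
    for op in OPERATIONS:
        if all(apply_op(op, a, b) == c for a, b, c in example_rows):
            return op
    return None


# Hypothetical 2x2 panels: two complete rows, then a row with its third panel missing.
examples = [
    ([[1, 0], [1, 1]], [[0, 0], [1, 0]], [[1, 0], [0, 1]]),
    ([[0, 1], [0, 0]], [[1, 1], [0, 0]], [[1, 0], [0, 0]]),
]
rule = infer_rule(examples)
answer = apply_op(rule, [[1, 1], [0, 1]], [[0, 1], [1, 1]])
print(rule, answer)
```

Returning `None` when no candidate fits corresponds to concluding that no detectable rule set exists among the candidates considered.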

Another kind of visual puzzle is the Rubik's Cube. However, in this case the puzzle has a known final state, where each face of the cube shares a unique color. In the general case of visual puzzles, if there is no detectable rule set to solve the puzzle, then a person or a machine system should conclude that no rule set exists. If there is a detectable rule set, then there must be patterns of information, including missing information, that allow detection of the rule set. It is also possible that a particular rule set, or one with many steps, is not solvable by a person through construction of a rule set.

The pathway to a solution can include the repeated testing of potential rule sets against an intermediate or final state of the puzzle. This iterative process is approachable by a heuristic search algorithm.[5] However, these puzzles are typically lower dimensional as compared to abstract verbal problems, such as in the general process of inductive reasoning. The acquisition of rule sets for verbal reasoning requires a search for patterns in a higher dimensional space. In either case of pattern searching, whether complex or simple, the search depends on the detection of patterns that represent a set of rules.
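
The repeated testing of candidate rule sets can be sketched as a greedy best-first search over short sequences of primitive steps, where the heuristic is how far a candidate's outputs are from the known final states. The three integer primitives, the error heuristic, and the length limit are hypothetical choices for illustration, not the search method of the cited work.

```python
import heapq

PRIMITIVES = {
    "add_1": lambda x: x + 1,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
}


def run(program, x):
    """Apply each primitive step of a candidate rule set in order."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x


def error(program, examples):
    """Heuristic: total distance between the program's outputs and the targets."""
    return sum(abs(run(program, x) - y) for x, y in examples)


def search_rules(examples, max_length=4):
    """Greedy best-first search: always expand the candidate with the lowest error.
    Returns the first program that fits every example, or None if none is found."""
    counter = 0                                   # tie-breaker so heapq never compares lists
    frontier = [(error([], examples), counter, [])]
    while frontier:
        err, _, program = heapq.heappop(frontier)
        if err == 0:
            return program
        if len(program) == max_length:
            continue
        for name in PRIMITIVES:
            child = program + [name]
            counter += 1
            heapq.heappush(frontier, (error(child, examples), counter, child))
    return None


# Hidden rule for this hypothetical puzzle: double the input, then add one.
print(search_rules([(1, 3), (2, 5), (3, 7)]))
```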

It is simpler to imagine a logical operation as the pattern that offers a solution, but it is expected that a process of inductive reasoning involves higher dimensional representations than an operator that combines boolean values. It is also probable that these representations are dynamic, so that it is possible to sample the space of many valid representations.

4.3. Future Directions

4.3.1. Embodiment in a Virtual and Abstract World

While the phenomenon of embodiment refers to our 3-dimensional world, this is not necessarily a complete model for reasoning about abstract concepts. However, it is plausible that at least some abstract concepts are modeled in a virtual 3-dimensional world, and similarly, DeepMind[29] showed solutions to visual problems across a generated space of 3-dimensional worlds.

The population of tasks and their distribution are also constructed by DeepMind's machine learning approach. They show that learning a large distribution of tasks can provide knowledge to solve tasks that are not necessarily within the confines of the original task distribution.[29][30] This further shows a generalizability in solving tasks, along with the promise that increasing complexity in the worlds would lead to an expanded distribution of tasks.

However, the problem of abstract concepts extends beyond the conventional sensory representations as formed by the processes of cognition. Examples include visual puzzles with solutions that are abstract and require the association of patterns that extend beyond the visual realm, and the symbolic representations in mathematics.[33]

Combining these two approaches, it is possible to construct a world that is not a reflection of 3-dimensional space as inhabited by animals, but instead a virtual world that consists of abstract objects and sets of tasks.[30] Visual and symbolic puzzles, such as chess and related boardgames,[31] are solvable by deep learning approaches, but the machine reasoning is not generalized across a space of abstract environments and objects. The question is whether the abstract patterns used to solve chess are also useful in solving other kinds of puzzles. It seems a valid hypothesis that there is at least an overlap in the use of abstract reasoning between these visual puzzles and the synthesis of knowledge from other abstract objects and their interactions,[29] such as in solving problems by the use of mathematical symbols and their operators. Since we are capable of abstract thought, it is plausible that the generation of a distribution of general abstract tasks would lead to a working system that solves abstract problems in general.

If, instead of a dynamic generation of 3-dimensional worlds and objects, there is a vast and dynamic generation of abstract puzzles, for example, then the deep reinforcement learning approach could train on solving these problems and acquire knowledge of these tasks.[29] The question is whether the distribution of these tasks is generalizable to an unknown set of problems, those unrelated to the original task distribution, and likewise whether the task space is compressible.
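
A dynamic generation of abstract puzzles could start as simply as the sketch below, which samples sequence-completion tasks from two hypothetical rule families (arithmetic and geometric progressions) with random parameters. The families, parameter ranges, and the held-out split are illustrative assumptions; holding out an entire family is one way to ask whether knowledge transfers to problems unrelated to the training distribution.

```python
import random


def generate_puzzle(rng):
    """Sample one abstract task: a rule family, a visible prefix, and the hidden answer."""
    family = rng.choice(["arithmetic", "geometric"])
    start = rng.randint(1, 9)
    step = rng.randint(2, 5)
    if family == "arithmetic":
        terms = [start + i * step for i in range(5)]
    else:
        terms = [start * step ** i for i in range(5)]
    return {"family": family, "prompt": terms[:4], "answer": terms[4]}


def generate_task_distribution(n, seed=0):
    """Generate n tasks reproducibly from a seeded random number generator."""
    rng = random.Random(seed)
    return [generate_puzzle(rng) for _ in range(n)]


tasks = generate_task_distribution(1000)

# Hold out an entire rule family to probe generalization beyond the training distribution.
train = [t for t in tasks if t["family"] == "arithmetic"]
held_out = [t for t in tasks if t["family"] == "geometric"]
print(len(train), len(held_out), tasks[0])
```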

4.3.2. Reinforcement Learning and Generalizability

Google Research showed that an unmodified reinforcement learning approach is not necessarily robust for acquiring knowledge of tasks outside the trained task distribution.[30] Therefore, they introduced an approach that incorporates a measure of similarity among the worlds generated by the reinforcement learning procedure. This similarity is estimated as behavioral similarity, corresponding to the salient features by which an agent finds success in any given world. Given that these salient features are shared among the worlds, the agents have a path for generalizing knowledge for success in worlds outside their learned experience. Procedurally, the salient features are acquired by a contrastive learning procedure, which embeds these values of behavioral similarity in the neural network itself.[34]
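
The contrastive step can be illustrated with a generic InfoNCE-style loss of the kind used in contrastive representation learning[34]: an anchor embedding is pulled toward a "positive" embedding (here, a state with similar behavior) and pushed away from "negatives". This is a simplified sketch; the method in [30] derives soft targets from its behavioral similarity metric rather than using a single hard positive, and the embeddings below are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """-log( exp(s_pos / t) / sum over all candidates of exp(s / t) ).
    Small when the anchor is closer to its positive than to every negative."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


anchor = [1.0, 0.0]
good = info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])  # positive is nearby
bad = info_nce(anchor, [0.0, 1.0], [[0.9, 0.1]])                 # a negative is nearby
print(round(good, 4), round(bad, 4))
```

Minimizing this loss over many such comparisons shapes the network so that states with similar behavior receive similar embeddings.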

The above reinforcement learning approach is dependent on both a deep learning framework and an input data source. The source of input data is typically a 2- or 3-dimensional environment where an agent learns to accomplish tasks within the confines of the worlds and their rules.[29][30] One approach is to represent the salient features of the tasks and the worlds in a neural network. As Google Research showed,[30] the process required an additional step to extract the salient information for creating better models of the tasks and their worlds. They found that this method is more robust for the generalization of tasks. Similarly, in animal cognition, it is expected that the salient features for generalizing a task are also stored in a neuronal network.

Therefore, a naive input of visual data from a 2-dimensional environment is not an efficient means for coding tasks that consistently generalize across environments. To capture the higher dimensional information in a set of related tasks, Google Research extended the reinforcement learning approach to better capture the task distribution,[30] and it may be possible to mimic this approach by similar methods. These task distributions provide structured data for representing the dynamics of tasks among worlds, and therefore generalize and encode the higher dimensional and dynamic features in a lower dimensional form.
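
Principal component analysis is one standard way to encode high-dimensional observations in a lower dimensional form (dimensionality reduction in neuroscience is reviewed in [27]). The sketch finds the top principal component by power iteration on the covariance matrix; it is not the method of [30], and the three-value "pixel" vectors are hypothetical data that vary along a single direction.

```python
import math


def top_principal_component(points, iterations=100):
    """Power iteration on the covariance matrix. Returns the unit direction of
    greatest variance and each point's 1-dimensional coordinate along it."""
    n, d = len(points), len(points[0])
    means = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - means[j] for j in range(d)] for p in points]
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]
    v = [1.0] + [0.0] * (d - 1)
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:          # start vector carries no variance; stop early
            break
        v = [x / norm for x in w]
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, scores


# Hypothetical 3-pixel observations that all vary along the direction (1, 2, 2).
observations = [[t, 2 * t, 2 * t] for t in range(5)]
direction, codes = top_principal_component(observations)
print([round(x, 3) for x in direction], [round(s, 3) for s in codes])
```

Each 3-value observation is reduced to a single number along the learned direction without losing information, because these data vary along only one direction.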

It is difficult to imagine the relationship between two different environments. A game of checkers and a game of chess appear as different games and environment systems. Encoding the dynamics of each of these games in a deep learning framework may show that they relate in an unintuitive, abstract way.[29] This concept was expressed in the above article,[30] namely that short paths in a larger pathway may provide salient and generalizable features. In the case of boardgames, the salient features may not correspond to a naive perception of visual relatedness. Likewise, our natural form of abstract reasoning captures patterns in these boardgames, and these patterns are not entirely recognized by a single rule set at the level of our awareness, but instead are likely represented at a higher dimensional level in the neural network.

For emulating a reasoning process, extracting the salient features from a pixel image is a complex problem, and the pathway may involve many sources of error. Converting images to a lower dimensional form, particularly for the salient subtasks, allows for a greater expectation of generalization and repeatability in the patterns of objects and their events. Where it is difficult to extract the salient features of a system, it may be possible to translate the objects and events in the system to text-based descriptions, a process that has been studied as a measure of interpretability in the procedure. The error in translation to text descriptions is an observable phenomenon.

Lastly, since the advanced cognitive processes in animals involve a widespread use of dynamic representations, typically called mental representations, it is plausible that the tasks are not merely generalizable, but may originate in the varied information systems of the brain, such as speech, vision, and memory. Therefore, the tasks would be expressed in different sensory forms, although the lower dimensional representations are the more generalizable, providing a better substrate for the recognition of patterns, and are essential for the general process of abstract reasoning.

References

- Friedman, R. Cognition as a Mechanical Process. NeuroSci 2021, 2, 141-150.

- Vlastos, G. Parmenides’ theory of knowledge. In Transactions and Proceedings of the American Philological Association; The Johns Hopkins University Press: Baltimore, MD, USA, 1946; pp. 66–77.

- Chang, L.; Tsao, D.Y. The code for facial identity in the primate brain. Cell 2017, 169, 1013-1028.

- Hinton, G. How to represent part-whole hierarchies in a neural network. 2021, arXiv:2102.12627.

- Bengio, Y.; LeCun, Y.; Hinton, G. Deep Learning for AI. Commun ACM 2021, 64, 58-65.

- Searle, J.R.; Willis, S. Intentionality: An essay in the philosophy of mind. Cambridge University Press, Cambridge, UK, 1983.

- Huxley, T.H. Evidence as to Man's Place in Nature. Williams and Norgate, London, UK, 1863.

- Haggard, P. Sense of agency in the human brain. Nat Rev Neurosci 2017, 18, 196-207.

- Ramón y Cajal, S. Textura del Sistema Nervioso del Hombre y de los Vertebrados trans. Nicolas Moya, Madrid, Spain, 1899.

- Kriegeskorte, N.; Kievit, R.A. Representational geometry: integrating cognition, computation, and the brain. Trends Cognit Sci 2013, 17, 401-412.

- Hinton, G.E. Connectionist learning procedures. Artif Intell 1989, 40, 185-234.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw 2015, 61, 85-117.

- Paley, W. Natural Theology: or, Evidences of the Existence and Attributes of the Deity, 12th ed., London, UK, 1809.

- Darwin, C. On the origin of species. John Murray, London, UK, 1859.

- Goyal, A.; Didolkar, A.; Ke, N.R.; Blundell, C.; Beaudoin, P.; Heess, N.; Mozer, M.; Bengio, Y. Neural Production Systems. 2021, arXiv:2103.01937.

- Scholkopf, B.; Locatello, F.; Bauer, S.; Ke, N.R.; Kalchbrenner, N.; Goyal, A.; Bengio, Y. Toward Causal Representation Learning. In Proceedings of the IEEE, 2021.

- Wallis, G.; Rolls, E.T. Invariant face and object recognition in the visual system. Prog Neurobiol 1997, 51, 167-194.

- Rina Panigrahy (Chair), Conceptual Understanding of Deep Learning Workshop. Conference and Panel Discussion at Google Research, May 17, 2021. Panelists: Blum, L; Gallant, J; Hinton, G; Liang, P; Yu, B.

- Gibbs, J.W. Elementary Principles in Statistical Mechanics. Charles Scribner's Sons, New York, NY, 1902.

- Griffiths, T.L.; Chater, N.; Kemp, C.; Perfors, A.; Tenenbaum, J.B. Probabilistic models of cognition: Exploring representations and inductive biases. Trends in Cognitive Sciences 2010, 14, 357-364.

- Hinton, G.E.; McClelland, J.L.; Rumelhart, D.E. Distributed representations. In Parallel distributed processing: explorations in the microstructure of cognition; Rumelhart, D.E., McClelland, J.L., PDP research group, Eds., Bradford Books: Cambridge, Mass, 1986.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. 2017, arXiv:1706.03762.

- Hu, R.; Singh, A. UniT: Multimodal Multitask Learning with a Unified Transformer. 2021, arXiv:2102.10772.

- Chaabouni, R.; Kharitonov, E.; Dupoux, E.; Baroni, M. Communicating artificial neural networks develop efficient color-naming systems. Proceedings of the National Academy of Sciences 2021, 118.

- Petty, R.E.; Cacioppo, J.T. The elaboration likelihood model of persuasion. In Communication and Persuasion; Springer: New York, NY, 1986, pp. 1-24.

- Chase, W.G.; Simon, H.A. Perception in chess. Cognitive psychology 1973, 4, 55-81.

- Pang, R.; Lansdell, B.J.; Fairhall, A.L. Dimensionality reduction in neuroscience. Curr Biol 2016, 26, R656-R660.

- Deng, E.; Mutlu, B.; Mataric, M. Embodiment in socially interactive robots. 2019, arXiv:1912.00312.

- Open Ended Learning Team; Stooke, A.; Mahajan, A.; Barros, C.; Deck, C.; Bauer, J.; Sygnowski, J.; Trebacz, M.; Jaderberg, M.; Mathieu, M.; McAleese, N. Open-ended learning leads to generally capable agents. 2021, arXiv:2107.12808.

- Agarwal, R.; Machado, M.C.; Castro, P.S.; Bellemare, M.G. Contrastive behavioral similarity embeddings for generalization in reinforcement learning. 2021, arXiv:2101.05265.

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; Lillicrap, T.; Simonyan, K.; Hassabis, D. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 2018, 362, 1140-1144.

- Barrett, D.; Hill, F.; Santoro, A.; Morcos, A.; Lillicrap, T. Measuring abstract reasoning in neural networks. In International Conference on Machine Learning, PMLR, 2018.

- Schuster, T.; Kalyan, A.; Polozov, O.; Kalai, A.T. Programming Puzzles. 2021, arXiv:2106.05784.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning, PMLR, 2020.

Encyclopedia